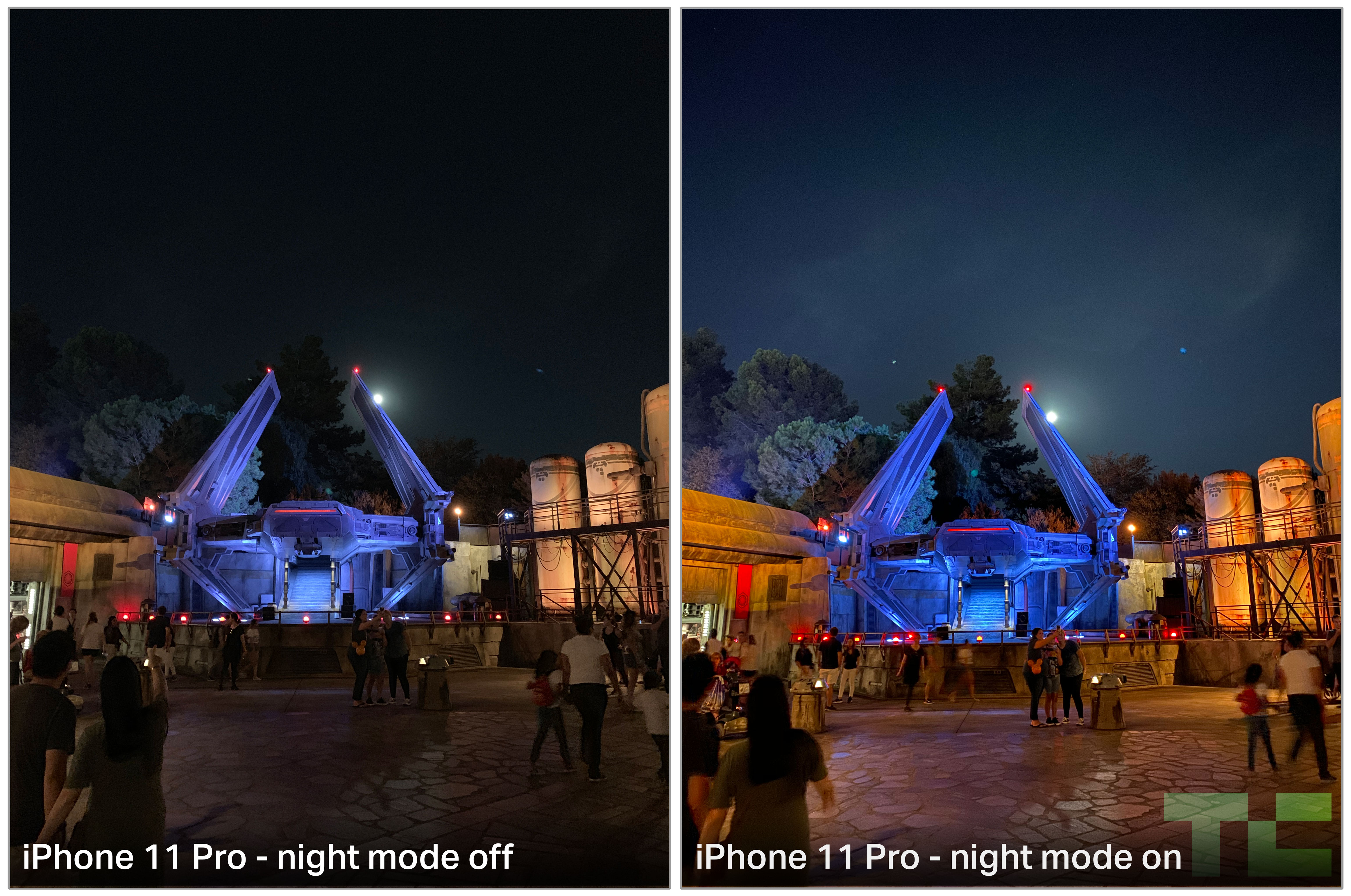

Let’s get this out of the way right up front: iPhone 11’s Night Mode is great. It works, it compares extremely well to other low-light cameras and the exposure and color rendition are best in class, period.

If that does it for you, you can stop right here. If you want to know more about the iPhone 11, augmented photography and how the new phones performed on a trip to the edge of a galaxy far, far away, read on.

As you’re probably now gathering, yes, I took the new iPhones to Disneyland again. If you’ve read my other reviews from the parks, you’ll know that I do this because they’re the ideal real-world test bed for a variety of capabilities. Lots of people vacation with iPhones.

The parks are hot and the network is crushed. Your phone has to act as your ticket, your food ordering tool, your camera and your map. Not to mention your communication device with friends and family. It’s a demanding environment, plain and simple. And, I feel, a better organic test of how these devices fare than sitting them on a desk in an office and running benchmark tools until they go dead.

I typically test iPhones by using one or two main devices and comparing them with the ones they’re replacing. I’m not all that interested in having the Android versus iPhone debate because I feel that it’s a bit of a straw man, given that platform lock-in means that fewer and fewer people over time are making a truly agnostic platform choice. They’re deciding based on heredity or services (or price). I know this riles the zealots in both camps, but most people just don’t have the luxury of being religious about these kinds of things.

Given the similarities in models (more on that later), I mainly used the iPhone 11 Pro for my testing, with tests of the iPhone 11 where appropriate. I used the iPhone XS as a reference device. Despite my lack of desire to do a platform comparison, for this year’s test, given that much discussion has been had about how Google pulled off a coup with the Pixel 3’s Night Sight mode, I brought along one of those, as well.

I tried to use the iPhone XS only to compare when comparisons were helpful and to otherwise push the iPhone 11 Pro to handle the full load each day. But, before I could hit the parks, I had to set up my new devices.

Setup

Much of the iPhone’s setup process has remained the same over the years, but Apple has added one new feature worth mentioning: Direct Transfer. This option during setup sits, philosophically, between restoring from a backup made on a local Mac and restoring from an iCloud backup.

Direct Transfer is designed to move your information from one device to another over a direct, peer-to-peer connection between the two. Specifically, it uses Apple Wireless Direct Link (AWDL), which also powers AirDrop and AirPlay. The transfer is initiated using a particle cloud link similar to the one you see when setting up Apple Watch. Once it’s initiated, your old and new iPhones will be out of commission for two to three hours, depending on how much information you’re transferring.

The data is encrypted in transit. Information directly transferred includes Messages history, full-resolution photos that are already stored on your phone and any app data attached to installed apps. The apps themselves are not transferred because Apple’s app-signing procedure locks apps to a device, so they must be (automatically) re-downloaded from the App Store, a process that begins once the Direct Transfer is complete. This also ensures that you’re getting the appropriate version of each app.

Once you’ve done the transfer, the data on your phone is then “rationalized” with iCloud. This helps in cases where you have multiple devices, and one of those other devices could have been making changes in the cloud that now need to be updated on the device.

Apple noted that Direct Transfer is good for a few kinds of people:

- People without an iCloud backup

- People who have not backed up in a while

- People in countries where internet speeds are not broadly strong, like China

- People who don’t mind waiting longer initially for a “more complete” restore

Basically what you’ve got here is a choice between having your iPhone “ready” immediately for basic functionality (iCloud backup restore) and waiting a bit longer to have far more of your personal data accessible from the start, without waiting for iCloud downloads of original photos, Messages history, etc.

Direct Transfer does not carry over Face ID or Touch ID settings, Apple Pay information or Mail data, aside from usernames and passwords.

After iPhone Migration is complete, the Messages content from the device will be reconciled with the Messages content in iCloud to ensure they are in sync. The same is true for Photos stored in iCloud.

Anecdotally, I experienced a couple of interesting things during my use of Direct Transfer. My first phone took around 2.5 hours to complete, but I still found that the Messages archive alerted me that it needed to continue downloading archived messages in the background. Apple suggested that this may be due to this rationalizing process.

I also noticed that when multiple Direct Transfer operations ran simultaneously, devices sitting side by side took much longer to complete. This is very likely due to local radio interference, and Apple has a solution for it: a wired version of Direct Transfer that uses the Camera Connection Kit on the source device and connects the two phones via USB. Ideally, Apple says, the transfer speeds are identical, but the wired option side-steps the wireless interference problem entirely, which is why Apple will use it for in-store Direct Transfer restores of new iPhones.

My experience with Direct Transfer wasn’t glitch-free, but it was nice having what felt like a “more complete” device from the get-go. Of note, Direct Transfer does not appear to transfer all keychain data intact, so you will have to re-enter some passwords.

Design and Display

I’ve been naked for years. That is, team no case. Cases are annoying to me because of the added bulk. They’re also usually too slippery or too sticky. I often wear technical clothing, too, and the phones go into slots designed for quick in/out or fun party tricks, like dropping into your hand with the pull of a clasp. This becomes impossible with most cases.

Apple provided the clear cases for all three iPhones, and I used them to keep them looking decent while I reviewed them, but I guarantee you my personal phone will never see a case.

I’m happy to report that the iPhone 11 Pro’s matte finish back increases the grippiness of the phone on its own. The smooth back of the iPhone 11 and the iPhone XS always required a bit of finger oil to get into a condition where you could reliably pivot them with one hand going in and out of a pocket.

Traveling through the parks you get sweaty (in the summer), as well as greasy with that Plaza fried chicken and turkey legs and all kinds of kid-related spills. Having the confidence of a case while you’re in these fraught conditions is something I can totally understand. But day-to-day it’s not my favorite.

I do like the unified design identity across the line: the iPhone 11 pairs a blasted-glass camera bump with a glossy back, and the iPhone 11 Pro flips that combination. It provides a design language link even though the color schemes are different.

At this point either you’ve bought into the camera bump being functional and cool or you hate its guts. Adding another camera is not going to do much to change the opinion of either camp. The iPhone 11 Pro and Pro Max have a distinctly Splinter Cell vibe about them now. I’m sure you’ve seen the jokes about iPhones getting more and more cameras; well, yeah, that’s not a joke.

I think that Apple’s implementation feels about the best it could be here. The iPhone 11 Pro is already thicker than the previous generation, but there’s no way it’s going to get thick enough to swallow a bump this high. I know you might think you want that, too, but you don’t.

Apple gave most reviewers the Midnight Green iPhone 11 Pro/Max and the minty Green iPhone 11. If I’m being honest, I prefer the mint. Lighter and brighter is just my style. In a perfect world, I’d be able to rock a lavender iPhone 11 Pro. Alas, this is not the way Apple went.

The story behind the Midnight Green, as it was explained to me, begins with Apple’s colorists calling this a color set to break out over the next year. The fashion industry concurs, to a degree. Mint, seafoam and “neon” greens, which were hot early in the year, have given way to sage, crocodile and moss. Apple’s Midnight Green is also a dark, muted color that Apple says is ideal to give off that Pro vibe.

The green looks nearly nothing like any of the photographs I’ve seen of it on Apple’s site.

In person, the Midnight Green reads as dark gray in anything but the most direct indoor light. Outdoors, the treated stainless band has an “80s Mall Green” hue that I actually really like. The back also opens up quite a bit, presenting as far more forest green than it does inside. Overall, though, this is a very muted color that is pretty buttoned up. It sits comfortably alongside neutral-to-staid colors like the Space Gray, Silver and Gold.

The Silver option is likely to be my personal pick this time around just because the frosted-white back looks so hot — the first time I won’t have gone gray or black in a while.

Apple’s new Super Retina XDR display has a 2,000,000:1 contrast ratio and reaches up to 1,200 nits with HDR content and 800 nits with non-HDR content. What does this mean out in the sun at the park? Not a whole lot, but the screen is slightly easier to read and see detail on in sunny conditions. The “extreme dynamic range” in Apple’s new XDR terminology is about luminance (brightness, measured in nits), not color, so the color gamut remains the same. However, I have noticed that HDR images look slightly flatter on the iPhone XS than they do on the iPhone 11 Pro. The iPhone 11’s screen, while decent, does not compare to the rich blacks and great contrast range of the iPhone 11 Pro. It’s one of two major reasons to upgrade.

Apple’s proprietary 3D Touch system has gone the way of the dodo with the iPhone 11. The reasoning behind this was that Apple realized it would never be able to ship the system economically or viably on iPad models, so it canned 3D Touch in favor of Haptic Touch, bringing more consistency across the lineup.

By and large it works fine, though it’s a little odd for 3D Touch users at first. You retain peek and quick actions but lose pop, for instance, because there’s no additional pressure threshold. Most of the actions you probably use 3D Touch for, like the camera or flashlight or home screen app shortcuts, work just fine.

I was bullish on 3D Touch because I felt there was an opportunity to add an additional layer of context for power users: a hover layer for touch. Unfortunately, I believe there were people at Apple (and outside of it) who were never convinced that the feature was going to be discoverable or useful enough, so it never got the investment it needed to succeed. Or, and I will concede this is a strong possibility, they were right and I was wrong and this just was never going to work.

Performance and Battery

Apple’s A13 Bionic features efficiency cores that are 20% faster and use 40% less power than the A12 Bionic, which is part of where the impressive battery life improvements come from. Overall clock speed and benchmark scores are up around 20%. The performance cores also use 30% less power and the GPU uses 40% less power. The Neural Engine doesn’t escape either: it uses 15% less power. (All compared to the iPhone XS.)

My focus on core power usage is not to say this feels any less juicy. All new iPhones feel great out of the box because Apple (usually) works to neatly match power requirements with its hardware, and any previous-generation software is going to have plenty of headroom on new silicon. No change here.

The biggest direct effect that this silicon work will have on most people’s lives will likely be battery life.

The iPhone 11 Pro has a larger battery than the iPhone XS, with a different, higher-voltage chemistry. That, coupled with the power savings mentioned above and more in the screen and other components, means better battery life.

My battery tests over several days at the parks point to Apple’s claims about improvements over the iPhone XS being nearly dead-on. Apple claims that the iPhone 11 Pro lasts four hours longer than the iPhone XS. The iPhone XS came in at roughly 9.5 hours in tests last year and the iPhone 11 Pro came in nearly bang-on at 12 hours — in extremely challenging conditions.

It was hot, the networks were congested and I was testing every feature of the camera and phone I could get my hands on. Disneyland has some Wi-Fi in areas of the park, but the coverage is not total, so I relied on LTE for the majority of my usage. This included on-device processing of photos and video (of which I shot around 40 minutes or so each day). It also included using Disney’s frustrating park app, about which I could write a lot of complaints.

I ordered food, browsed Twitter while in lines, let the kids watch videos while the wife and I had a necessary glass of wine or six and messaged continuously with family and team members. The battery lasted significantly longer on the iPhone 11 Pro with intense usage than the iPhone XS, which juuuust edged out my iPhone X in last year’s tests.

One of the reasons I clone my current device and run it that way instead of creating some sort of artificially empty test device is that I believe that is the way most people will be experiencing the phone. Only weirdos like device testers and Marie Kondo acolytes are likely to completely wipe their devices and start fresh on purchase of a new iPhone.

I’m pretty confident you’ll see an improvement in the battery, as well. I’ve done this a lot, and these kinds of real-world tests at theme parks tend to put more of the kind of strains you’ll see in daily life on the phone than a bench test running an artificial web browsing routine. On the other hand, maybe you’re a worker at a bot farm and I’ve just touched a nerve. If so, I am sorry.

Also, an 18W power adapter, the same one that ships with iPad Pro, comes in the iPhone 11 Pro box. Finally, etc. It is very nice having the majority of my cables have at least one USB-C end now, because I can use multi-port GaN chargers from Anker and power bricks that have USB-C. Apple’s USB-C to Lightning cables are now a slightly thicker gauge and support data transfer as well as the 18W brick. The bigger charger means faster charging: Apple claims up to 50% charge in 30 minutes with the new charger, which feels about like what I experienced.

It’s quicker and much nicer for topping off while nursing a drink and a meatball at the relatively secret upstairs bar at the Wine Country Trattoria in DCA. There’s an outlet behind the counter; just ask to use it.

Unfortunately, the iPhone 11 (non-Pro) still comes with a 5W charger. This stinks. I’d love to see the 18W become standard across the line.

Oh, about that improved Face ID angle — I saw, maybe, a sliiiiiiight improvement, if any. But not that much. A few degrees? Sometimes? Hard to say. I will be interested to see what other reviewers found. Maybe my face sucks.

Camera and Photography

Once upon a time you could relatively easily chart the path of a photograph’s creation. Light passed through the lens of your camera onto a medium like film or chemically treated paper. A development process was applied, a print was made and you had a photograph.

When the iPhone 8 was released I made a lot of noise about how it was the first of a new wave of augmented photography. That journey continues with the iPhone 11.

https://twitter.com/panzer/status/1171489666727477249?s=20

This is what makes the camera augmented on the iPhone 11, and what delivers the most impressive gains of this generation: not new glass, not the new sensors, but a processor specially made to perform machine learning tasks.

What we’re seeing in the iPhone 11 is a blended apparatus that happens to include three imaging sensors, three lenses, a scattering of motion sensors, an ISP, a machine learning-tuned chip and a CPU all working in concert to produce one image. This is a machine learning camera. But as far as the software that runs iPhone is concerned, it has one camera. In fact, it’s not really a camera at all, it’s a collection of devices and bits of software that work together toward a singular goal: producing an image.

This way of thinking about imaging affects a bunch of features, from Night Mode to HDR and beyond, and the result is the best camera I’ve ever used on a phone.

But first, let’s talk new lenses.

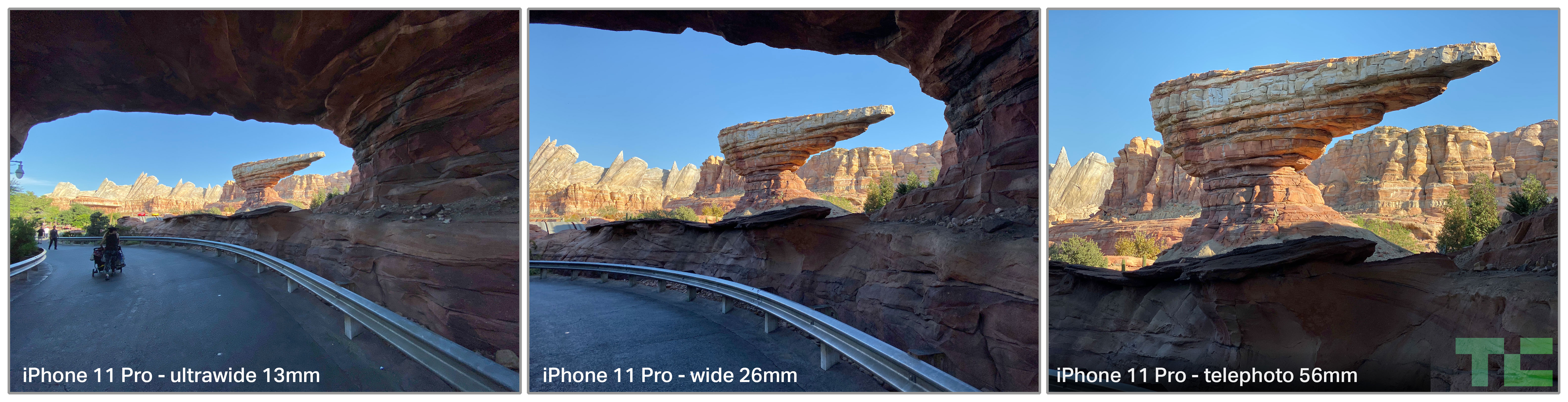

Ultra Wide

Both the iPhone 11 and the iPhone 11 Pro get a new “ultra wide angle” lens that Apple is calling a 13mm. In practice it delivers about what you’d expect from a roughly 13mm lens on a full-frame SLR — very wide. Even with edge correction it has the natural and expected effect of elongating subjects up close and producing some dynamic images. At a distance, it provides options for vistas and panoramic images that weren’t available before. Up close, it does wonders for group shots and family photos, especially in tight quarters where you’re backed up against a wall.
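For a rough sense of just how wide that is, here is the standard diagonal field-of-view calculation. It assumes that 13mm is a 35mm-equivalent focal length, that the lens behaves like an ideal rectilinear lens, and that the reference is a 36 × 24 mm full-frame sensor, whose diagonal is about 43.3 mm. Edge correction and the exact crop Apple uses mean the shipping camera may differ slightly.

$$
\theta = 2\arctan\left(\frac{d}{2f}\right) = 2\arctan\left(\frac{43.3\ \text{mm}}{2 \times 13\ \text{mm}}\right) \approx 2 \times 59.0^\circ \approx 118^\circ
$$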

In my testing, the ultra-wide showed off extremely well, especially in bright conditions. It allowed for great close-up family shots, wide-angle portraits that emphasized dynamism and vistas that really opened up possibilities for shooting that haven’t been on iPhone before.

Portrait mode overall has had a nice improvement in edge detection, doing a better job with fine hair, glasses and complicated background patterns — normally a portrait mode Achilles’ heel. It almost completely nails this crazy fence that trips up older iPhones.

One clever detail here is that when you shoot at 1x or 2x, Apple blends the live view of the wider angle lenses directly into the viewfinder. They don’t just show you the wide with crop marks over it, they are piping in actual feeds from the sensor so that you get a precise idea of how the image might look, while still letting you see that you have other options outside of the frame. It’s the camera viewfinder engineer version of stunting.

I loved shooting people with it up close, but that won’t be for everyone. I’d guess most people will like it for groups and for landscapes. But I found it great to grab fun tight shots of people or really intimate moments that feel so much more personal when you’re in close.

Of note: The ultra-wide lens does not have optical image stabilization on either the iPhone 11 or iPhone 11 Pro. This makes it a much trickier proposition to use in low light or at night.

The ultra-wide camera cannot be used with Night Mode because its sensor does not have 100% focus pixels and, of course, no OIS. The result is that wide-angle night shots must be held very steady or soft images will result.

The ultra-wide lens coming to both phones is great. It’s a wonderful addition and I think people will get a ton of use out of it on the iPhone 11. If Apple had to add only one lens there, I think the ultra-wide was the better choice, because group shots are likely far more common than landscape photography.

The ultra-wide lens is also fantastic for video. Because of the natural inward crop of video (it uses less of the sensor, so it feels more cramped), the standard wide lens has always felt a little claustrophobic. Taking videos on the carousel riding along with Mary Poppins, for instance, I was unable to get her and Burt in frame at once with the iPhone XS, but was able to do so with the iPhone 11 Pro. Riding the Matterhorn, you get much more of the experience and less “person’s head in front of you.” Same goes for Cars, where the ride is so dominated by the windscreen. I know these are very specific examples, but you can imagine how similar scenarios could play out at family gatherings in small yards, indoors or in other cramped locations.

One additional tidbit about the ultra-wide lens: You may very well have to find a new grip for your phone. The lens is so wide that your finger may show up in some of your shots because your knuckle is in frame. It happened to me a bunch over the course of a few days, until I found a grip lower on the phone. iPhone 11 Pro Max users will probably not have to worry.

HDR and Portrait Improvements

Because of those changes to the image pathway I talked about earlier, the already solid HDR images get a noticeable improvement in portrait mode. The Neural Engine works on all HDR images coming out of the iPhone’s cameras, tone mapping and fusing image data from various physical sensors to make a photo. It could use pixels from one camera for highlight detail and pixels from another for the edges of a frame. I went over this system extensively back in 2016, and it has only gotten more sophisticated with the addition of the Neural Engine.

The system should take another big leap forward when Deep Fusion launches, but I was unable to test that yet.

For now, we can see additional work that the Neural Engine puts in with Semantic Rendering. This process involves your iPhone doing facial detection on the subject of a portrait, isolating the face and skin from the rest of the scene and applying a different path of HDR processing on it than on the rest of the image. The rest of the image gets its own HDR treatment, then the two images are fused back together.

This is not unheard of in image processing. Most photographers worth their salt will give faces a different pass of adjustments from the rest of an image, masking off the face so that it doesn’t turn out too flat or too contrasty or come out with the wrong skin tones.

The difference here, of course, is that it happens automatically, on every portrait, in fractions of a second.
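To make the masking idea concrete, here is a toy sketch of mask-based tone mapping in Python. Everything in it is a hypothetical illustration rather than Apple’s pipeline: pixels are normalized luminance values, a soft face mask (which the Neural Engine would produce in the real system) is assumed to exist already, and each region gets a simple gamma curve before the two renderings are blended. The face gets a gentler shadow lift, which is the kind of choice that avoids the flat-face look described above.

```python
"""Toy sketch of mask-based ("semantic") tone mapping.

Hypothetical illustration, not Apple's pipeline: pixels are normalized
luminance values in [0, 1], a soft face mask has already been computed,
and each region gets its own simple gamma tone curve before fusion.
"""


def gamma_curve(value: float, gamma: float) -> float:
    """Power-law tone curve; gamma < 1 lifts shadows, and smaller lifts more."""
    return value ** gamma


def semantic_tone_map(pixels, face_mask, face_gamma=0.9, scene_gamma=0.6):
    """Tone-map face and scene pixels separately, then blend by mask weight.

    face_mask holds weights in [0, 1], where 1.0 means "definitely face".
    A soft mask avoids visible seams where the two renderings meet.
    """
    if len(pixels) != len(face_mask):
        raise ValueError("pixels and face_mask must be the same length")
    fused = []
    for value, weight in zip(pixels, face_mask):
        face_version = gamma_curve(value, face_gamma)    # gentle lift keeps facial contrast
        scene_version = gamma_curve(value, scene_gamma)  # stronger lift opens up shadows
        fused.append(weight * face_version + (1.0 - weight) * scene_version)
    return fused


if __name__ == "__main__":
    # Same dark input value twice; the face pixel gets the gentler curve.
    print(semantic_tone_map([0.25, 0.25], [1.0, 0.0]))
```

Blending with a soft weight rather than a hard cutoff is a deliberate choice here: pixels along the edge of the face get a mix of both curves, so there is no visible line where one treatment stops and the other starts.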

The results are portraits that look even better on iPhone 11 and iPhone 11 Pro. Faces don’t have the artificially flat look they could sometimes get with the iPhone XS — a result of the HDR process that is used to open up shadows and normalize the contrast of an image.

Look at these two portraits, shot at the same time in the same conditions. The iPhone 11 Pro is far more successful at identifying backlight and correcting for it across the face and head. The result is better contrast and color, hands down. And this was not an isolated experience; I shot many portraits side by side and the iPhone 11 Pro was the pick every time, by especially wide margins when the subject was backlit, which is very common with portraiture.

Here’s another pair. The differences are more subtle, but look at the color balance between the two. The skin tones are warmer, more olive and (you’ll have to trust me on this one) truer to life on the iPhone 11 Pro.

And yes, the High Key Mono works, but is still not perfect.

Night Mode

Now for the big one. The iPhone 11 finally has a Night Mode. I wouldn’t really call it a mode, though, because you don’t have to enable it; it just kicks in automatically when it thinks it can help.

On a technical level, Night Mode is a function of the camera system that strongly resembles HDR. It does several things when it senses that the light levels have fallen below a certain threshold:

- It decides on a variable number of frames to capture based on the light level, the steadiness of the camera according to the accelerometer and other signals.

- The ISP then grabs these bracketed shots, some longer, some shorter exposure.

- The Neural Engine is relatively orthogonal to how Night Mode works, but it’s still involved because it is used for semantic rendering across all HDR imaging in iPhone 11.

- The ISP then works to fuse those shots based on foreground and background exposure and whatever masking the Neural Engine delivers.
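The steps above (pick a frame count, capture brackets, fuse) can be sketched in a few lines of Python. This is a toy model under loudly stated assumptions: the lux scale, the 0-to-1 shake score, the frame limits and both function names are hypothetical, and the real pipeline also aligns frames and applies the Neural Engine’s masks, which this sketch leaves out. The merge divides each pixel by its exposure time so that short and long frames land on a common brightness scale, skips blown-out samples, and gives longer exposures more weight because they carry less relative noise.

```python
"""Toy sketch of a Night Mode-style capture plan and bracketed merge.

Every threshold, default and function name here is hypothetical; Apple
has not published its actual parameters. Frame alignment and the Neural
Engine's semantic masks are deliberately left out.
"""


def clamp01(x: float) -> float:
    return max(0.0, min(1.0, x))


def choose_frame_count(scene_lux: float, shake: float,
                       min_frames: int = 3, max_frames: int = 12) -> int:
    """Darker scenes get more frames; a shakier camera gets fewer.

    shake is a normalized 0..1 score, e.g. derived from motion-sensor data.
    """
    darkness = clamp01((10.0 - scene_lux) / 10.0)  # 0 at 10+ lux, 1 at 0 lux
    steadiness = 1.0 - clamp01(shake)
    return round(min_frames + (max_frames - min_frames) * darkness * steadiness)


def merge_bracketed(frames, clip_level: float = 0.98):
    """Merge (exposure_seconds, pixel_values) frames into relative radiance.

    Dividing each pixel by its exposure time puts all frames on a common
    scale. Clipped (blown-out) samples are skipped, and longer exposures
    get more weight because they carry less relative noise.
    """
    shortest = min(exposure for exposure, _ in frames)
    merged = []
    for i in range(len(frames[0][1])):
        weighted_sum = 0.0
        total_weight = 0.0
        for exposure, values in frames:
            if values[i] >= clip_level:
                continue  # saturated sample carries no usable detail
            radiance = values[i] / exposure
            weighted_sum += exposure * radiance
            total_weight += exposure
        if total_weight == 0.0:
            # Every sample clipped: fall back to the brightest level the
            # shortest exposure could record (a lower bound).
            merged.append(clip_level / shortest)
        else:
            merged.append(weighted_sum / total_weight)
    return merged
```

In use, `merge_bracketed([(0.5, [0.2]), (1.0, [0.4]), (0.25, [0.1])])` agrees on the same underlying brightness from all three frames, because each value scales with its exposure time; a pixel that blows out in the long frames is recovered from the short one.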

The result is a shot that brightens dark-to-very-dark scenes enough to turn them from throwaway images into something well worth keeping. In my experience, it was actually difficult to find scenes dark enough to make the effect really intense. The new 33% ISO improvement in the wide camera and 42% improvement in the telephoto over the iPhone XS already help a lot.

But once you do find the right scene, you see detail and shadow pop and it becomes immediately evident even before you press the shutter that it is making it dramatically brighter. Night Mode works only in 1x and 2x shooting modes because only those cameras have the 100% focus pixels needed to do the detection and mapping that the iPhone 11 needs to make the effect viable.

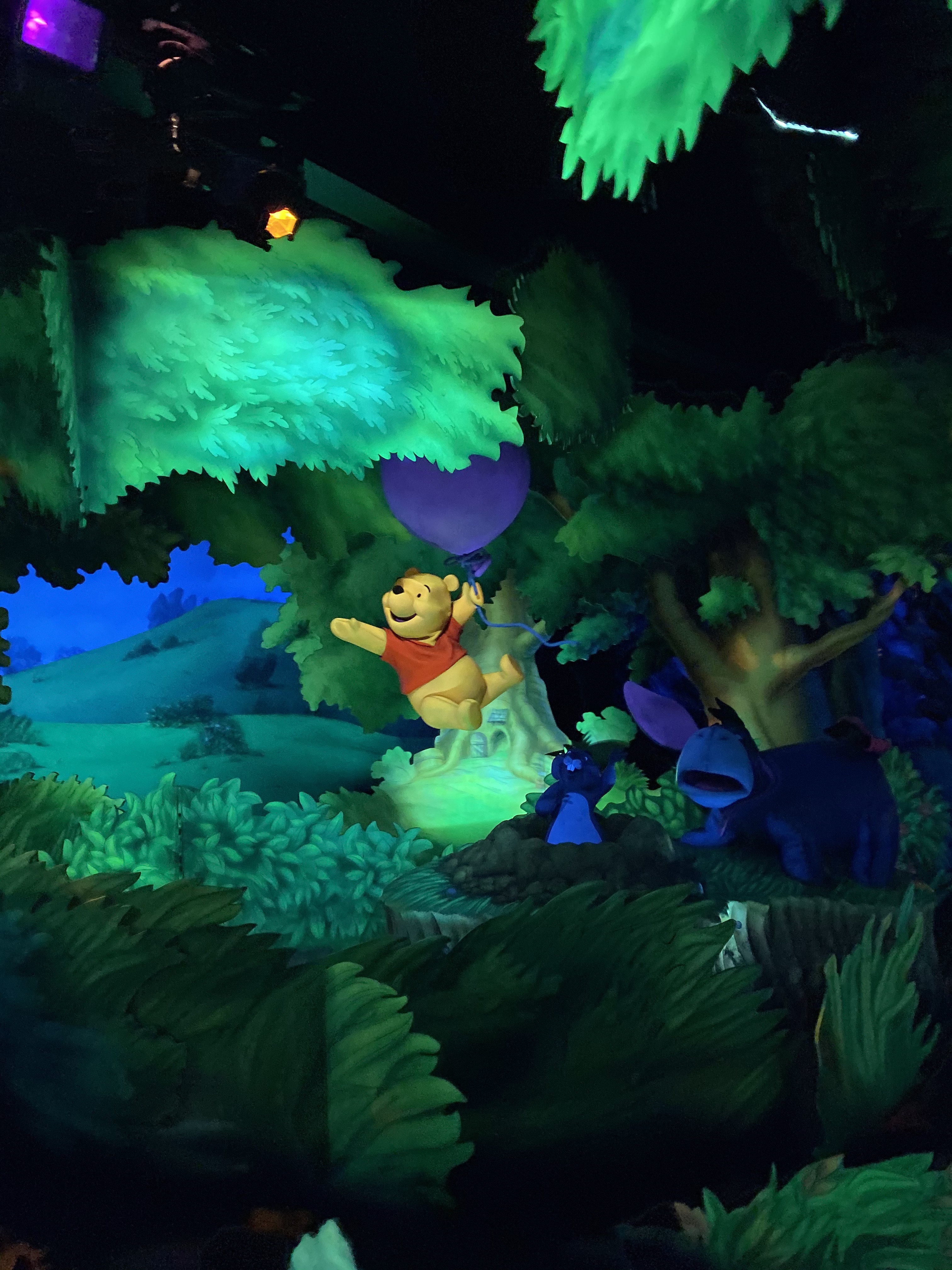

I have this weird litmus test I put every new phone camera through where I take it on a dark ride, like Winnie the Pooh, to see if I can get any truly sharp, usable image. It’s a great test because the black light is usually on, the car is moving and the subject is moving. Up until this point I have succeeded exactly zero times. But the iPhone 11 Pro pulled it off. Not perfect, but pretty incredible, all things considered.

A few observations about Night Mode:

- The night images still feel like night time. This is the direct result of Apple making a decision not to open every shadow and brighten every corner of an image, flaring saturation and flattening contrast.

- The images feel like they have the same genetic makeup as an identical photo taken without Night Mode. They’re just clearer and the subject is brighter.

- Because of the semantic mapping working on the image, along with other subject detection work, the focal point of the image should be clearer/brighter, but the setting and scene does not all come up at once like a broad gain adjustment.

- iPhone 11, like many other “night modes” across phones, has issues with moving subjects. It’s best if no one is moving or they are moving only very slightly. How much this matters depends on the length of the exposure, which runs from 1 to 3 seconds.

- On a tripod or another stationary object, Night Mode will automatically extend up to a 10-second exposure. This allows for some great night photography effects, like light painting or trailing.
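The exposure behavior in the last two observations (roughly 1 to 3 seconds handheld, up to 10 seconds when the phone sits still) can be modeled as a simple ramp with a stillness check. Only those time limits come from the observations above; the lux scale, the gyroscope variance threshold, the linear ramp and the function names are all hypothetical assumptions for illustration.

```python
"""Toy sketch of choosing a Night Mode exposure length.

Hypothetical illustration: the 1-3 second handheld range and the
10-second stationary ceiling mirror the behavior described above, but
the lux scale, the gyro threshold and the linear ramp are assumptions.
"""
from statistics import pvariance

HANDHELD_RANGE = (1.0, 3.0)  # seconds
STATIONARY_MAX = 10.0        # seconds, e.g. on a tripod


def is_stationary(gyro_samples, threshold: float = 1e-4) -> bool:
    """Treat near-zero rotation-rate variance as 'mounted, not handheld'."""
    return pvariance(gyro_samples) < threshold


def exposure_seconds(scene_lux: float, gyro_samples) -> float:
    """Ramp from the minimum exposure toward the allowed maximum as light drops."""
    darkness = max(0.0, min(1.0, (10.0 - scene_lux) / 10.0))
    low = HANDHELD_RANGE[0]
    high = STATIONARY_MAX if is_stationary(gyro_samples) else HANDHELD_RANGE[1]
    return low + (high - low) * darkness
```

The stillness check is what unlocks the longer ceiling: a handheld phone is capped at 3 seconds no matter how dark the scene is, because longer handheld exposures would blur.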

The result is naturally bright images that retain a fantastic level of detail while still feeling like they have natural color that is connected to the original subject matter.

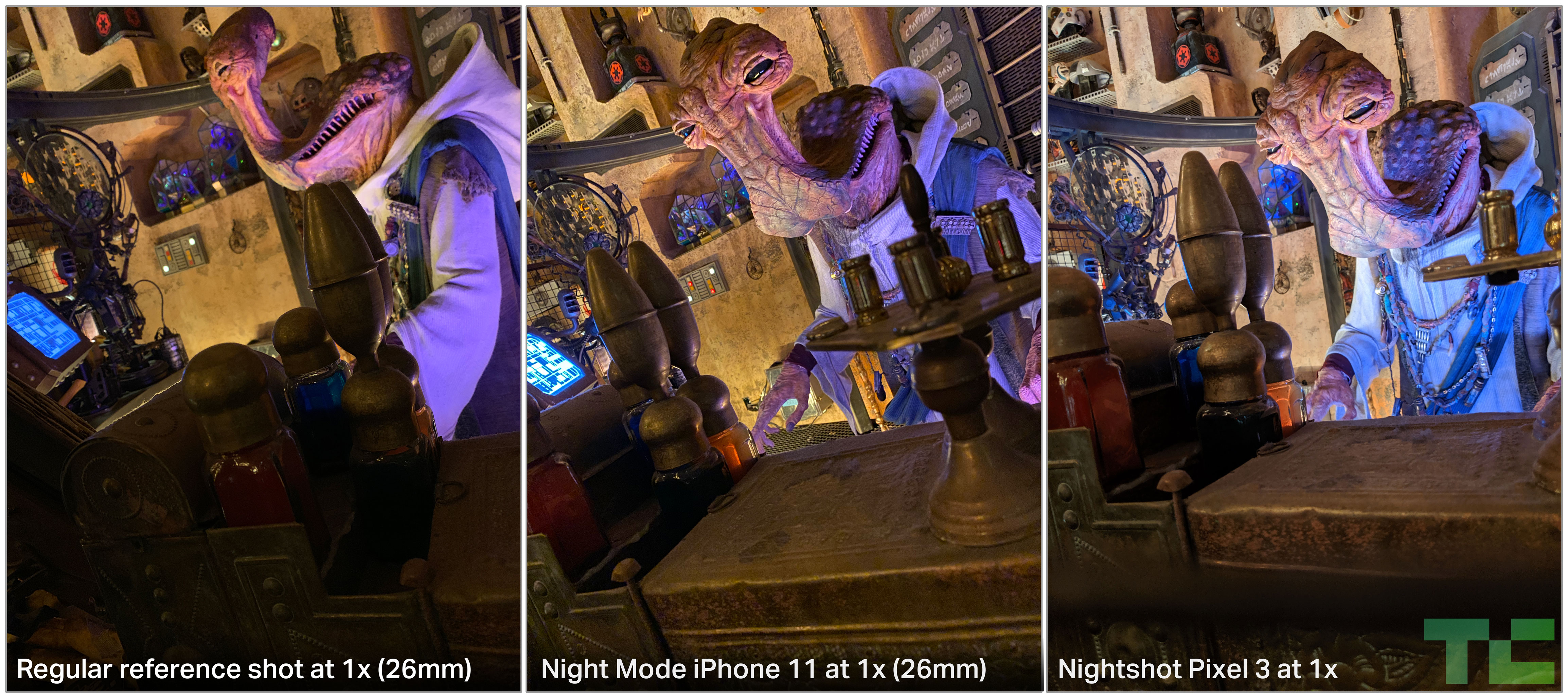

Back when the Pixel 3 shipped Night Sight I noted that choosing a gain-based night mode had consequences, and that Apple likely could ship something based on pure amperage but that it had consistently made choices to do otherwise and would likely do so for whatever it shipped. People really hated this idea, but it holds up.

https://twitter.com/panzer/status/1062931283804794881

Though the Galaxy S10+ has a great night mode as well, the Pixel 3 was the pioneer here and still jumps to mind when judging night shots. The choices Google has made here are much more in the realm of “everything brighter.” If you love it, you love it, and that’s fine. But it is absolutely not approaching this from a place of restraint.

Here are some examples of the iPhone 11 Pro up against images from the Pixel 3. As you can see, both do solid work brightening the image, but the Pixel 3 is colder, flatter and more evenly brightened. The colors are not representative at all.


In addition, whatever juice Google is using to get these images out of a single camera and sensor, it suffers enormously on a detail level. You can see the differences here in the rock work and towers. It’s definitely better than having a dark image, but it’s clear that the iPhone 11 Pro is a jump forward.

The Pixel 4 is around the corner, of course, and I can’t wait to see what improvements Google comes up with. We are truly in a golden age for taking pictures of dark shit with phone cameras.

Of note, the flash is now 36% brighter than on the iPhone XS, which is a nice fallback for moving subjects.

Tidbits

Auto crop

The iPhone 11 will, by default, auto crop subjects back into videos shot at 1x or 2x. If you’re chasing your kid and his head goes out of frame, you could see an Auto button on the one-up review screen after a bit of processing. Tapping it will reframe your video automatically. Currently this only works with the QuickTake function, directly from the iPhone’s lock screen. It can be toggled off.

You can toggle on auto cropping for photos in the Camera settings menu if you wish; it is off by default. This has a very similar effect: it uses image analysis to see whether it has image data it can use to re-center your subject.
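The re-centering idea boils down to sliding an output-sized window over a larger capture so the subject sits in the middle, without ever asking for pixels that weren’t captured. Here is a minimal sketch under hypothetical assumptions: the wider camera has captured more of the scene than the visible frame, a subject bounding box has already been found by some detector, and the `Rect` type and function names are illustrative rather than anything Apple exposes.

```python
"""Toy sketch of re-centering a subject using extra captured image data.

Assumptions (hypothetical, not Apple's implementation): the wider camera
captured more of the scene than the visible frame, and a subject bounding
box has already been found by some detector.
"""
from dataclasses import dataclass


@dataclass
class Rect:
    x: float
    y: float
    width: float
    height: float


def clamp(value: float, low: float, high: float) -> float:
    return max(low, min(high, value))


def recenter_crop(capture: Rect, out_w: float, out_h: float, subject: Rect) -> Rect:
    """Center an out_w x out_h window on the subject, kept inside the capture."""
    if out_w > capture.width or out_h > capture.height:
        raise ValueError("output frame must fit inside the captured frame")
    center_x = subject.x + subject.width / 2
    center_y = subject.y + subject.height / 2
    # Clamp so the crop never asks for pixels the wider camera didn't capture.
    x = clamp(center_x - out_w / 2, capture.x, capture.x + capture.width - out_w)
    y = clamp(center_y - out_h / 2, capture.y, capture.y + capture.height - out_h)
    return Rect(x, y, out_w, out_h)
```

When the subject drifts toward an edge, the window slides as far as the captured data allows and then stops, which is why a re-center is only offered when there is enough extra image data to work with.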

Slofies

Yeah, they’re fun; yeah, they work. They’re going to be popular for folks with long hair.

U1

Apple has included a U1 chip in the iPhone 11. I can’t test it, but it’s interesting as hell. It’s probably best to hold off on discussing it extensively for a bit, as the U1’s first iPhone functionality will be a directional…AirDrop feature? This is definitely one of those things where future purposes (a Tile-like locator, perhaps) were delayed for some reason and a side project of the AirDrop team got elevated to first ship. Interestingly, Apple mentioned, purely as an example, that this feature could be used to start car ignitions, given the appropriate manufacturer support.

If this sounds familiar, then you’ve probably read anything I’ve written over the last several years. It’s inevitable that iPhones and Apple Watches begin to take on functionality like this — it’s just a matter of how to do it precisely and safely. The U1 has a lot to do with location on a micro-level. It’s not broad, network-based or GPS-based location, it’s precise location and orientation. That opens up a bunch of interesting possibilities.

About that Pro

And then there was the name. iPhone 11 Pro. When I worked at a camera shop, I learned the power of the word “pro.” For some people it was an aphrodisiac, for others, a turn-off. And for others, it was simply a necessity.

Is this the pro model? Oh I’m not a pro. Oooh, this is the pro!

We used it as a sales tool, for sure. But every so often it was also necessary to use it to help prevent people from over-buying or under-buying for their needs.

In the film days, one of the worst things you could ever shoot as a pro-am photographer was gym sports. It was fast action, inside (where it’s comparatively dim) and at a distance from court-side. There was no cheap way to do it. No cranking the ISO to 64,000 and letting your camera’s computer clean it up. You had to get expensive glass, an expensive camera body to operate that glass and an expensive support like a monopod. You also had to not be a dumbass (this was the most expensive part).

Amateurs always balked at the barrier to entry for shooting in these kinds of scenarios. But the real pros knew that for every extra dollar they spent on the good stuff, they’d make it up tenfold in profits because they could deliver product no parent with a point and shoot could hope to replicate.

However, the vast majority of people who walked into the shop weren’t shooting hockey or wrestling. They were taking family photos, outdoor pics and a few sunsets.

Which brings us to what the term Pro means now: Pro is about edge cases.

It’s not about the 80% case, it’s about the 20% of people who need or want something more out of their equipment.

For this reason, the iPhone 11 is going to sell really well. And it should, because it’s great. It has the best new lens, an ultra-wide that takes great family photos and landscape shots. It has nearly every software feature of the iPhone 11 Pro. But it doesn’t have the best screen and it doesn’t have telephoto. For people who want to address edge cases (the best video and photo options, a better dark mode experience, a brighter screen), the iPhone 11 Pro is there. For everyone else, there’s still fiscal 2020’s best-selling iPhone.
