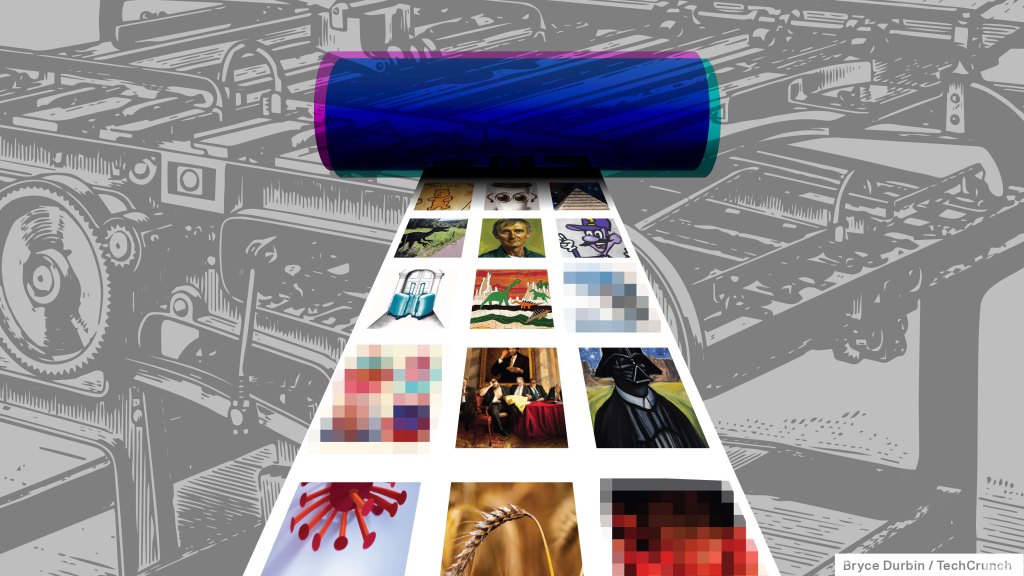

Image-generating AI models like DALL-E 2 and Stable Diffusion can — and do — replicate aspects of images from their training data, researchers show in a new study, raising concerns as these services enter wide commercial use.

Co-authored by scientists at the University of Maryland and New York University, the research identifies cases where image-generating models, including Stable Diffusion, “copy” from the public internet data — including copyrighted images — on which they were trained.

To be clear, the study hasn’t been peer reviewed yet. A researcher in the field, who asked not to be identified by name, shared high-level thoughts with TechCrunch via email.

“Even though diffusion models such as Stable Diffusion produce beautiful images, and often ones that appear highly original and custom tailored to a particular text prompt, we show that these images may actually be copied from their training data, either wholesale or by copying only parts of training images,” the researcher said. “Companies generating data with diffusion models may need to reconsider wherever intellectual property laws are concerned. It is virtually impossible to verify that any particular image generated by Stable Diffusion is novel and not stolen from the training set.”

Images from noise

State-of-the-art image-generating systems like Stable Diffusion are what’s known as “diffusion” models. Diffusion models learn to create images from text prompts (e.g., “a sketch of a bird perched on a windowsill”) as they work their way through massive training data sets. The models — trained to “re-create” images as opposed to drawing them from scratch — start with pure noise and refine an image over time to make it incrementally closer to the text prompt.

It’s not very intuitive tech. But it’s exceptionally good at generating artwork in virtually any style, including photorealistic art. Indeed, diffusion has enabled a host of attention-grabbing applications, from synthetic avatars in Lensa to art tools in Canva. DeviantArt recently released a Stable Diffusion–powered app for creating custom artwork, while Microsoft is tapping DALL-E 2 to power a generative art feature coming to Microsoft Edge.

To be clear, it wasn’t a mystery that diffusion models replicate elements of training images, which are usually scraped indiscriminately from the web. Character designers like Hollie Mengert and Greg Rutkowski, whose classical painting styles and fantasy landscapes have become one of the most commonly used prompts in Stable Diffusion, have decried what they see as poor AI imitations that are nevertheless tied to their names.

But it’s been difficult to empirically measure how often copying occurs, given diffusion systems are trained on upward of billions of images that come from a range of different sources.

To study Stable Diffusion, the researchers’ approach was to randomly sample 9,000 images from a data set called LAION-Aesthetics — one of the image sets used to train Stable Diffusion — and the images’ corresponding captions. LAION-Aesthetics contains images paired with text captions, including images of copyrighted characters (e.g., Luke Skywalker and Batman), images from IP-protected sources such as iStock, and art from living artists such as Phil Koch and Steve Henderson.

The researchers fed the captions to Stable Diffusion to have the system create new images. They then wrote new captions for each, attempting to have Stable Diffusion replicate the synthetic images. After comparing using an automated similarity-spotting tool, the two sets of generated images — the set created from the LAION-Aesthetics captions and the set from the researchers’ prompts — the researchers say they found a “significant amount of copying” by Stable Diffusion across the results, including backgrounds and objects recycled from the training set.

One prompt — “Canvas Wall Art Print” — consistently yielded images showing a particular sofa, a comparatively mundane example of the way diffusion models associate semantic concepts with images. Others containing the words “painting” and “wave” generated images with waves resembling those in the painting “The Great Wave off Kanagawa” by Katsushika Hokusai.

Across all their experiments, Stable Diffusion “copied” from the training data set roughly 1.88% of the time, the researchers say. That might not sound like much, but considering the reach of diffusion systems today — Stable Diffusion had created over 170 million images as of October, according to one ballpark estimate — it’s tough to ignore.

“Artists and content creators should absolutely be alarmed that others may be profiting off their content without consent,” the researcher said.

Implications

In the study, the co-authors note that none of the Stable Diffusion generations matched their respective LAION-Aesthetics source image and that not all models they tested were equally prone to copying. How often a model copied depended on several factors, including the size of the training data set; smaller sets tended to lead to more copying than larger sets.

One system the researchers probed, a diffusion model trained on the open source ImageNet data set, showed “no significant copying in any of the generations,” they wrote.

The co-authors also advised against excessive extrapolation from the study’s findings. Constrained by the cost of compute, they were only able to sample a small portion of Stable Diffusion’s full training set in their experiments.

Still, they say that the results should prompt companies to reconsider the process of assembling data sets and training models on them. Vendors behind systems such as Stable Diffusion have long claimed that fair use — the doctrine in U.S. law that permits the use of copyrighted material without first having to obtain permission from the rightsholder — protects them in the event that their models were trained on licensed content. But it’s an untested theory.

“Right now, the data is curated blindly, and the data sets are so large that human screening is infeasible,” the researcher said. “Diffusion models are amazing and powerful, and have showcased such impressive results that we cannot jettison them, but we should think about how to keep their performance without compromising privacy.”

For the businesses using diffusion models to power their apps and services, the research might give pause. In a previous interview with TechCrunch, Bradley J. Hulbert, a founding partner at law firm MBHB and an expert in IP law, said he believes that it’s unlikely a judge will see the copies of copyrighted works in AI-generated art as fair use — at least in the case of commercial systems like DALL-E 2. Getty Images, motivated out of those same concerns, has banned AI-generated artwork from its platform.

The issue will soon play out in the courts. In November, a software developer filed a class action lawsuit against Microsoft, its subsidiary GitHub and business partner OpenAI for allegedly violating copyright law with Copilot, GitHub’s AI-powered, code-generating service. The suit hinges on the fact that Copilot — which was trained on millions of examples of code from the internet — regurgitates sections of licensed code without providing credit.

Beyond the legal ramifications, there’s reason to fear that prompts could reveal, either directly or indirectly, some of the more sensitive data embedded in the image training data sets. As a recent Ars Technica report revealed, private medical records — as many as thousands — are among the photos hidden within Stable Diffusion’s set.

The co-authors propose a solution (not pioneered by them) in the form of a technique called differentially private training, which would “desensitize” diffusion models to the data used to train them — preserving the privacy of the original data in the process. Differentially private training usually harms performance, but that might be the price to pay to protect privacy and intellectual property moving forward if other methods fail, the researchers say.

“Once the model has memorized data, it’s very difficult to verify that a generated image is original,” the researcher said. “I think content creators are becoming aware of this risk.”

Comment