Connie Qian

Research shows that by 2026, over 80% of enterprises will be leveraging generative AI models, APIs, or applications, up from less than 5% today.

This rapid adoption raises new considerations regarding cybersecurity, ethics, privacy, and risk management. Among companies using generative AI today, only 38% mitigate cybersecurity risks, and just 32% work to address model inaccuracy.

My conversations with security practitioners and entrepreneurs have concentrated on three key factors:

- Enterprise generative AI adoption brings additional complexities to security challenges, such as overprivileged access. For instance, while conventional data loss prevention tools effectively monitor and control data flows into AI applications, they often fall short with unstructured data and more nuanced factors such as ethical rules or biased content within prompts.

- Market demand for various GenAI security products is closely tied to the trade-off between ROI potential and inherent security vulnerabilities of the underlying use cases for which the applications are employed. This balance between opportunity and risk continues to evolve based on the ongoing development of AI infrastructure standards and the regulatory landscape.

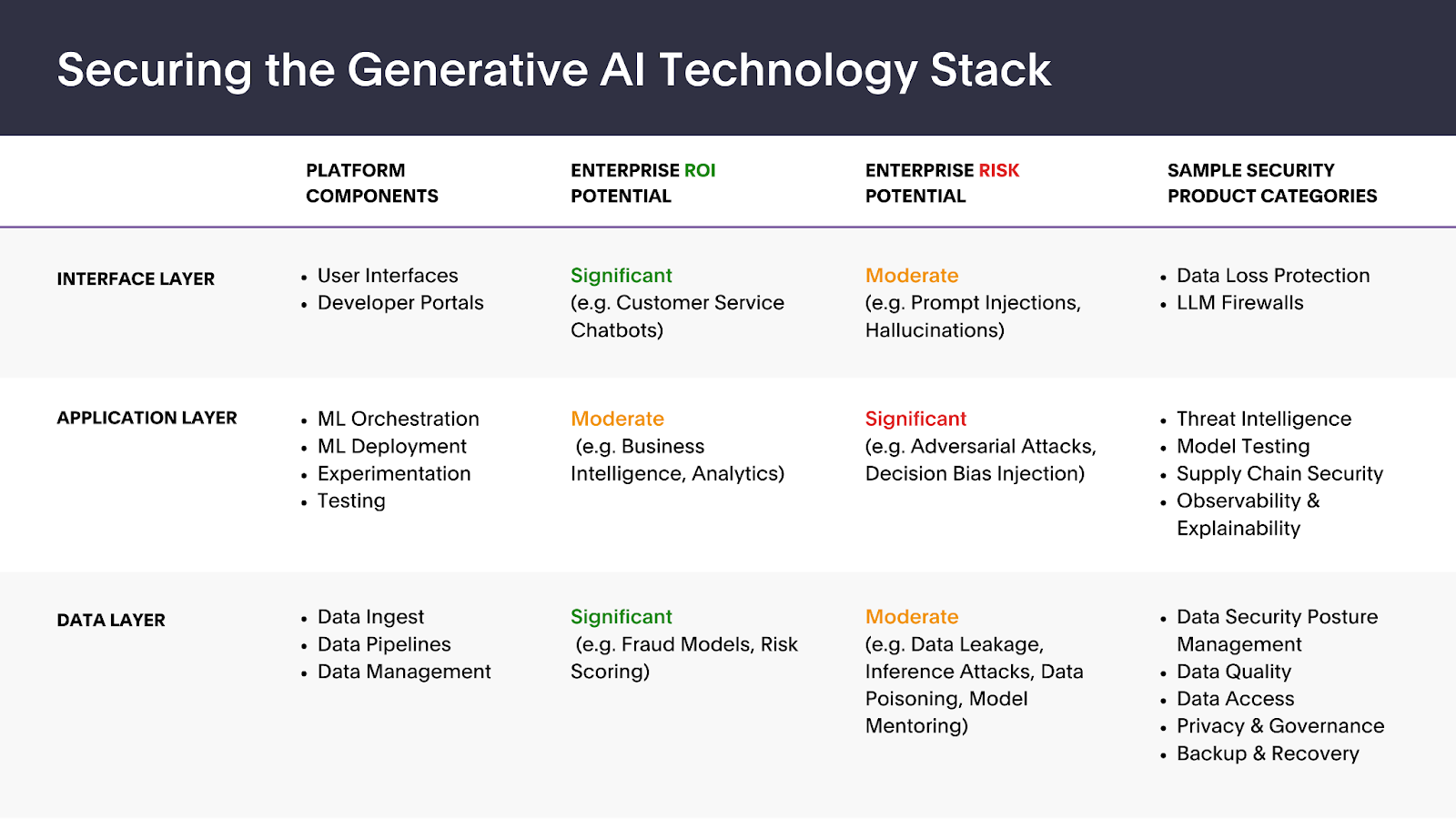

- Much like traditional software, generative AI must be secured across all architecture levels, particularly the core interface, application, and data layers. Below is a snapshot of various security product categories within the technology stack, highlighting areas where security leaders perceive significant ROI and risk potential.

Interface layer: Balancing usability with security

Businesses see immense potential in leveraging customer-facing chatbots, particularly customized models trained on industry and company-specific data. The user interface is susceptible to prompt injections, a variant of injection attacks aimed at manipulating the model’s response or behavior.

In addition, chief information security officers (CISOs) and security leaders are increasingly under pressure to enable GenAI applications within their organizations. While the consumerization of the enterprise has been an ongoing trend, the rapid and widespread adoption of technologies like ChatGPT has sparked an unprecedented, employee-led drive for their use in the workplace.

Widespread adoption of GenAI chatbots will prioritize the ability to accurately and quickly intercept, review, and validate inputs and corresponding outputs at scale without diminishing user experience. Existing data security tooling often relies on preset rules, resulting in false positives. Tools like Protect AI’s Rebuff and Harmonic Security leverage AI models to dynamically determine whether or not the data passing through a GenAI application is sensitive.

Due to the inherently non-deterministic nature of GenAI tools, a security vendor would need to understand the model’s expected behavior and tailor its response based on the type of data it seeks to protect, such as personal identifiable information (PII) or intellectual property. These can be highly variable by use case as GenAI applications are often specialized for particular industries, such as finance, transportation, and healthcare.

Like the network security market, this segment could eventually support multiple vendors. However, in this area of significant opportunity, I expect to see a competitive rush to establish brand recognition and differentiation among new entrants initially.

Application layer: An evolving enterprise landscape

Generative AI processes are predicated on sophisticated input and output dynamics. Yet they also grapple with threats to model integrity, including operational adversarial attacks, decision bias, and the challenge of tracing decision-making processes. Open source models benefit from collaboration and transparency but can be even more susceptible to model evaluation and explainability challenges.

While security leaders see substantial potential for investment in validating the safety of ML models and related software, the application layer still faces uncertainty. Since enterprise AI infrastructure is relatively less mature outside established technology firms, ML teams rely primarily on their existing tools and workflows, such as Amazon SageMaker, to test for misalignment and other critical functions today.

Over the longer term, the application layer could be the foundation for a stand-alone AI security platform, particularly as the complexity of model pipelines and multimodel inference increase the attack surface. Companies like HiddenLayer provide detection and response capabilities for open source ML models and related software. Others, like Calypso AI, have developed a testing framework to stress-test ML models for robustness and accuracy.

Technology can help ensure models are fine-tuned and trained within a controlled framework, but regulation will likely play a role in shaping this landscape. Proprietary models in algorithmic trading became extensively regulated after the 2007–2008 financial crisis. While generative AI applications present different functions and associated risks, their wide-ranging implications for ethical considerations, misinformation, privacy, and intellectual property rights are drawing regulatory scrutiny. Early initiatives by governing bodies include the European Union’s AI Act and the Biden administration’s Executive Order on AI.

Data layer: Building a secure foundation

The data layer is the foundation for training, testing, and operating ML models. Proprietary data is regarded as the core asset of generative AI companies, not just the models, despite the impressive advancements in foundational LLMs over the past year.

Generative AI applications are vulnerable to threats like data poisoning, both intentional and unintentional, and data leakage, mainly through vector databases and plug-ins linked to third-party AI models. Despite some high-profile events around data poisoning and leakage, security leaders I’ve spoken with didn’t identify the data layer as a near-term risk area compared to the interface and application layers. Instead, they often compared inputting data into GenAI applications to standard SaaS applications, similar to searching in Google or saving files to Dropbox.

This may change as early research suggests that data poisoning attacks may be easier to execute than previously thought, requiring less than 100 high-potency samples rather than millions of data points.

For now, more immediate concerns around data were closer to the interface layer, particularly around the capabilities of tools like Microsoft Copilot to index and retrieve data. Although such tools respect existing data access restrictions, their search functionalities complicate the management of user privileges and excessive access.

Integrating generative AI adds another layer of complexity, making it challenging to trace data back to its origins. Solutions like data security posture management can aid in data discovery, classification, and access control. However, it requires considerable effort from security and IT teams to ensure the appropriate technology, policies, and processes are in place.

Ensuring data quality and privacy will raise significant new challenges in an AI-first world due to the extensive data required for model training. Synthetic data and anonymization such as Gretel AI, while applicable broadly for data analytics, can help prevent scenarios of unintentional data poisoning through inaccurate data collection. Meanwhile, differential privacy vendors like Sarus can help restrict sensitive information during data analysis and prevent entire data science teams from accessing production environments, thereby mitigating the risk of data breaches.

The road ahead for generative AI security

As organizations increasingly rely on generative AI capabilities, they will need AI security platforms to be successful. This market opportunity is ripe for new entrants, especially as the AI infrastructure and regulatory landscape evolves. I’m eager to meet the security and infrastructure startups enabling this next phase of the AI revolution — ensuring enterprises can safely and securely innovate and grow.

Comment