In March 2016, DeepMind’s AlphaGo beat Lee Sedol, who at the time was the best human Go player in the world. It represented one of those defining technological moments like IBM’s Deep Blue beating chess champion Garry Kasparov, or even IBM Watson beating the world’s greatest Jeopardy! champions in 2011.

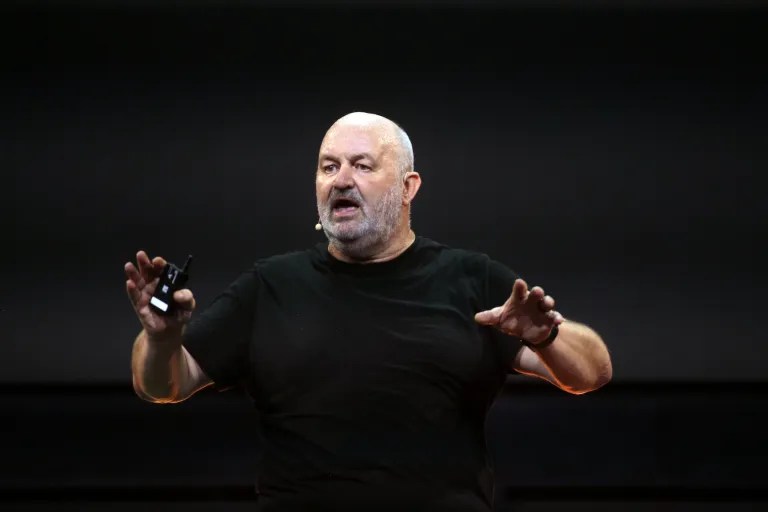

Yet these victories, as mind-blowing as they seemed to be, were more about training algorithms and using brute-force computational strength than any real intelligence. Former MIT robotics professor Rodney Brooks, who was one of the founders of iRobot and later Rethink Robotics, reminded us at the TechCrunch Robotics Session at MIT last week that training an algorithm to play a difficult strategy game isn’t intelligence, at least as we think about it with humans.

He explained that as strong as AlphaGo was at its given task, it couldn’t do anything else but play Go on a standard 19 x 19 board. He recounted that when he spoke with the DeepMind team in London recently, he asked what would have happened if the size of the board had been changed to 29 x 29. The AlphaGo team admitted to him that had there been even a slight change to the size of the board, “we would have been dead.”

“I think people see how well [an algorithm] performs at one task and they think it can do all the things around that, and it can’t,” Brooks explained.

Brute-force intelligence

As Kasparov pointed out in an interview with Devin Coldewey at TechCrunch Disrupt in May, it’s one thing to design a computer to play chess at grandmaster level, but it’s another to call it intelligence in the pure sense. It’s simply throwing computing power at a problem and letting a machine do what it does best.

“In chess, machines dominate the game because of the brute force of calculation and they [could] crunch chess once the databases got big enough and hardware got fast enough and algorithms got smart enough, but there are still many things that humans understand. Machines don’t have understanding. They don’t recognize strategical patterns. Machines don’t have purpose,” Kasparov explained.

Gill Pratt, CEO of the Toyota Research Institute, a group inside Toyota working on artificial intelligence projects including household robots and autonomous cars, said in an interview at the TechCrunch Robotics Session that the fear we are hearing from a wide range of people, including Elon Musk, who most recently called AI “an existential threat to humanity,” could stem from dystopian science-fiction depictions of artificial intelligence run amok.

“The deep learning systems we have, which is what sort of spurred all this stuff, are remarkable in how well we do given the particular tasks that we give them, but they are actually quite narrow and brittle in their scope. So I think it’s important to keep in context how good these systems are, and actually how bad they are too, and how long we have to go until these systems actually pose that kind of a threat [that Elon Musk and others talk about].”

Brooks said in his TechCrunch Sessions: Robotics talk that there is a tendency for us to assume that if the algorithm can do x, it must be as smart as humans. “Here’s the reason that people — including Elon — make this mistake. When we see a person performing a task very well, we understand the competence [involved]. And I think they apply the same model to machine learning,” he said.

Facebook’s Mark Zuckerberg also criticized Musk’s comments, calling them “pretty irresponsible,” in a Facebook Live broadcast on Sunday. Zuckerberg believes AI will ultimately improve our lives. Musk shot back later that Zuckerberg had a “limited understanding” of AI. (And on and on it goes.)

It’s worth noting, however, that Musk isn’t alone in this thinking. Physicist Stephen Hawking and philosopher Nick Bostrom have also expressed reservations about the potential impact of AI on humankind, but chances are they are talking about a more generalized artificial intelligence being studied in labs at the likes of Facebook AI Research, DeepMind and Maluuba, rather than the narrower AI we are seeing today.

Brooks pointed out that many of these detractors don’t actually work in AI, and suggested they don’t understand just how difficult it is to solve each problem. “There are quite a few people out there who say that AI is an existential threat — Stephen Hawking, [Martin Rees], the Astronomer Royal of Great Britain…a few other people — and they share a common thread in that they don’t work in AI themselves.” Brooks went on to say, “For those of us who do work in AI, we understand how hard it is to get anything to actually work through product level.”

AI could be a misnomer

Part of the problem stems from the fact that we are calling it “artificial intelligence.” It is not really like human intelligence at all, which Merriam-Webster defines as “the ability to learn or understand or to deal with new or trying situations.”

Pascal Kaufmann, founder at Starmind, a startup that wants to help companies use collective human intelligence to find solutions to business problems, has been studying neuroscience for the past 15 years. He says the human brain and the computer operate differently and it’s a mistake to compare the two. “The analogy that the brain is like a computer is a dangerous one, and blocks the progress of AI,” he says.

Further, Kaufmann believes we won’t advance our understanding of human intelligence if we think of it in technological terms. “It is a misconception that [algorithms] work like a human brain. People fall in love with algorithms and think that you can describe the brain with algorithms and I think that’s wrong,” he said.

When things go wrong

There are in fact many cases of AI algorithms not being quite as smart as we might think. One infamous example of AI out of control was the Microsoft Tay chatbot, created by the Microsoft AI team last year. It took less than a day for the bot to learn to be racist. Experts say that it could happen to any AI system when bad examples are presented to it. In the case of Tay, it was manipulated by racist and other offensive language, and since it had been taught to “learn” and mirror that behavior, it soon ran out of the researchers’ control.

A widely reported study conducted by researchers at Cornell University and the University of Wyoming found that it was fairly easy to fool algorithms that had been trained to identify pictures. The researchers found that when presented with what looked like “scrambled nonsense” to humans, algorithms would identify it as an everyday object like “a school bus.”

What’s not well understood, according to an MIT Technology Review article on the same research project, is why the algorithm can be fooled in the way the researchers found. What we know is that humans have learned to recognize whether something is a picture or nonsense, and algorithms analyzing pixels can apparently be subject to some manipulation.

Self-driving cars are even more complicated because there are things that humans understand when approaching certain situations that would be difficult to teach to a machine. In a long blog post on autonomous cars that Rodney Brooks wrote in January, he brings up a number of such situations, including how an autonomous car might approach a stop sign at a crosswalk in a city neighborhood with an adult and child standing at the corner chatting.

The algorithm would probably be tuned to wait for the pedestrians to cross, but what if they had no intention of crossing because they were waiting for a school bus? A human driver could signal to the pedestrians to go, and they in turn could wave the car on, but a driverless car could potentially be stuck there endlessly waiting for the pair to cross because it has no understanding of these uniquely human signals, he wrote.

Each of these examples shows just how far we have to go with artificial intelligence algorithms. Should researchers ever become more successful at developing generalized AI, this could change, but for now there are things that humans can do easily that are much more difficult to teach an algorithm, precisely because we are not limited in our learning to a set of defined tasks.
