Recent reports that have both Peter Jackson and James Cameron shooting films at 48 frames per second (fps) have attracted a lot of commentary, and as this is a blog that covers trends and bleeding-edge tech, it seems like a synthesis of this discussion is warranted.

Framerate standards sound like a rather dry topic to begin with, but it’s amazing what difference is created by even a minor shift on such supposedly technical grounds. Understanding why framerates are the way they are, and how they are changing, is fundamental to modern media production, and really is a major part of a number of multi-billion-dollar businesses. It’s powerful information, and more importantly, it’s interesting. Let’s take a look at the psychology and history that have created a worldwide standard for moving images, and examine why this standard is under revision.

I should preface all this by saying that frame standards are best experienced, not analyzed on paper. Very few people have actually experienced the types of media under discussion, just as few people had really experienced a true 3D movie until they saw Avatar. The proof of the pudding, as they say, is in the taste, and nobody has tasted the pudding yet. The pudding has only just been announced. That said, there is a lot of history and a few misconceptions about why things are the way they are that should be known by anyone who wants to hold an informed opinion.

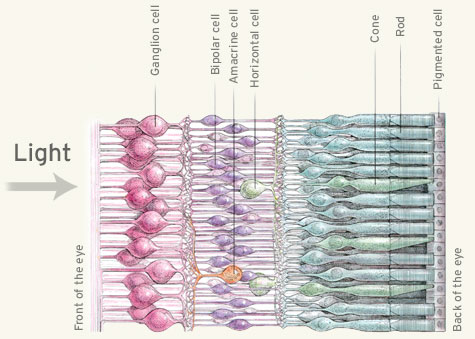

First, as with 3D technology, a brief course in neuroanatomy is required:

How we see

Not enough is known about how we see to simply say “here, this is the way we should make movies,” if that were even possible. But a little knowledge about the visual system helps to understand why some things look the way they do.

Light that hits your retina is collected and collated by a number of cell classes (whose technical names I forgo for the sake of clarity), some pulling data from various groups of rods and cones, getting aggregate readings, individual readings, and so on. These mediator cells (like many in the nervous system) actually act as a sort of analog-to-digital converter, sending signals at a rate corresponding to how much light their selected rods and cones are receiving. It’s very, very complicated and I’m really simplifying it, but the end result is that these cells tend to have a maximum regular “firing rate” of around 30 per second, sometimes more, sometimes less. This seems to be the source for the common misconception that we see at 30 frames per second, or that anything beyond that is imperceptible. In fact, people can perceive small numbers of photons and can identify images shown for only a few milliseconds.

What is conveniently ignored is that while individual cells may fire at that rate, they are not linked to each other via some light-speed network that tells them all to fire exactly in sync. There’s some synchronization, yes, but it happens downstream, as these raw signals from the eye are made into something intelligible to the higher-level visual system. So while the 30fps mark is an important one, it’s not that important; it isn’t as if everything suddenly becomes perfectly smooth and life-like at 35 or 40fps. Yet there’s a limit to how many “frames” you can perceive; try flapping your hand in front of your face. Why is it blurry if there are billions of photons bouncing onto a progressive and constant polling of high-frequency photodetectors?

Later in the visual system flow, there are some very high-level functions that affect perception. Some optical illusions have been made that show how your mind “fills in the gap” when a piece of an image is missing, and how cartoons animated at extremely low framerates still appear to generate natural motion despite having huge gaps between sequential positions of objects. This process of predictive perception is your brain wanting to make a complete and consistent image out of incomplete and contradictory data. It’s a skill you learned throughout your babyhood and youth, and it’s going on all the time.

The set of rules for predictive perception is as extensive and varied as are our personalities. The catalog of shortcuts and refinements the brain makes to visual data would be nearly impossible to make. But I have digressed too far in this direction; the point here is that there are rules to perception, but they are not hard and fast, nor are they authoritatively listed, whatever some may say.

History

The early days of cinema were a crazy world of inconsistent standards, analog and hand-cranked exposures, and to top things off, both audience and filmmakers were completely new to the art. Early silent films were cranked as consistently as possible, and projected at 16fps. This was established as the absolute floor framerate under which people would be bothered by the flicker when the shutter interrupts the light source. The question of persistence of vision (mentioned above) was answered around 1912, and though the myth persists today, early film pioneers took note. Edison actually recommended 46 frames per second, saying “anything less will strain the eye.” Of course, his stock and exposures were inadequate for this standard, and slower frame rates were necessitated by minimum exposure values.

Eventually a happy medium of 24 frames per second was established as larger studios began standardizing and patenting film techniques and technologies: it was visually appealing and compatible with existing two-bladed 48Hz projectors. It might have been 23, it might have been 25 (more on 25 later); it certainly could not have been 16 or 40. So 24 was chosen neither arbitrarily nor scientifically.

It’s also worth noting that the way film was (and is) displayed is not the way we see things on computer screens and LCD TVs now. The image on screen would be shown for a set time (say a 48th of a second) then the shutter wheel would block the image, leaving the screen dark for an equal time. There’s variation here depending on blade count and so on, but the end result is that there is an image, then darkness, then a new image. Today, images follow one another instantly, since there is no need for a shutter to hide the movement of film. This is important.
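To put rough numbers on that shutter arithmetic, here is a minimal Python sketch. It assumes an idealized shutter whose light and dark intervals are exactly equal, as in the example above; the function name `flash_timing` is my own invention, and real projectors vary.

```python
from fractions import Fraction

def flash_timing(fps, blades):
    """Return (flashes per second, seconds lit per flash, seconds dark per gap).

    Assumes an idealized shutter: every flash is followed by an equally
    long period of darkness.
    """
    flashes_per_second = fps * blades
    # One flash plus the dark gap after it lasts 1 / flashes_per_second.
    period = Fraction(1, flashes_per_second)
    return flashes_per_second, period / 2, period / 2

print(*flash_timing(24, 1))  # 24 1/48 1/48
print(*flash_timing(24, 2))  # 48 1/96 1/96
```

With a single blade, 24fps gives the "48th of a second" of image and of darkness described above. Add a second blade and each frame is flashed twice, which is where the 48Hz figure comes from.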

Television threw a wrench into the proceedings. The display technology at the time prohibited displaying an entire frame all at once, so a compromise was made between film’s 24fps, the fields per second cathode ray tubes were capable of at the time, and the 60Hz alternating current frequency used by the US, producing the familiar interlaced image still seen on many analog TVs today. The effect (simulated below) can still be seen today; interlaced recording is still an option, and indeed the default, on many cameras.

The UK and other countries with a 50Hz AC standard adopted a slightly slower field refresh rate with a slightly higher resolution. This is the beginning of the PAL/NTSC framerate conflict that has plagued motion picture production for half a century. I do want to say here that the 25/50 standard was much more logical than our 24/30/60 one then, and it still is now.
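For anyone who has never looked at interlacing up close, the idea can be sketched in a few lines of Python. This is a simplification under my own assumptions: a frame is just a list of scanlines, the field rate is taken to be the nominal mains frequency, and the names `split_fields` and `weave` are hypothetical.

```python
def split_fields(frame):
    """Split a frame (a list of scanlines) into its two interlaced fields."""
    top = frame[0::2]     # scanlines 0, 2, 4, ...
    bottom = frame[1::2]  # scanlines 1, 3, 5, ...
    return top, bottom

def weave(top, bottom):
    """Rebuild a full frame by alternating lines from the two fields."""
    frame = []
    for i in range(len(top) + len(bottom)):
        source = top if i % 2 == 0 else bottom
        frame.append(source[i // 2])
    return frame

frame = ["line0", "line1", "line2", "line3", "line4", "line5"]
top, bottom = split_fields(frame)
assert weave(top, bottom) == frame

for mains_hz in (60, 50):
    fields_per_second = mains_hz               # nominal: one field per AC cycle
    frames_per_second = fields_per_second // 2 # two fields make one full frame
    print(mains_hz, "Hz:", fields_per_second, "fields/s,", frames_per_second, "frames/s")
```

Each field carries only half the lines, so 60 fields per second works out to 30 full frames, and 50 fields to 25.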

I pass over a great period of time during which things mostly stayed the same. A great number of techniques were created for converting analog to digital and back to analog, speeding up or slowing down framerates, deinterlacing, conversion pulldowns, and so on. Although these things are interesting to the video professional, they constitute a sort of dark age from which we are only recently emerging — and this emergence is the reason we are having this discussion.
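To give a flavor of what one of those conversions involves, here is a rough Python sketch of the commonly described 2:3 pulldown cadence used to fit 24 film frames into 60 interlaced fields. It is an illustration only: the function name is hypothetical, frames are just labels, and real equipment also has to deal with field order and the fractional NTSC rates.

```python
from itertools import cycle

def pulldown_2_3(frames):
    """Spread film frames over interlaced fields in a repeating 2, 3 cadence."""
    fields = []
    for frame, repeats in zip(frames, cycle((2, 3))):
        fields.extend([frame] * repeats)
    # Pair consecutive fields into interlaced video frames.
    return [tuple(fields[i:i + 2]) for i in range(0, len(fields) - 1, 2)]

print(pulldown_2_3(["A", "B", "C", "D"]))
# [('A', 'A'), ('B', 'B'), ('B', 'C'), ('C', 'D'), ('D', 'D')]
```

Four film frames become five video frames, two of which are stitched together from different moments in time. That mixing is part of why these conversions are lossy.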

As cinema, TV, and home video migrate to an all-digital, all-progressive frame format, we are ridding ourselves of the hated interlacing (my eternal enemy), of insane microscopic frame differences to allow for analog synchronization (23.976, 29.97, etc., though Jackson is filming at 47.952, not straight 48), and of standards established by the guys who were designing the first light bulbs. It’s a glorious time to be a filmmaker, and it’s only natural that adventurous types like Cameron and Jackson would want to stretch their legs.
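Those odd decimals are less mysterious than they look. The NTSC-derived rates are the round numbers multiplied by 1000/1001, which a short Python sketch can show exactly. The helper name `ntsc_rate` is my own, not anything from a standard.

```python
from fractions import Fraction

# NTSC-derived rates are the nominal rate scaled by 1000/1001.
NTSC_FACTOR = Fraction(1000, 1001)

def ntsc_rate(nominal_fps):
    """Return the exact NTSC-adjusted rate for a nominal frame rate."""
    return nominal_fps * NTSC_FACTOR

for nominal in (24, 30, 48):
    actual = ntsc_rate(nominal)
    print(f"{nominal} -> {actual} = {float(actual):.3f} fps")
```

The first two lines come out as 24000/1001 (23.976) and 30000/1001 (29.970), the familiar "microscopic" offsets.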

There’s still some work to be done standardizing brightness and framerates in digital projectors (there are patents and other nonsense involved), but a world where you can shoot at virtually any framerate and have it displayed flawlessly at the same rate, essentially reproducing exactly what the director and production team produce, is a wonderful thing we should not take for granted. Yet we should also not assume that because things like 24 are old, they are no longer relevant.

Look

Today, moving images are generally shot and viewed at one of several refresh rates: 24p, 25p, 30p, 50i, or 60i. There are more, of course, but these are the end products. Sometimes they’re converted to each other, which is a destructive process that will soon, thankfully, be obsolete.

The trouble is with the notion that higher framerates are necessarily better. We’ve written before about the plague of frame-interpolating 120Hz and 240Hz (and more) HDTVs that give an unreal, slippery look to things. This isn’t an illusion, or, to be precise, it isn’t any more of an “illusion” than anything else you see. What it’s doing is adding information that simply isn’t there.

Let’s look at an example. When Bruce Lee punches that guy in Enter The Dragon, you barely see his fist move. The entire punch takes up maybe five frames of film, and even a short exposure would have trouble capturing it clearly at beginning, halfway, three-quarters there, etc. — plus there would be no guarantee of capturing the exact moment it connected with the guy’s face. Yet with interpolation, a frame must be “created” for all these states.

Less extreme, but still relevant: imagine a lamp pole moving in the background of a panning shot. In film projection, it is here, and then it is black (empty frame), and then it is there. How do you know how it got from here to there? Remember predictive perception? Your mind fills in the blank and you don’t even notice that the pole, on its trip between points A and C, never existed at point B. You fill in the gap so effectively that it isn’t even noticeable. Again, interpolation attempts to fill in this gap — a gap your mind has already filled admirably, and for which (despite sophisticated motion tracking algorithms) there really is very little data. The result is a strange visual effect that is repulsive to the sensitive, though to be sure many people don’t even notice. Of course, people don’t notice lots of things.
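The lamp pole problem is easy to demonstrate with the crudest possible interpolation, a straight blend of the two neighboring frames. To be clear, this is not what TV makers actually ship (they use motion estimation, as noted above); it is a toy Python sketch with made-up helper names, using a single row of pixels, that shows how little the source frames really say about point B.

```python
def blend(frame_a, frame_c, t=0.5):
    """Naive in-between frame: a per-pixel weighted average of two frames."""
    return [(1 - t) * a + t * c for a, c in zip(frame_a, frame_c)]

def row_with_pole(position, width=9):
    """One row of pixels: 1.0 where the pole is, 0.0 everywhere else."""
    return [1.0 if x == position else 0.0 for x in range(width)]

frame_a = row_with_pole(2)  # pole at point A
frame_c = row_with_pole(6)  # pole at point C
print(blend(frame_a, frame_c))
# [0.0, 0.0, 0.5, 0.0, 0.0, 0.0, 0.5, 0.0, 0.0]
```

Instead of one pole at the midpoint, the blend yields two half-strength ghosts at A and C and nothing at B. Smarter algorithms have to guess where the pole went, and that guess is the information that "simply isn’t there."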

But that’s just these trendy TV makers who need another big number to put on their sets. The real debate is when established filmmakers like Cameron and Jackson say they’ll be using 48fps for their next films, Avatar 2 and The Hobbit (Cameron may actually shoot at 60, it’s undecided, but 48 makes more sense). Many seem to have overlooked the fact that these are both 3D films. This isn’t an insignificant detail. There are complications with 3D display methods on existing projectors, using this or that style of glasses, encoding, and so on. A common complaint is strobing or flickering. Judder is another common effect when 24p content isn’t shot or shown properly, especially in 3D. Filming and displaying at 48fps alleviates many of these issues, as long as you have a suitably bright projector. Cameron and Jackson are making a technical decision here, one that enables their films to be shown the way they should be seen.

But what about the artistic decision? This is a touchy subject, and we must be careful not to be sentimental. We don’t shoot on hand cranks any more, for good reason. Is 24 similarly something that needs to be left behind? I personally don’t think so. But is it something that needs to be rigidly adhered to? Again, I don’t think so. Directors, cinematographers, editors, colorists, all have immense artistic latitude in their modification of the raw footage — look at a scene before and after post production if you need any convincing on this point. What is the framerate but one more aspect to tweak? At the same time, no real filmmaker tweaks something just to tweak it.

24 is a look, one which engages the audience by implying movement and allowing the viewer’s brain to interpret it. Would Bruce Lee’s punch look faster if it were filmed and displayed at 60fps? I sincerely doubt it; in fact, I believe it would look slower. The implication of speed and movement is a stronger statement, just as in other media, like writing, where the most literal description may not be the best. Just as five words may tell more than a hundred, as fans of Hemingway have it, five frames of Lee’s blurred fist may not adequately document the punch, but they transmit the punch to the audience more effectively than a higher-fidelity form of capture.

Yet for all this, 24 is not some magical number that cannot be improved, or that’s perfect for every shot or situation. Douglas Trumbull is the name most often invoked by high-framerate evangelists like Roger Ebert. His 65mm, 60fps Showscan format amazed audiences with its unique look in the 80s, yet never caught on. Why? The same reason Edison couldn’t shoot 46fps in 1912. It was too expensive, the film industry didn’t (and couldn’t) support it, and audiences, while finding it novel and compelling, were likely even more put off back then by its totally different look. If you’ve never seen native 48, 50, or 60fps media, it’s worth noting that it’s actually unnerving, and you really can’t say why. Some people say “too smooth,” but isn’t life smooth? It may simply be that our mind is revolting against the impossibility of a “magic window” showing something so lifelike that is clearly not reality. Even people who have worked in cinema for their whole lives find it difficult to express this very experiential and qualitative difference.

The negative reaction to high framerates is also associational. For decades we’ve watched cheaply-produced TV shows shot on video tape or transmitted live at an end framerate of 60i. Flat lighting, bad production in general, and small screens have for our entire lives associated high framerates with low quality. So it’s understandable when objectors to 48 and up say they don’t want their movies looking like soap operas. But if TV had been transmitted at 24p, what would these objectors’ reactions to increased framerates be? Likely they would be lauding the powerful immersive quality of the new format, and writing blog posts consigning 24 to the pit.

We have to cast off this learned sentimentality and embrace advances for themselves, but also avoid reckless neophilia by acknowledging their limitations. High framerates, divested of their soap opera associations, simply provide more visual information, and that makes for a superior representation in some situations — most notably, situations when you want to show an image as close as possible to the object actually being present. Nature documentaries, sports, news reports, home videos, these things will look amazing at 48, 50, 60, or more. Feature films and television as well, as long as the director chooses the framerate for a reason germane to the concept or production — as Cameron and Jackson clearly have.

But “cinematic” isn’t an anachronism, and our love of the “film look” isn’t a case of Filmstockholm Syndrome, if you will. We like it because it looks good — in its right place. And soon, we’ll like 48, 60, and other framerates because they too look good — in their right places. Until we’ve all experienced what these new and powerful changes to visual media have to offer, it’s premature to dismiss or embrace any of them exclusively. I look forward to seeing what these talented and pioneering filmmakers have to offer, but at the same time I want to reserve judgment until the data are in.

Cinema is an experiential medium, yet it is also a process, and no one can argue against improving how it is produced. I believe that the current “advances” in 3D, resolution, and frame rates are simply more tools to be employed by the skilful filmmaker, more latitude for production, more power to capture and display. We’ll know soon; until then, patience is the word. Wait and see.

Update: Peter Jackson has written about his decision to use 48 in this post on Facebook, saying a few of the things I’ve said here but mainly reassuring people that he’s doing this because it looks good to him. He included this photo:
