[This post has been updated to include comments from Adam Kramer, the Facebook employee who coauthored the study, and new information from Cornell indicating that the study was funded internally.]

A recent study conscripted Facebook users as unwitting participants during a weeklong experiment in direct emotional manipulation. The study set out to discover whether the emotional tone of a user’s News Feed content had an impact on their own emotional makeup, measured through the tone of what they posted to the social service after viewing the skewed material.

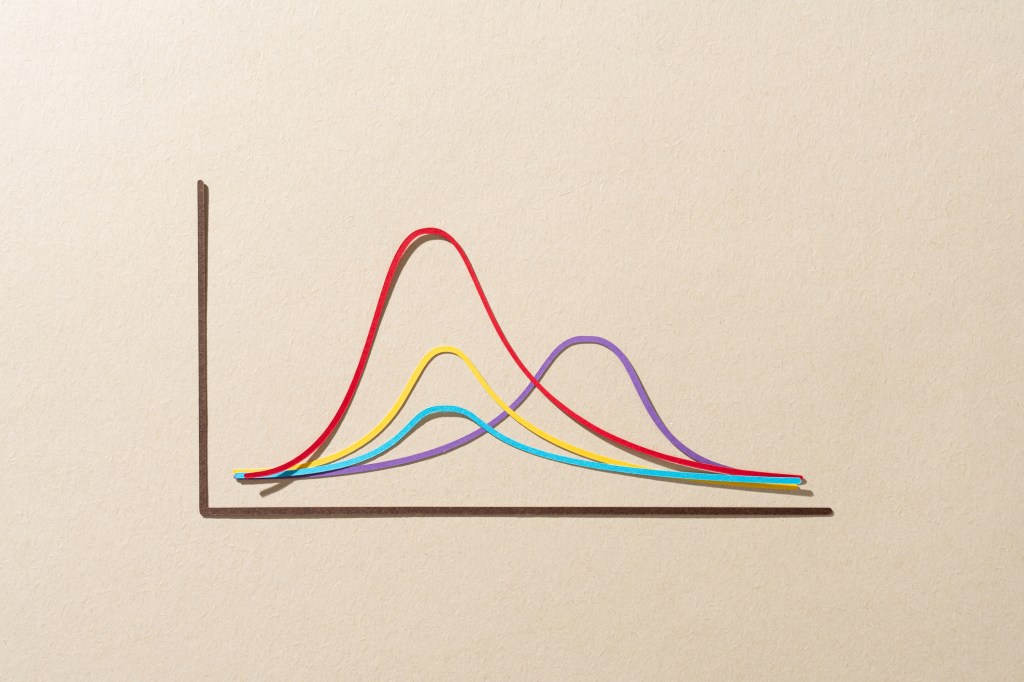

Nearly 700,000 Facebook users were shown either more positive or more negative content. The study found that users who were given more positive news feeds posted more positive things, and users who were given more negative news feeds posted more negative things.

Surprising? Doubtful. Unethical? Yes.

Bear in mind that the impact of the study wasn’t confined to those it directly manipulated. It notes that around 155,000 users from the positive and negative groups each “posted at least one status update during the experimental period.” So, hundreds of thousands of status updates were posted by the negatively induced user group. Those negative posts likely caused more posts of similar ilk.

Contagion, after all, doesn’t end at the doorstep.

We won’t know if the experiment did any more than darken the days of a few hundred thousand users for a week in 2012. But it could have. And that’s enough to make a call on this: Allowing your users to be unwitting test subjects of emotional manipulation is beyond creepy. It’s a damn disrespectful and dangerous choice.

Not everyone is in a good emotional spot. At any given moment, a decent chunk of Facebook’s users are emotionally fragile. We know that because at any given moment, a decent chunk of humanity is emotionally fragile, and Facebook has a massive number of active users. That means that among the negatively influenced were the weak, the vulnerable, and potentially the young. I’ve reached out to Facebook asking if the study excluded users between the ages of 13 and 18, but haven’t yet heard back.

Adding extraneous, unneeded emotional strain to a person of good mental health is an unkindness. Doing so to a person who needs encouragement and support is cruel.

The average Facebook user has something akin to an unwritten social contract with the company: I use your product, and you serve ads against the data I’ve shared. Implicit in that is expected polite behavior, the idea that Facebook won’t abuse your data or your trust. In this case, Facebook did both, using a user’s social graph against them, with intent to cause emotional duress.

We’re all manipulated by corporations. Advertising is among the more blatant examples of it. There’s far more of it out there than we realize. The pervasiveness of the manipulation undoubtedly makes us slightly inured to it. But that doesn’t mean we can’t point out things that are over the line when we are shown what’s going on behind the curtain. If Facebook was willing to allow this experiment, the lead author of which, according to the study itself, is a Facebook employee working on its Core Data Science Team, what else might it allow in the future?

I am not arguing that Facebook has a moral imperative to make news feed content more positive on average. That would render the service intolerable — not all life events are positive, and the ability to commiserate with friends and loved ones digitally is now part of the human experience. And Facebook certainly tweaks its news feed over time for myriad reasons to improve its experience.

That’s all perfectly reasonable. Deliberately looking to skew the emotional makeup of its users, spreading negativity for no purpose other than curiosity, without user assent or practical safeguards, is different. It’s irresponsible.

For more on the topic, read our follow-up on the ethics of the experiment: The Morality Of A/B Testing

Here’s the response from Facebook’s Kramer:

OK so. A lot of people have asked me about my and Jamie and Jeff’s recent study published in PNAS, and I wanted to give a brief public explanation. The reason we did this research is because we care about the emotional impact of Facebook and the people that use our product. We felt that it was important to investigate the common worry that seeing friends post positive content leads to people feeling negative or left out. At the same time, we were concerned that exposure to friends’ negativity might lead people to avoid visiting Facebook. We didn’t clearly state our motivations in the paper.

Regarding methodology, our research sought to investigate the above claim by very minimally deprioritizing a small percentage of content in News Feed (based on whether there was an emotional word in the post) for a group of people (about 0.04% of users, or 1 in 2500) for a short period (one week, in early 2012). Nobody’s posts were “hidden,” they just didn’t show up on some loads of Feed. Those posts were always visible on friends’ timelines, and could have shown up on subsequent News Feed loads. And we found the exact opposite to what was then the conventional wisdom: Seeing a certain kind of emotion (positive) encourages it rather than suppresses it.

And at the end of the day, the actual impact on people in the experiment was the minimal amount to statistically detect it — the result was that people produced an average of one fewer emotional word, per thousand words, over the following week.

The goal of all of our research at Facebook is to learn how to provide a better service. Having written and designed this experiment myself, I can tell you that our goal was never to upset anyone. I can understand why some people have concerns about it, and my coauthors and I are very sorry for the way the paper described the research and any anxiety it caused. In hindsight, the research benefits of the paper may not have justified all of this anxiety.

While we’ve always considered what research we do carefully, we (not just me, several other researchers at Facebook) have been working on improving our internal review practices. The experiment in question was run in early 2012, and we have come a long way since then. Those review practices will also incorporate what we’ve learned from the reaction to this paper.
