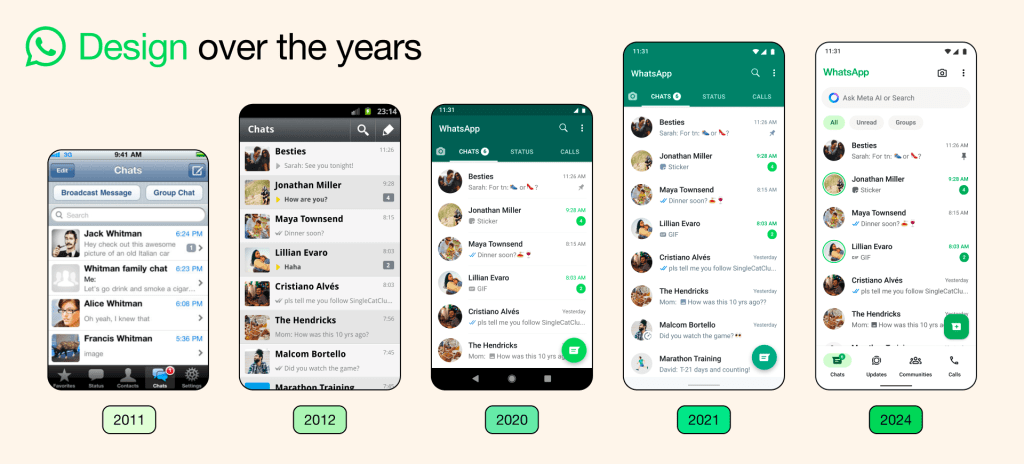

Facebook is a breeding ground for fake news and polarized outrage, accused of corrupting democracy and spurring genocide. Twitter knows it has become a seething battleground of widespread, targeted abuse — but has no solution. YouTube videos are messing with the minds of children and adults alike — so YouTube decided to pass the buck to Wikipedia, without telling them.

All three of those sentences would have seemed nearly unimaginable five years ago. What the hell is going on? Ev Williams says, of the growth of social media: “We laid down fundamental architectures that had assumptions that didn’t account for bad behavior.” What changed? And perhaps the most important question is: have people always been this awful, or have social networks actually made us collectively worse?

I have two somewhat related theories. Let me explain.

The Uncanny Social Valley Theory

“Social media is poison,” a close friend of mine said to me a couple of years ago, and since then more and more of my acquaintances seem to have come around to her point of view, and are abandoning or greatly reducing their time spent on Facebook and/or Twitter.

Why is it poison? Because this technology meant to provoke human connection actually dehumanizes. Not always, of course; not consistently. It remains a wonderful way to keep in contact with distant friends, and to enhance your relationship with, and understanding of, those you regularly see in the flesh. What’s more, there are some people with whom you just ‘click’ online, and real friendships grow. There are people I’ve never met who I’d unhesitatingly trust with the keys to my car and home, because of our interactions on various social networks.

And yet — having stipulated all the good things — a lot of online interactions can and do reduce other people to awful caricatures of themselves. In person we tend to manage a kind of mammalian empathy, a baseline understanding that we’re all just a bunch of overgrown apes with hyperactive amygdalas trying to figure things out as best we can, and that relatively few of us are evil stereotypes. (Though see below.) Online, though, all we see are a few projections of those mammal brains, generally in the form of hastily constructed, low-context text and images … as mediated and amplified by the outrage machines, those timeline algorithms which think that “engagement” is the highest goal to which one can possibly aspire online.

I am reminded of the concept of the Uncanny Valley: “humanoid objects which appear almost, but not exactly, like real human beings elicit uncanny, or strangely familiar, feelings of eeriness and revulsion in observers.” Sometimes you ‘click’ with people online such that they’re fully human to you, even if you’ve never met. Sometimes you see them fairly often in real life, so their online projections are just a new dimension to their existing humanity. But a lot of the time, all you get of them is that projection … which falls squarely into an empathy-free, not-quite-human, uncanny social valley.

And so many of us spend so much time online, checking Twitter, chatting on Facebook, that we’ve all practically built little cottages in the uncanny social valley. Hell, sometimes we spend so much time there that we begin to believe that even people we know in real life are best described as neighbors in that valley … which is how friendships fracture and communities sunder online. A lot of online outrage and fury — the majority, I’d estimate, though not all — is caused not by its targets’ inherent awfulness but by an absence, on both sides, of context, nuance, and above all, empathy and compassion.

The majority. But not all. Because this isn’t just a story of lack of compassion. This is also a story of truly, genuinely awful people doing truly, genuinely awful things. That aspect is explained by…

The Intransigent Asshole Theory

Of course the Internet was always full of awful. Assholes have been trolling since at least 1993. “Don’t read the comments” is way more than five years old. But it’s different now; the assholes are more organized, their victims are often knowingly and strategically targeted, and many seem to have calcified from assholedom into actual evil. What’s changed?

The Intransigent Asshole Theory holds that the only thing that’s changed is that more assholes are online and they’ve had more time to find each other and agglomerate into a kind of noxious movement. They aren’t that large in number. Say that a mere three percent of the online population are, actually, the evil stereotypes that we perceive so many to be.

If three of 100 people are known to be terrible human beings, the other 97 can identify them and organize to defend themselves with relative ease. 97 is well within Dunbar’s number, after all. But what about 30 of 1,000? That gets more challenging, if those thirty band together; the non-awful people have to form fairly large groups. How about 300 of 10,000? Or 3,000 of 100,000? 3 million of 100 million? Suddenly three percent doesn’t seem like such a small number after all.
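For anyone who wants to check the arithmetic, here is a minimal sketch of that scaling. The three percent share is the example figure used in this piece; the value of 150 for Dunbar’s number is the commonly cited approximation and is an assumption here, as are the function names.

```python
# Illustrative only: AWFUL_SHARE_PERCENT is the essay's example figure, and
# DUNBAR_NUMBER = 150 is the commonly cited approximation (an assumption).
DUNBAR_NUMBER = 150
AWFUL_SHARE_PERCENT = 3


def awful_count(population: int) -> int:
    """Size of the awful minority at a fixed percentage share."""
    return population * AWFUL_SHARE_PERCENT // 100


def fits_within_dunbar(population: int) -> bool:
    """True if the non-awful majority is small enough to know one another."""
    return population - awful_count(population) <= DUNBAR_NUMBER


if __name__ == "__main__":
    for population in (100, 1_000, 10_000, 100_000, 100_000_000):
        print(population, awful_count(population), fits_within_dunbar(population))
```

The share never changes, but the absolute size of the minority does, and only the smallest community leaves a majority that fits within a group where everyone can know everyone else.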

I chose three percent because it’s the example used by Nassim Taleb in his essay/chapter “The Most Intolerant Wins: The Dictatorship of the Small Minority.” Adapting his argument slightly, if only 3% of the online population really wants the online world to be horrible, ultimately they can force it to be, because the other 97% can, as empirical evidence shows, live with a world in which the Internet is often basically a cesspool, whereas those 3% apparently cannot live with a world in which it is not.

Only a very small number of people comment on articles. But they are devoted to it; and, as a result, “don’t read the comments” became a cliché. Is it really so surprising that “don’t read the comments” spread to “Facebook is for fake outrage and Twitter is for abuse,” given that Facebook and Twitter are explicitly designed to spread high-engagement items, i.e. the most outrageous ones? Really the only thing that’s surprising is that it took this long to become so widespread.

Worst of all, when you combine the Uncanny Social Valley Theory with the Intransigent Asshole Theory and the high-engagement outrage-machine algorithms, you get a situation where, even if only 3% of people actually are irredeemable assholes, a full 30% or more of people seem that way to us. And the situation spirals ever downwards.

“Wait,” you may think, “but what if they didn’t design their social networks that way?” Well, that takes us to the third argument, which isn’t a theory so much as an inarguable fact:

The Outrage Machine Money Maker

Outrage equals engagement equals profit. This is not at all new; this goes back to the ‘glory’ days of yellow journalism and “if it bleeds, it leads.” Today, though, it’s more personal; today everyone gets a customized set of screaming tabloid headlines, from which a diverse set of manipulative publishers profit.

This is explicit for YouTube, whose creators make money directly from their highest-engagement, and thus (often) most-outrageous videos, and for Macedonian teenagers creating fake news and raking in the resulting ad income. This is explicit for the politically motivated, for Russian trolls and Burmese hate groups, who get profits in the form of the confusion and mayhem they want.

This is implicit for the platforms themselves, for Facebook and Twitter and YouTube, all of whom rake in huge amounts of money. Their income and profits are, of course, inextricably connected to the “engagement” of their users. And if there are social costs — and it’s become clear that the social costs are immense — then they have to be externalized. You could hardly get a more on-the-nose example of this than YouTube deciding that Wikipedia is the solution to its social costs.

The social costs have to be externalized because human moderation simply doesn’t scale to the gargantuan amount of data we’re talking about; any algorithmic solution can and will be gamed; and the actual solution — which is to stop optimizing for ever-higher engagement — is so completely anathema to the platforms’ business models that they literally cannot conceive of it, and instead claim “we don’t know what to do.”

https://twitter.com/CaseyNewton/status/973939328836603906

In Summary

- Only ~3% of people are truly terrible, but if we are sufficiently compliant with their awfulness, that’s enough to ruin the world for the rest of us. History shows that we have been more than sufficiently compliant.

- Social networks often dehumanize their participants; this plus their outrage-machine engagement optimization makes fully 30% of people seem like they’re part of those 3%, which breeds rancor and even, honestly no fooling not exaggerating, genocide.

- (Are those the exact numbers? Almost certainly not! My point is that social networks cause “you are an awful, irredeemable human being” to be massively overdiagnosed, by an order of magnitude or more.)

- A solution is for social networks to ramp down their outrage machine, i.e. to stop optimizing for engagement.

- They will not implement this solution.

- Since they won’t implement this solution, then unless they somehow find another one — possible, but unlikely — our collective online milieu will just keep getting worse.

- Sorry about that. Hang in there. There are still a lot of good things about social networks, after all, and it’s not like things can get much worse than they already are. Right?

- …Right?
