As we all know by now, Amazon Web Services (AWS) had an outage on Friday. It would not have been much of a big deal except that it was AWS, and the outage took down big consumer properties like Netflix and Instagram.

AWS is usually quite silent about what it does, but to its credit it has posted a lengthy explanation of what happened in Northern Virginia when massive storms hit the East Coast and took out one of its data centers.

In short, AWS said the backup generators failed in one of its data centers. That led to the downtime for Netflix and others. About 7% of the EC2 instances in the US-East-1 region were affected.

But there’s more to this story.

And that is what I find fascinating about what happened. Bad information caused a cascading effect that magnified the failure. And it all happened in the machines.

A bug AWS had never seen before in its Elastic Load Balancing (ELB) control plane caused a flood of requests, which created a backlog. At the same time, customers launched EC2 instances to replace the capacity they had lost when the storm knocked out power, and asked for those instances to be added to existing load balancers in the other, unaffected zones. Those requests added to the backlog. The load piled up in what amounts to a traffic jam with only one lane open out of the mess. Requests took longer and longer to complete, which led to the issues with Netflix and the rest.
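To see why a backlog turns into ever-longer waits, here is a toy Python model. The arrival and capacity numbers are made up for illustration and are not AWS figures, and `simulate_backlog` is a hypothetical helper, not anything AWS runs. Whenever requests arrive faster than the "one open lane" can drain them, the queue grows every tick, and so does the time a newly queued request has to wait.

```python
def simulate_backlog(arrivals_per_tick, capacity_per_tick, ticks):
    """Return (backlog, approx_wait_in_ticks) after each tick.

    Toy model with hypothetical constants: a fixed number of requests
    arrive each tick and a fixed number can be processed each tick.
    """
    backlog = 0
    history = []
    for _ in range(ticks):
        backlog += arrivals_per_tick                 # new requests join the queue
        backlog -= min(backlog, capacity_per_tick)   # the single lane drains a fixed amount
        wait = backlog / capacity_per_tick           # rough ticks a newly queued request waits
        history.append((backlog, wait))
    return history


# 150 requests arrive per tick, but only 100 can be handled per tick.
for tick, (backlog, wait) in enumerate(simulate_backlog(150, 100, 4), start=1):
    print(f"tick {tick}: backlog={backlog}, wait~{wait} ticks")
```

With arrivals 50% above capacity, the backlog grows by 50 requests every tick and the wait keeps climbing; drop arrivals below capacity and the queue drains to zero.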

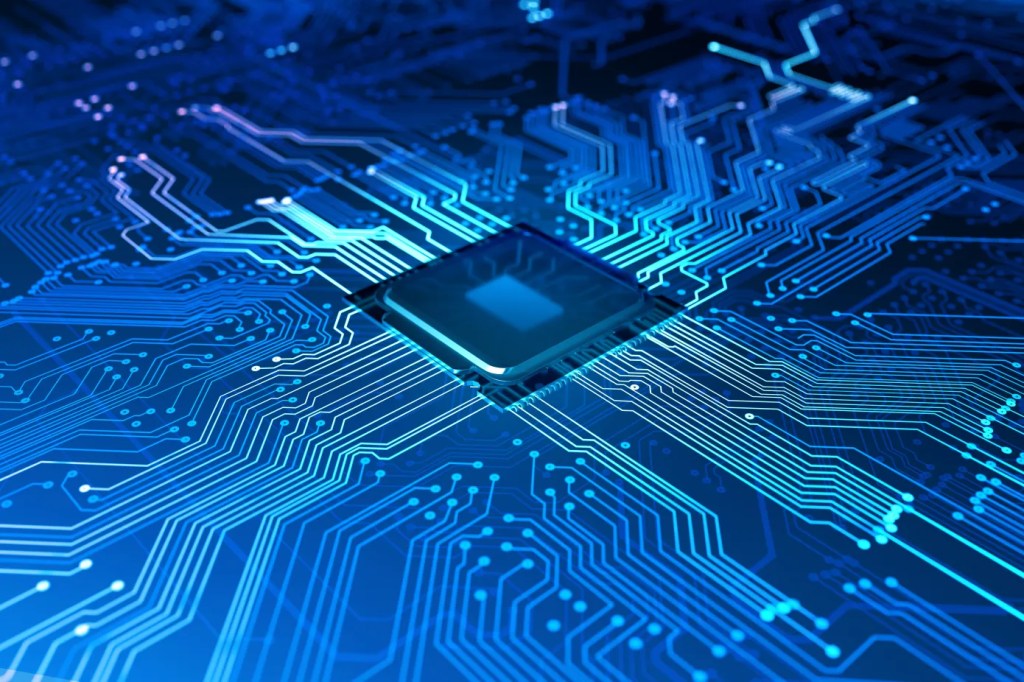

This is in part what separates AWS from other service providers. The AWS network is really a giant computer. It’s a living, breathing thing that relies on machine-to-machine communication. It’s the AWS secret sauce. Without it, Netflix could not economically offer its on-demand service. Instagram would have to rely on a traditional hosting environment, which can’t come close to what can be done with AWS.

It’s the constant tuning that makes AWS special. It’s those highly efficient machines that make it possible for AWS to keep its prices so low.

I think this is what the market often does not understand. Sure, AWS goes down. But, as its summary explains, it is taking steps to keep that from happening again. It’s like tuning a machine: AWS is learning and iterating so the giant computer it created performs better the next time. The machine will have trouble communicating sometimes, but there are programmatic ways to fix it, and AWS handles them pretty well.

Customers need to take this into account. Because AWS is a giant computer, it gives you control over your own application on the infrastructure itself. It has flexibility. It offers ways to keep the app available even if a particular data center goes down. You just need to understand that and design your applications accordingly.
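As a rough illustration of what designing for a data center failure can mean, here is a minimal Python sketch. It assumes a hypothetical fleet of app servers tagged by Availability Zone, with a health flag standing in for a real health check; the `Server` class, `route` function, server names, and zone labels are all illustrative, not an AWS API, and this is not how ELB works internally. The point is only that capacity spread across zones lets traffic shift to the survivors when one zone goes dark.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    zone: str            # hypothetical Availability Zone label
    healthy: bool = True # stand-in for the result of a real health check


def route(servers, request_id):
    """Pick a server round-robin, skipping any that fail the health check."""
    live = [s for s in servers if s.healthy]
    if not live:
        raise RuntimeError("no healthy servers left in any zone")
    return live[request_id % len(live)]


# One server in each of three zones (illustrative names).
fleet = [
    Server("web-1", "us-east-1a"),
    Server("web-2", "us-east-1b"),
    Server("web-3", "us-east-1c"),
]

# Simulate one zone losing power.
for s in fleet:
    if s.zone == "us-east-1a":
        s.healthy = False

targets = [route(fleet, i).name for i in range(4)]
print(targets)  # ['web-2', 'web-3', 'web-2', 'web-3']
```

Had all three servers lived in `us-east-1a`, `route` would raise instead of serving traffic, which is the single-zone trap the outage exposed.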
