Video is, quite literally, what gets the world moving online these days, and is expected to account for 82% of all IP traffic this year. Today a startup that has built a set of tools to help better parse, index and ultimately discover that trove of content is announcing a big round of funding to expand its business after seeing 600% growth in the last year.

AnyClip — which combines artificial intelligence with more standard search tools to provide better video analytics for content providers to improve how those videos can be used and viewed — has raised $47 million, money that it will be using to build out its platform.

The funding is being led by JVP, with La Maison, Bank Mizrahi and internal investors also participating. The company is not officially disclosing its valuation, but it has raised $70 million to date, and I understand from reliable sources that the valuation is around $300 million.

AnyClip was founded in Tel Aviv and is now co-headquartered in New York. The challenge it is tackling is that there is a huge amount of video out in the world today, and video remains one of the most used content mediums, whether you are a consumer binging a Netflix series, someone trying to dig up an obscure classical music recording on YouTube, a business user on Zoom, or something in the very large in-between. The problem is that in most cases, people are just scratching the surface when they search.

That’s not just because hosts tweak algorithms to steer viewers toward some things instead of others; it’s because in most cases it’s too difficult, and some might say impossible, to search everything in an efficient way.

AnyClip is among the tech companies that believe it’s not impossible. It uses technologies that include deep learning models based on computer vision, NLP, speech-to-text, OCR, patented key frame detection and closed captioning to “read” the content in videos. It can recognize people, brands, products, actions and millions of keywords, and build taxonomies based around what the videos contain. These can be based on, for example, content category, brand safety, or whatever a customer requests.

The videos that AnyClip currently works with are hosted by AnyClip itself — on AWS, president and CEO Gil Becker tells me — and the process of reading and indexing is super quick, “10x faster than real time.”

The resulting data, and what can be done with it, have a lot of potential uses, as you might guess. Becker said that AnyClip is currently finding a strong audience among customers that are looking for ways of better organising their video content for a variety of use cases, whether that’s for internal purposes, for B2B purposes, or for consumers to better discover something.

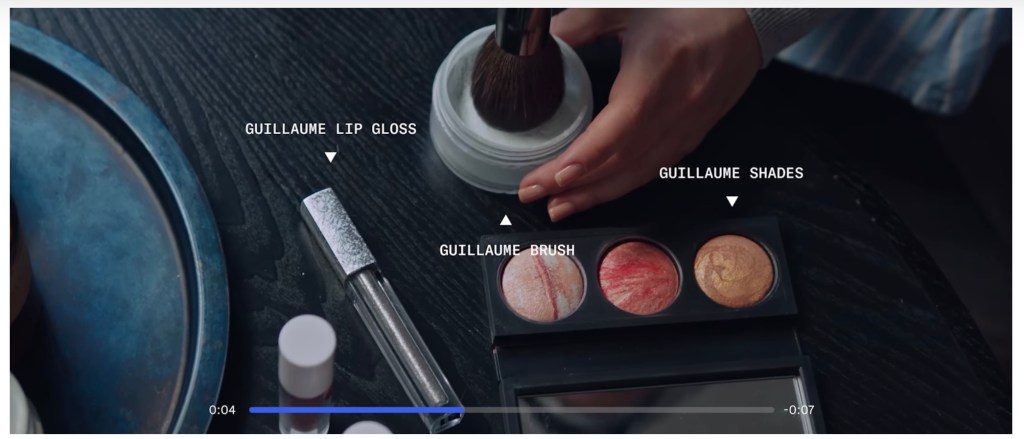

As the illustration above shows, that tech can be used, naturally, to better monetize video. By identifying more objects, themes, moods and language in videos more efficiently, AnyClip essentially can build a framework not just for people to better discover videos, but for advertisers to place ads next to whatever they want to be near (or conversely, better avoid content that they do not want any association with at all).

The list of those it works with is pretty impressive — although Becker would not get very specific on what it does for all of its clients. It includes Samsung, Microsoft, AT&T, Amazon (Prime Video specifically), Heineken, Discovery, Warner Media (the latter two soon to be one), Tencent, Internet Brands and Google.

AnyClip does not count Google as an investor per se, but it has received funding from it, specifically as part of its Google News Initiative’s Innovation Challenge to create a streaming video page experience for media companies that mimics the functionality and design of today’s most popular video-on-demand services while accessing advanced video management tools supported by AnyClip’s AI backbone. AnyClip was chosen from among hundreds of companies for its solution that allows companies to transform any library into a “Netflix or YouTube-like” library, creating channels and subchannels, in less than 30 seconds.

AnyClip has an interesting history that led to it building the search and discovery tools that it sells today. It started life back in 2009 with a concept that spoke directly to its name: It let media companies create clips of films that could be shared around the internet, which it hosted on a site of its own. These could be found using a number of taxonomies built by AnyClip’s algorithms, by humans at the company, and by contributors. Kind of like a Giphy before its time, if you will.

It turned out to be possibly too far ahead of its time. At a time when piracy was still a big deal, and there were no Netflixes or other places for streaming efficiently and legally, the idea proved to be too complicated and too hard a sell for rights owners. The company subsequently pivoted to building a video-based ad network, which was probably too early as well.

But there was something to the technology, given the right place and right time, and that seems to be where the startup has landed today, with patents behind what it has built and a team of engineers continuing to expand the tech. It hopes that this will be enough to keep it ahead of competitors, which include the likes of Kaltura, Brightcove and many others. And naturally, given the size of the opportunity, that competition will not be disappearing soon.

Notably, AnyClip’s own growth, on the back of what has up to now been a modest amount of funding ($30 million in 12 years), speaks to its ability not just to win business against those rivals, but to be capital efficient in what is typically considered a very bandwidth- and resource-intensive medium.

“There is a revolution coming in the way enterprises use video to convey their message and their identity,” says Erel Margalit, JVP founder and Chairman, and AnyClip’s board chairman, in a statement. “For the first time, AI meets video. Companies and organizations are now working to utilize this to create a new mode of communications, internally and externally, in all areas where video dominates in a much stronger way than text. Whether it’s how to create videos for consumers or training videos for the organization, or learning how to manage conferences run by video on Zoom that need intelligent management in the retrieving of content. This is a new era, and AnyClip is a vital tool for anyone embarking upon it.”
