I see far more research articles than I could possibly write up. This column collects the most interesting of those papers and advances, along with notes on why they may prove important in the world of tech and startups.

In this edition: a new type of laser emitter that uses metamaterials, dogs that take commands from robots, a neurological breakthrough that may advance prosthetic vision, and other cutting-edge technology.

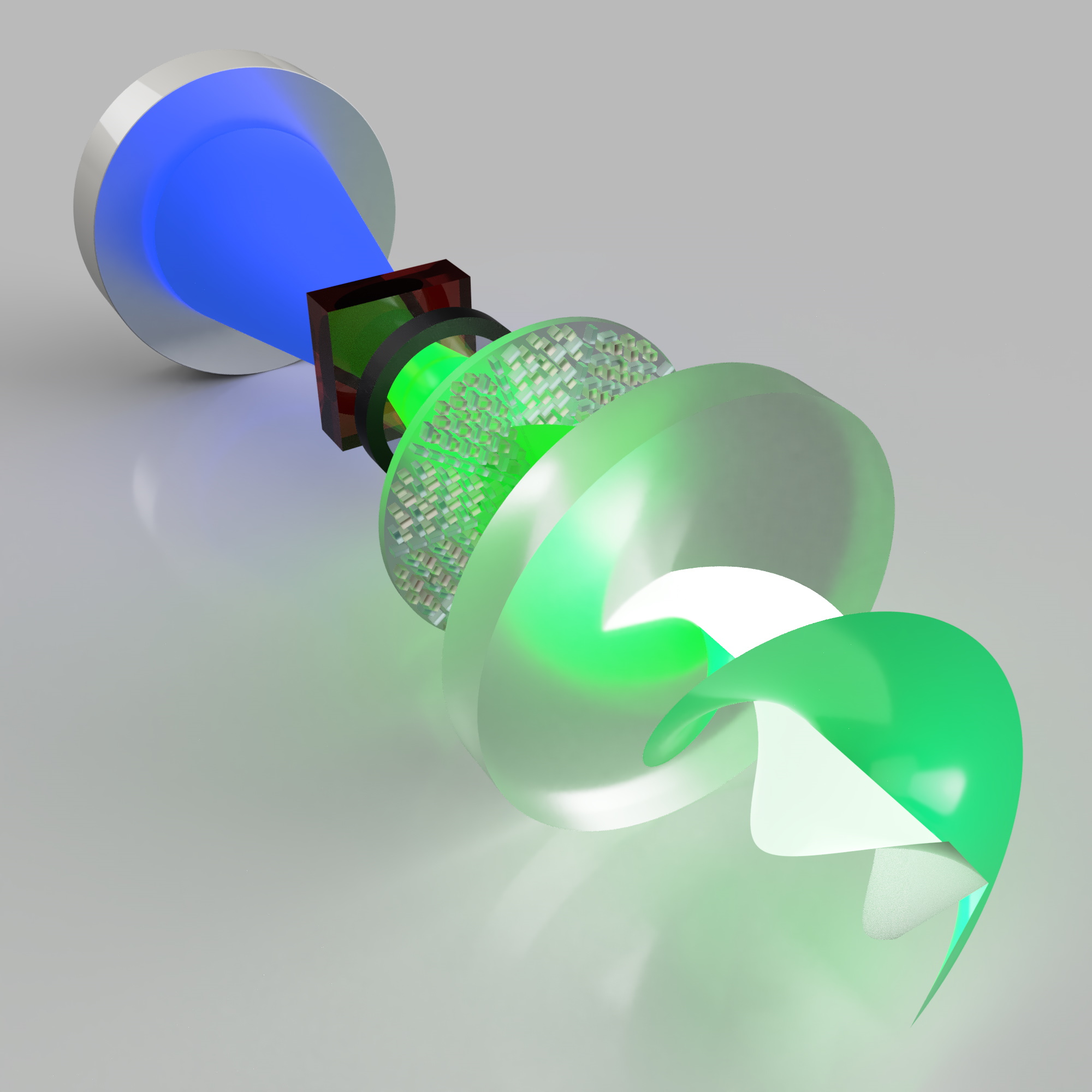

Twisted laser-starters

We think of lasers as going “straight” because that’s simpler than understanding their nature as groups of like-minded photons. But lasers have more exotic qualities beyond wavelength and intensity, ones scientists have been trying to exploit for years. One such quality is… well, there are a couple of names for it: chirality, vorticality, spirality and so on. All of them describe a beam with a corkscrew motion to it. Applying this quality effectively could improve optical data throughput by an order of magnitude.

The trouble with such “twisted light” is that it’s very difficult to control and detect. Researchers have been making progress on this for a couple of years, but the past couple of weeks brought some new advances.

First, from the University of the Witwatersrand, is a laser emitter that can produce twisted light of record purity and angular momentum — a measure of just how twisted it is. It’s also compact and uses metamaterials — always a plus.
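For readers who want the physics behind “how twisted”: in the standard textbook description (a general formula, not one taken from either paper discussed here), a twisted beam carries a helical phase factor whose integer ℓ, the topological charge, sets both the amount of twist and the orbital angular momentum carried by each photon. The last relation below says beams with different ℓ are orthogonal, which is why several of them can, in principle, share one channel without mixing.

$$
E(r,\phi,z) \propto A(r,z)\, e^{i\ell\phi}\, e^{ikz}, \qquad L_z = \ell\hbar \ \text{per photon}, \qquad \frac{1}{2\pi}\int_0^{2\pi} e^{i(\ell-m)\phi}\,d\phi = \delta_{\ell m}
$$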

The second is a pair of matched (and very multi-institutional) experiments that yielded both a transmitter that can send vortex lasers and, crucially, a receiver that can detect and classify them. It’s remarkably hard to determine the orbital angular momentum of an incoming photon, and the hardware to do so is clumsy. The new detector is chip-scale, and together the two devices can use five preset vortex modes, potentially widening a laser-based data channel by a corresponding factor. Vorticality is definitely on the roadmap for next-generation network infrastructure, so you can expect startups in this space soon as universities spin out these projects.
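To make “detect and classify” and “five modes, five channels” concrete, here is a minimal numerical sketch under heavy idealizing assumptions: the beam is sampled only around a single ring, there is no noise, turbulence or misalignment, and each mode carries one on/off bit. The names (`MODES`, `helical_phase_ring`, `project`, `multiplex`, `demultiplex`) are hypothetical, and this shows the textbook principle of projecting onto orthogonal modes, not how either team’s chip-scale hardware actually works.

```python
import cmath
import math

# Hypothetical mode set: five topological charges standing in for the five preset vortex modes.
MODES = [-2, -1, 0, 1, 2]


def helical_phase_ring(ell, n_samples=64):
    """Sample the phase factor exp(i * ell * phi) at evenly spaced angles around the beam."""
    return [cmath.exp(1j * ell * 2 * math.pi * k / n_samples) for k in range(n_samples)]


def project(field, ell):
    """Overlap of the sampled field with mode ell (1 for a unit-amplitude match, ~0 otherwise)."""
    ref = helical_phase_ring(ell, len(field))
    return sum(f * r.conjugate() for f, r in zip(field, ref)) / len(field)


def classify_mode(field, modes=MODES):
    """Receiver for a single incoming mode: pick the mode with the largest overlap power."""
    return max(modes, key=lambda m: abs(project(field, m)) ** 2)


def multiplex(bits, modes=MODES, n_samples=64):
    """Transmitter: switch each mode on (bit 1) or off (bit 0) and superpose them."""
    field = [0j] * n_samples
    for bit, ell in zip(bits, modes):
        if bit:
            field = [f + r for f, r in zip(field, helical_phase_ring(ell, n_samples))]
    return field


def demultiplex(field, modes=MODES, threshold=0.5):
    """Receiver for a superposition: read one bit per mode from its overlap magnitude."""
    return [1 if abs(project(field, ell)) > threshold else 0 for ell in modes]


print(classify_mode(helical_phase_ring(1)))     # → 1
print(demultiplex(multiplex([1, 0, 1, 1, 0])))  # → [1, 0, 1, 1, 0]
```

Because overlaps between different modes cancel to zero, the five modes carry five independent bits per symbol here, which is the “corresponding factor” in the simplest possible case.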

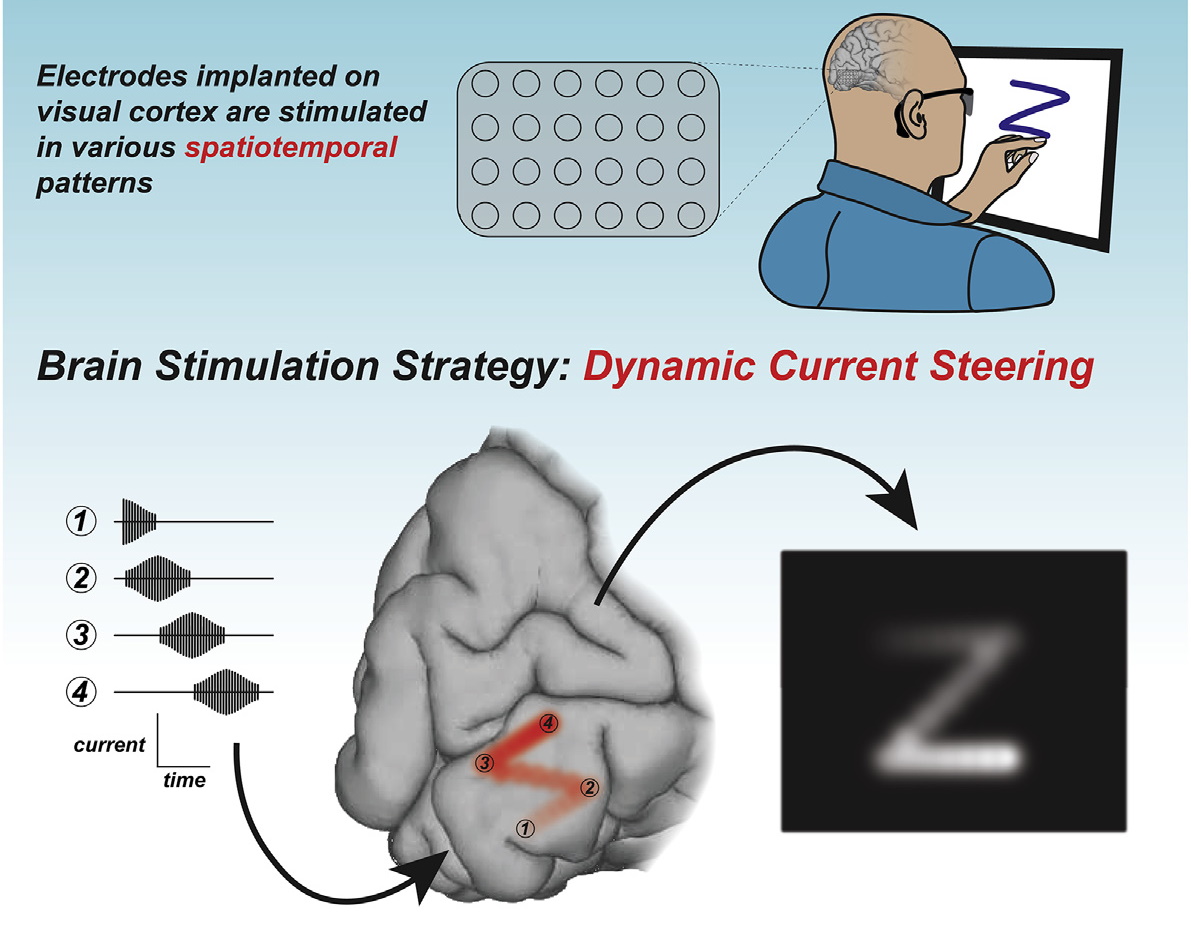

Tracing letters on the brain-palm

Research into prosthetic vision has hit a number of brick walls since early advances in microelectrode arrays and control systems produced a wave of hype around the turn of the century. But one need not reproduce a rich visual scene to provide utility to the vision-impaired. This research from the University of Pennsylvania looks at stimulating the visual cortex in a new and effective way.

The visual cortex is laid out roughly like a map of our field of vision, so in theory it should be easy to send imagery to. Surprise: It’s not that simple! Without the fine-grained signals normally sent through the retina and optic nerve, stimulating multiple points on this part of the brain at once produces a muddled blip, not a stark outline or overall impression.

This experiment showed that drawing a stimulus, such as a letter, across the visual cortex, as if tracing it on a palm, works like a charm. Blind study participants were able to recognize these forms at a rate of over one per second for minutes at a time.
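The key difference is timing: the approach that produced a muddled blip fires many electrodes at once, while tracing fires them one after another along the shape. A minimal sketch of turning a letter’s stroke into a one-at-a-time stimulation schedule, assuming a made-up six-electrode grid (`ELECTRODES`), a made-up 50 ms step, and a visual-field-to-cortex map that is a straight copy (real retinotopic maps are warped). It illustrates the idea only and is not the researchers’ protocol.

```python
import math

# Hypothetical electrode positions (mm) on a 2 x 3 grid; real implants differ.
ELECTRODES = {
    "e1": (0.0, 0.0), "e2": (0.0, 2.0), "e3": (0.0, 4.0),
    "e4": (2.0, 0.0), "e5": (2.0, 2.0), "e6": (2.0, 4.0),
}


def nearest_electrode(point, electrodes):
    """Electrode closest to a point on the (assumed linear) cortical map."""
    return min(electrodes, key=lambda name: math.dist(point, electrodes[name]))


def trace_schedule(path, electrodes, step_ms=50):
    """Turn a stroke into (onset_ms, electrode) pulses fired one at a time, never together."""
    schedule = []
    for point in path:
        name = nearest_electrode(point, electrodes)
        if not schedule or schedule[-1][1] != name:  # don't re-fire the same electrode back to back
            schedule.append((len(schedule) * step_ms, name))
    return schedule


# An "L": down the left column, then along the bottom row.
letter_L = [(0.0, 4.0), (0.0, 3.5), (0.0, 2.0), (0.0, 0.5), (0.0, 0.0), (1.5, 0.0), (2.0, 0.0)]
print(trace_schedule(letter_L, ELECTRODES))
# → [(0, 'e3'), (50, 'e2'), (100, 'e1'), (150, 'e4')]
```

With these invented timings the whole letter is drawn in a fraction of a second, comfortably within the “over one per second” pace the participants managed.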

It won’t replace audiobooks or Braille in its current form, but this is a new avenue for prosthetic vision and perhaps a more realistic place to start than representing the “blooming, buzzing confusion” of the world.

Dog-robot and robo-dog interactions

After millennia of co-existence, dogs and humans make a great team. But does the canine eagerness to please people extend to robots that just look like people? This Yale study took dozens of dogs and put them in a situation where a Nao robot — humanoid in shape but definitely different — interacted normally with real humans, gave the test dog a treat, then told it to sit. A control group had the command come from a speaker.

Turns out dogs are far more likely to obey a command from the robot than one from the speaker, although, as expected, they were generally a bit perplexed by the whole thing.

This has a bearing on future pet care products for sure. Unlike a Roomba, which can take any form as long as it vacuums well, a pet care robot (you know they’re coming) may perform much better in anthropomorphic form.

In another piece of work totally unrelated except that it involves four legs, engineers at NASA’s Johnson Space Center and Georgia Tech put together a weird but effective new form of locomotion for wheeled robots, in particular those that need to navigate tricky or slippery terrain.

Instead of reinventing the wheel (literally, since that’s what NASA has had to do for Mars rovers), they combined rolling with walking: the wheels serve as feet in a shuffling, quadrupedal form of locomotion that works even on sandy slopes.

This type of switchable movement could be really helpful for rovers on Mars, where the dust is notoriously difficult to manage. It could also have implications for autonomous robots here on Earth that must navigate more prosaic, but no less difficult, terrain, like gravel walkways and stairs.
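The article doesn’t say how, or whether, the robot decides on its own when to stop rolling and start walking. As a purely hypothetical sketch of what “switchable movement” could look like in software, the controller below switches modes based on an estimated wheel slip, with two invented thresholds (`SLIP_TO_WALK`, `SLIP_TO_ROLL`) that provide hysteresis. Nothing here comes from the NASA and Georgia Tech work.

```python
# Hypothetical thresholds; a real rover would tune these to its terrain and hardware.
SLIP_TO_WALK = 0.6  # switch to walking when more than 60% of wheel motion is wasted
SLIP_TO_ROLL = 0.2  # return to rolling once slip falls below 20%


def slip_ratio(wheel_rad_s, wheel_radius_m, ground_speed_m_s):
    """Longitudinal slip: 0 means perfect rolling, 1 means spinning in place."""
    commanded = wheel_rad_s * wheel_radius_m  # speed the wheel would give with no slip
    if commanded <= 0:
        return 0.0
    return max(0.0, min(1.0, (commanded - ground_speed_m_s) / commanded))


def next_mode(mode, slip):
    """Two separate thresholds keep the rover from flapping between modes."""
    if mode == "roll" and slip > SLIP_TO_WALK:
        return "walk"
    if mode == "walk" and slip < SLIP_TO_ROLL:
        return "roll"
    return mode


# Usage: firm ground, then a sandy slope, then partial recovery, then firm ground again.
mode = "roll"
history = []
for slip in [0.1, 0.7, 0.4, 0.1]:
    mode = next_mode(mode, slip)
    history.append(mode)
print(history)  # → ['roll', 'walk', 'walk', 'roll']
```

The gap between the two thresholds is a common design choice for mode switches: a single threshold would toggle back and forth on every noisy reading near it.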

A light touch

The sensitivity of the human fingertip is a truly remarkable thing, making it a tool for all occasions — though we end up just banging these miraculous instruments on keyboards all day. Robotic sensory faculties have a long way to go before they match up, but two new approaches using light may indicate a way forward.

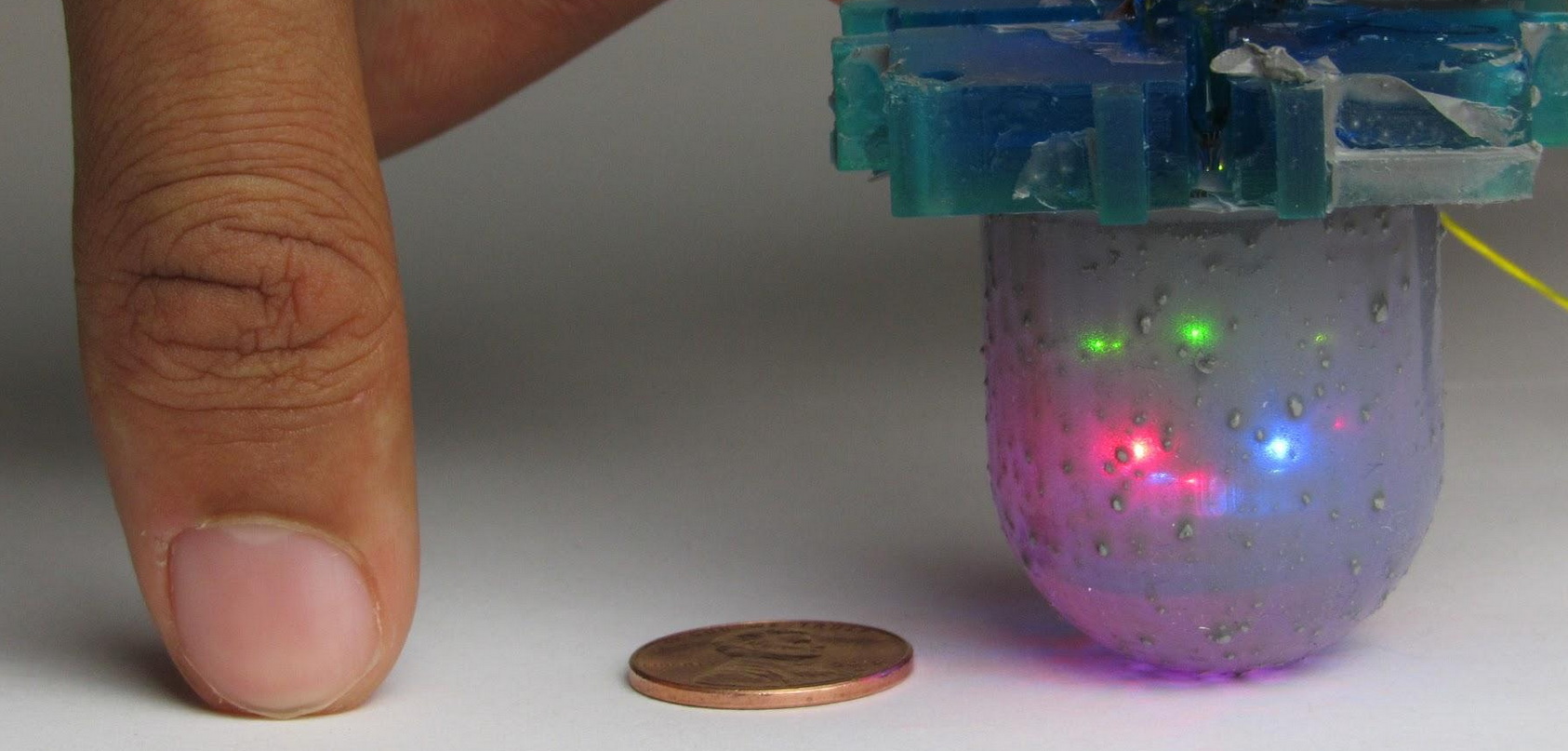

One is OmniTact, an evolution of existing light-based touch sensors. Those earlier devices, devised years ago, are essentially a miniature camera watching the inside of a flexible, illuminated surface and inferring pressure and movement from how it deforms. They could cover only a small area, but OmniTact uses multiple cameras and LEDs to cover a much larger one, about the size of a thumb. The slightest shift of the surface produces a pattern that the cameras pick up and track.
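The core idea, a camera noticing that the inside of the skin looks different from its resting state, fits in a few lines. This is a deliberately crude, hypothetical stand-in (frame differencing against a reference image, with an invented `threshold`) and is far simpler than whatever processing OmniTact actually uses; images are plain nested lists of grayscale values to keep it dependency-free.

```python
def locate_contact(reference, frame, threshold=10):
    """Change-weighted centroid (row, col) of pixels that differ from the resting image.

    Returns None when no pixel changed by more than `threshold`.
    """
    total = row_sum = col_sum = 0.0
    for r, (ref_row, cur_row) in enumerate(zip(reference, frame)):
        for c, (ref_px, cur_px) in enumerate(zip(ref_row, cur_row)):
            change = abs(cur_px - ref_px)
            if change > threshold:
                total += change
                row_sum += r * change
                col_sum += c * change
    if total == 0:
        return None
    return (row_sum / total, col_sum / total)


# Usage: an 8 x 8 resting image, then a press that brightens a 2 x 2 patch.
reference = [[100] * 8 for _ in range(8)]
frame = [row[:] for row in reference]
for r in (2, 3):
    for c in (5, 6):
        frame[r][c] = 160
print(locate_contact(reference, frame))  # → (2.5, 5.5)
```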

ETHZ in Switzerland takes a similar approach, using a film filled with microbeads whose positions are tracked by a tiny camera inside the sensor.

There are several advantages to these techniques. The touch-sensitive surface is just plain silicone or another inert, durable material, meaning it can be deployed in all kinds of situations. And because the sensors track how the surface moves rather than just whether it is pressed, they can detect not only point pressure but also sideways shear forces. They’re fairly low-cost, too, using small but far-from-exotic cameras and electronics. This approach is definitely a contender in the fast-evolving domain of robotic sensation.
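The shear claim follows from the sensors seeing how the surface moves, not just whether it moved. A toy sketch in the spirit of the ETHZ bead film, assuming we already have matched 2D bead positions before and after contact (in mm): a uniform sideways drift of the beads reads as shear, while beads spreading away from their center reads as a press. The function names are hypothetical, and converting millimeters into newtons would need a per-sensor calibration not shown here.

```python
import math


def centroid(points):
    """Mean (x, y) of a list of bead positions."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def mean_radius(points):
    """Average distance of the beads from their own centroid."""
    cx, cy = centroid(points)
    return sum(math.hypot(x - cx, y - cy) for x, y in points) / len(points)


def bead_motion(before, after):
    """Split bead motion into a shear part and a pressing part (both in mm).

    shear  : mean (dx, dy) displacement of all beads, i.e. sideways drag
    spread : change in mean distance from the bead centroid, i.e. outward push from a press
    """
    bx, by = centroid(before)
    ax, ay = centroid(after)
    shear = (ax - bx, ay - by)
    spread = mean_radius(after) - mean_radius(before)
    return shear, spread


before = [(0.0, 0.0), (2.0, 0.0), (0.0, 2.0), (2.0, 2.0)]
dragged = [(x + 0.5, y) for x, y in before]                          # finger slides sideways
pressed = [(1 + 1.1 * (x - 1), 1 + 1.1 * (y - 1)) for x, y in before]  # finger presses down
print(bead_motion(before, dragged))  # → ((0.5, 0.0), 0.0)
```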

Video games good, social media bad

A few years ago one might have expected to hear about the deleterious effects of gaming and the great promise of social networks. Well, as usual, things are never quite how we expect.

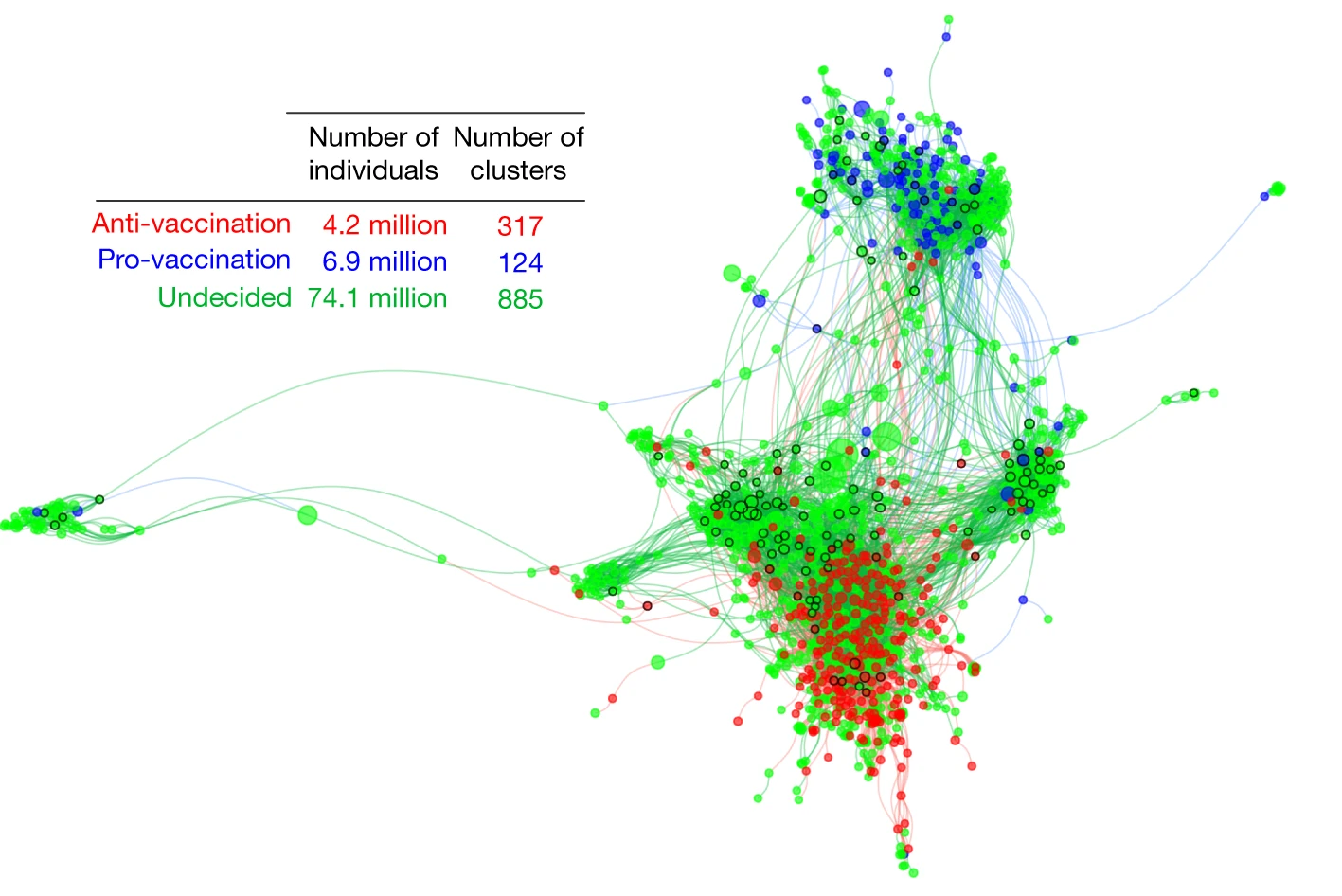

The latest ill effect of social networks turns out to be a set of network effects that amplify the spread of anti-vaccination groups and views. Based on sophisticated models of interactions, groupings and other data from months of Facebook use, researchers found that groups espousing good science were larger but fewer and showed little growth, while anti-vaccination groups were small but numerous, multiplying and growing like crazy. If current trends continue, the authors warn in their paper published in Nature, anti-vaccination views could dominate within about a decade, with serious detriment to world health.
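The basic dynamic, a smaller but faster-growing side eventually overtaking a larger, stagnant one, is plain compounding. A toy sketch with invented numbers shows the crossover arithmetic. It is not the paper’s network model, and the totals and growth rates below are hypothetical.

```python
def years_until_overtake(large_total, large_growth, small_total, small_growth, max_years=100):
    """First whole year in which the smaller side exceeds the larger one, or None.

    Growth rates are per-year fractions (0.10 means 10% a year), compounded annually.
    """
    for year in range(1, max_years + 1):
        large_total *= 1 + large_growth
        small_total *= 1 + small_growth
        if small_total > large_total:
            return year
    return None


# Invented example: a side twice as large but not growing vs. one growing 10% a year.
print(years_until_overtake(100, 0.0, 50, 0.10))  # → 8
```

Even a twofold size advantage buys only a few years when the other side compounds faster.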

Lastly, a palate cleanser. An interesting study tested seniors aged 80-97 on their working memory and a few other factors before and after taking part in regular sessions of the online multiplayer action game Star Wars Battlefront (!). After three weeks of regular 30-minute sessions, the game-playing group showed significant improvements to visual attention, task-switching and working memory compared with a control group. Just imagine folks in their 90s playing Battlefront and then being sharper than ever. I hope LAN parties become a regular pastime at retirement communities.
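“Compared with a control group” matters: both groups may score better on a retest just from practice, so the effect of interest is the extra improvement in the game-playing group. One common way to express that is a difference in mean changes, sketched below with invented scores; the study’s actual statistics may differ.

```python
from statistics import mean


def improvement_vs_control(game_pre, game_post, control_pre, control_post):
    """Mean pre-to-post change in the game group minus mean change in the control group."""
    game_change = mean(post - pre for pre, post in zip(game_pre, game_post))
    control_change = mean(post - pre for pre, post in zip(control_pre, control_post))
    return game_change - control_change


# Hypothetical working-memory scores for three participants per group.
gain = improvement_vs_control(
    game_pre=[10, 12, 11], game_post=[13, 14, 15],
    control_pre=[11, 10, 12], control_post=[11, 11, 14],
)
print(gain)  # → 2
```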
