Last week, Google finally released the developer guides and other necessary documents that will allow developers to write apps for Glass. In some respects, the so-called Mirror API may have been a disappointment to developers who were expecting to run full-blown augmented-reality apps, but even in its current form, it will allow developers to create experiences for new and existing apps that just weren’t possible before.

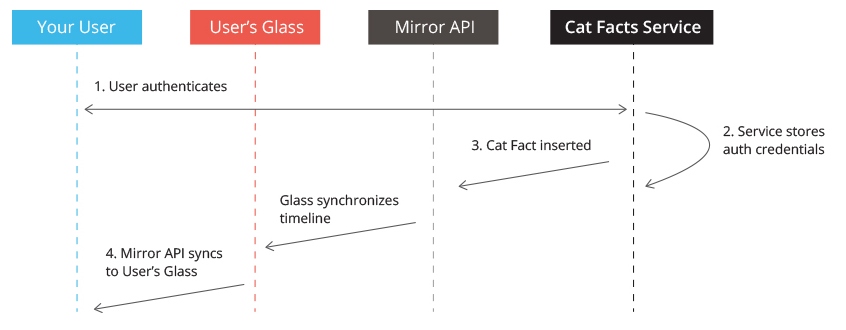

One thing many developers may not have realized before Google published these documents is that the API is essentially an old-school RESTful service. The only way to interact with Glass is through the cloud. The only apps you can build – at least for now – are web-based, and despite the fact that Glass runs Android, you can’t run any services directly on the hardware.
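To make the REST point concrete, here is a minimal Python sketch of what pushing a card to a user’s Glass might look like from your server. The endpoint URL, the `text` field and the bearer-token header reflect my reading of Google’s documentation and should be treated as assumptions; the function name and the placeholder token are made up for illustration. The code only builds the HTTP request and never sends it.

```python
import json
import urllib.request

# Assumed endpoint for inserting a card into a user's timeline.
TIMELINE_URL = "https://www.googleapis.com/mirror/v1/timeline"


def build_timeline_request(text, access_token):
    """Build (but do not send) an HTTP POST that would insert a text card."""
    # The card itself is just a small JSON document.
    body = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        TIMELINE_URL,
        data=body,
        method="POST",
        headers={
            # Hypothetical OAuth 2.0 token obtained from the user beforehand.
            "Authorization": "Bearer " + access_token,
            "Content-Type": "application/json",
        },
    )


request = build_timeline_request("Hello from the cloud", "PLACEHOLDER_TOKEN")
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
```

Nothing here runs on Glass itself. Your service talks to Google’s servers, and Google decides what actually reaches the device.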

Google may have made this choice for a number of reasons. It helps keep Glass’ battery life reasonable (Google says it should last a full day, assuming you don’t record a lot of video), and it also means that if a service goes haywire and sends out a fresh cat picture to users every second, Google can intervene and cut off that service’s access. Depending on how you look at it, that’s either a good or a bad thing, but Google is clearly interested in keeping some control over what’s happening on Glass for now.

The way the API works, however, also means there are things you can’t quite do with Glass yet that are possible on any modern smartphone. You can’t write a real augmented-reality app, for example. It also doesn’t look as if you could easily stream audio or video from the device to your own services (though you can obviously use Hangouts on Glass).

Because the platform is essentially web-based, you are also limited to HTML and CSS when it comes to styling your apps, and Google would prefer it if you didn’t write any custom CSS and just stuck with its own templates.
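As a rough illustration of what an HTML-styled card could look like, the Python sketch below wraps two strings in plain markup and packs the result into a JSON payload. The `html` field name and the `<article>`/`<section>` structure are assumptions based on Google’s sample templates, and `build_html_card` is a hypothetical helper, not part of any Google library.

```python
import html
import json


def build_html_card(headline, detail):
    """Return a JSON payload for a card styled with plain HTML, no custom CSS."""
    # Escape user-supplied text so it cannot break the card's markup.
    markup = (
        "<article><section>"
        "<h1>{}</h1><p>{}</p>"
        "</section></article>"
    ).format(html.escape(headline), html.escape(detail))
    # Assumed field name for HTML content in a timeline card.
    return json.dumps({"html": markup})


payload = build_html_card("Cats & dogs", "One <new> photo")
print(payload)
```

Sticking to bare structural tags like these, and leaving the styling to Google’s templates, is in line with what Google says it prefers.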

For the most part, though, developers will be able to approximate the experience Google shared in its first Glass demo video last year.

Assuming you have an Android phone, you will be able to create location-enabled apps. Users can send images to your service, so you could build a service that manipulates or analyzes these images in the cloud and then sends the results back to the user. They can upload videos, too, and in return you can send audio, video and photos to the user.

What developers aren’t allowed to do (yet?), however, is display advertising on a user’s Glass screen or sell their apps (unlawful gambling apps are also out of the question). Given the current size of the market for Glass apps, that’s not a huge issue. I expect that Google will allow developers to sell access to their apps in the future, but traditional ads just don’t make sense on this platform.

Google stresses that Glass is still a very new platform and that developers should keep this in mind when they write applications for it. That’s also why only developers who own the actual hardware can currently get API access.

For the time being, then, developers will likely remain somewhat underwhelmed by the API. It’s worth remembering, however, that this is just a first release. Google could open the hardware up to more sophisticated apps in the future, enable access to more features through the current API, or both. Without native apps, Glass won’t be able to do many things developers may have envisioned for it, but even with these limitations, I’m sure we’ll see a fair amount of innovative Glassware in the near future.