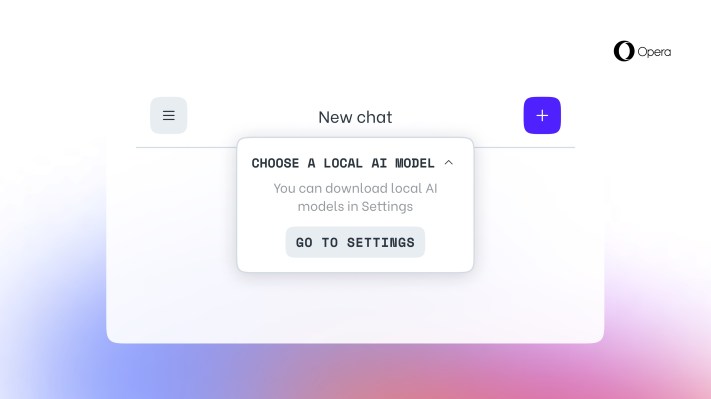

Web browser company Opera announced today that it will now allow users to download and use large language models (LLMs) locally on their computer. The feature is rolling out first to Opera One users on the developer stream, and it lets them choose from more than 150 models across more than 50 families.

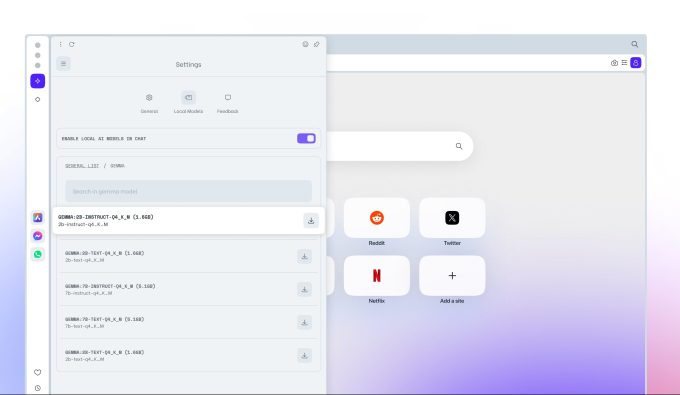

These models include Meta’s Llama, Google’s Gemma and Vicuna. The feature is part of Opera’s AI Feature Drops Program, which gives users early access to some of the company’s AI features.

The company said it uses the open source Ollama framework in the browser to run these models on your computer. For now, all available models are a subset of Ollama’s library, but the company plans to add models from other sources in the future.

Image Credits: Opera

The company noted that each variant takes up more than 2GB of space on your local system, so keep an eye on your free disk space to avoid running out of storage. Notably, Opera does nothing to reduce storage use when downloading a model.

“Opera has now for the first time ever provided access to a large selection of 3rd party local LLMs directly in the browser. It is expected that they may reduce in size as they get more and more specialized for the tasks at hand,” Jan Standal, VP, Opera told TechCrunch in a statement.

Image Credits: Opera

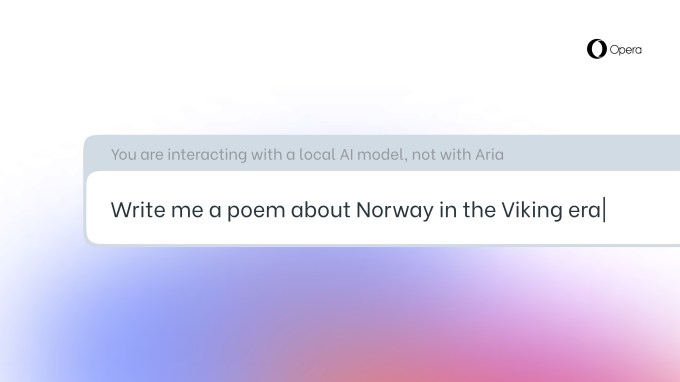

The feature is useful if you plan to test various models locally, but if you want to save space, online tools such as Quora’s Poe and HuggingChat also let you explore different models.

Opera has been experimenting with AI-powered features since last year. The company launched Aria, an assistant that lives in the sidebar, last May and brought it to its iOS app in August. In January, Opera said it is building an AI-powered browser with its own engine for iOS, as the EU’s Digital Markets Act (DMA) required Apple to drop its rule that mobile browsers must use the WebKit engine.