YouTube today announced how it will handle AI-created content on its platform, with a range of new policies surrounding responsible disclosure as well as new tools for requesting the removal of deepfakes, among other things. The company says that although it already has policies prohibiting manipulated media, AI necessitated new policies because of its potential to mislead viewers who don’t know a video has been “altered or synthetically created.”

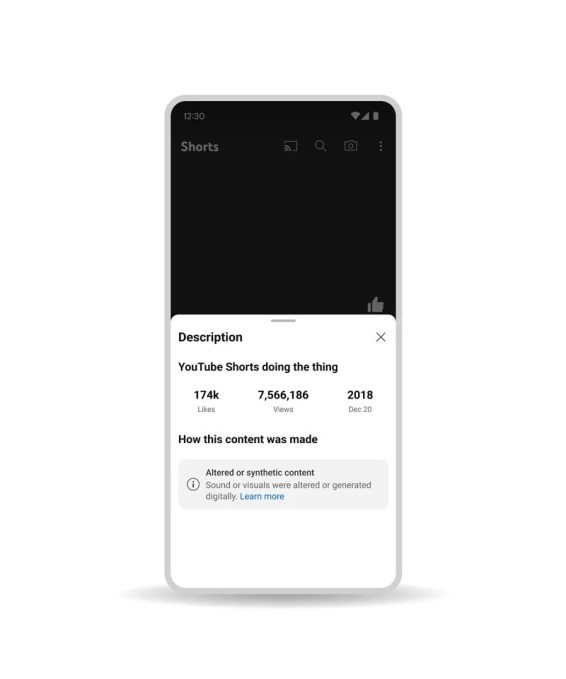

Among the changes rolling out are new disclosure requirements for YouTube creators. They will now have to disclose when they’ve created altered or synthetic content that appears realistic, including videos made with AI tools. For instance, this disclosure would apply if a creator uploads a video that appears to depict a real-world event that never happened, or that shows someone saying something they never said or doing something they never did.

Image Credits: YouTube

It’s worth pointing out that this disclosure is limited to content that “appears realistic,” and is not a blanket disclosure requirement on all synthetic video made via AI.

“We want viewers to have context when they’re viewing realistic content, including when AI tools or other synthetic alterations have been used to generate it,” YouTube spokesperson Jack Malon told TechCrunch. “This is especially important when content discusses sensitive topics, like elections or ongoing conflicts,” he noted.


In fact, YouTube itself is dabbling in AI-generated content. The company announced in September that it was preparing to launch a new generative AI feature called Dream Screen early next year, which would allow YouTube users to create an AI-generated video or image background by typing in what they want to see. All of YouTube’s generative AI products and features will be automatically labeled as altered or synthetic, we’re told.


The company also warns that creators who consistently fail to properly disclose their use of AI will be subject to “content removal, suspension from the YouTube Partner Program, or other penalties.” YouTube says it will work with creators to make sure they understand the requirements before they go live. But it notes that some AI content, even if labeled, may be removed if it’s used to show “realistic violence” when the goal is to shock or disgust viewers. That seems a timely consideration, given that deepfakes have already been used to confuse people about the Israel-Hamas war.

YouTube’s warning of punitive action, however, follows a recent softening of its strike policy. In late August, the company announced it was giving creators new ways to wipe out their warnings before they turn into strikes that could result in the removal of their channel. Because creators can now complete an educational course to have their warnings removed, the changes could let them get away with deliberately disregarding YouTube’s rules by carefully timing when they post violative content. Someone determined to post unapproved content now knows they can take that risk without losing their channel entirely.

If YouTube takes a similarly soft stance on AI, allowing creators to make “mistakes” and then return to post more videos, the resulting spread of misinformation could become a real problem. The company also isn’t clear on how “consistently” its AI disclosure rules would have to be broken before it takes punitive action.

Other changes include the ability for any YouTube user to request the removal of AI-generated or other synthetic or altered content that simulates an identifiable individual (aka a deepfake), including their face or voice. But the company clarifies that not all flagged content will be removed, making room for parody or satire. It also says it will consider whether the person requesting the removal can be uniquely identified, or whether the video features a public official or other well-known individual, in which case “there may be a higher bar,” YouTube says.

Alongside the deepfake removal request tool, the company is introducing a new ability that will allow music partners to request the removal of AI-generated music that mimics an artist’s singing or rapping voice. YouTube said it was developing a system that would eventually compensate artists and rightsholders for AI music, so this seems to be an interim step that simply allows content takedowns in the meantime. YouTube will weigh some considerations here as well, noting that content that’s the subject of news reporting, analysis or critique of the synthetic vocals may be allowed to remain online. The takedown system will also only be available to labels and distributors representing artists participating in YouTube’s AI experiments.

AI is being used in other areas of YouTube’s business as well, including augmenting the work of its 20,000 content reviewers worldwide and identifying new ways in which abuse and threats emerge, the announcement notes. The company says it understands bad actors will try to skirt its rules, and that it will evolve its protections and policies based on user feedback.

“We’re still at the beginning of our journey to unlock new forms of innovation and creativity on YouTube with generative AI. We’re tremendously excited about the potential of this technology, and know that what comes next will reverberate across the creative industries for years to come,” reads the YouTube blog post, jointly penned by VPs of Product Management Jennifer Flannery O’Connor and Emily Moxley. “We’re taking the time to balance these benefits with ensuring the continued safety of our community at this pivotal moment—and we’ll work hand-in-hand with creators, artists and others across the creative industries to build a future that benefits us all.”