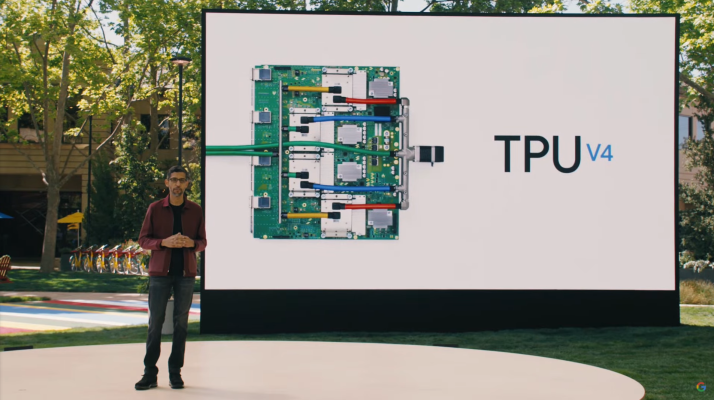

At its I/O developer conference, Google today announced the fourth generation of its custom Tensor Processing Unit (TPU) AI chips, which Google says are twice as fast as the previous version. As Google CEO Sundar Pichai noted, these chips are combined into pods of 4,096 v4 TPUs, and a single pod delivers over one exaflop of computing power.

Google, of course, uses the custom chips to power many of its own machine learning services, but it will also make this latest generation available to developers as part of its Google Cloud platform.

“This is the fastest system we’ve ever deployed at Google and a historic milestone for us,” Pichai said. “Previously to get an exaflop you needed to build a custom supercomputer, but we already have many of these deployed today and will soon have dozens of TPUv4 pods in our data centers, many of which will be operating at or near 90% carbon-free energy. And our TPUv4 pods will be available to our cloud customers later this year.”

The TPUs were among Google’s first custom chips. While others, including Microsoft, opted for more flexible FPGAs to power their machine learning services, Google made an early bet on custom chips. These take longer to develop, and they can quickly become outdated as technologies change, but they can deliver significantly better performance.