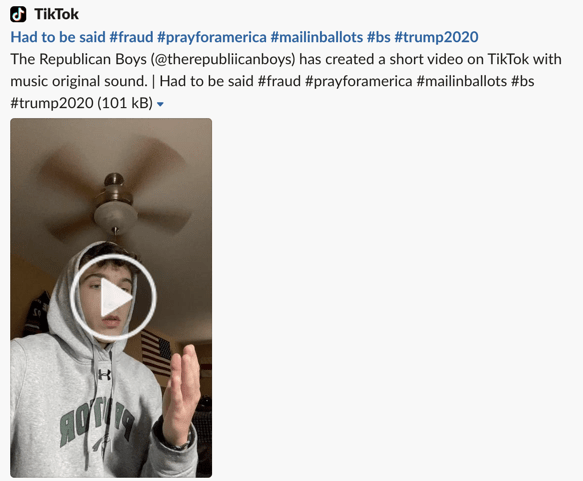

TikTok confirmed today that it has taken down videos spreading election misinformation that had been posted to two high-profile Republican-supporting accounts, The Republican Hype House and The Republican Boys. The accounts, popular with young conservative voters, have more than a million followers combined and could reach even more users who find their videos through other means, such as hashtags, shares or algorithmic recommendations.

The videos, which had made claims of “election fraud,” were first spotted by Taylor Lorenz, a reporter for The New York Times.

Though TikTok had committed to addressing election misinformation on its network, it was initially unclear to what extent it would challenge video content in cases such as this. As it turned out, however, the company acted fairly quickly to take down the disputed videos, responding to Lorenz's tweet in less than an hour to confirm their removal.

Reached for comment, TikTok also confirmed to TechCrunch that it had removed the videos in question for violating its policies against misleading information, but didn't comment further on the decision.

This is not the first time The Republican Hype House has been penalized by TikTok for spreading political misinformation. In August, Media Matters noted that it, along with another conservative TikTok account, had published a deceptively edited clip of Democratic presidential candidate Joe Biden. Before that, the account had also been involved in spreading a conspiracy theory related to the Black Lives Matter (BLM) movement.

As TechCrunch earlier reported, the close U.S. election results have plunged social media platforms into a battle against misinformation and conspiracies. On platforms like Facebook, Twitter and now TikTok, misinformation can go viral quickly, reaching hundreds, thousands or even millions of users before the platforms react.

Twitter, for example, has already hidden multiple tweets from President Trump behind warning labels today under its policies on election-related misinformation. Facebook has also labeled Trump's posts and displayed in-app notifications reminding users that the election results weren't yet final because votes were still being counted.

Image Credits: Screenshot of a banned video on TikTok, courtesy of Media Matters

Less attention has been paid to TikTok, however, despite its reach of around 100 million monthly active U.S. users, a largely younger demographic that collectively posts some 46 million videos daily.

The U.S. elections have been one of the first big tests of TikTok’s ability to quickly enforce its misinformation policies.

Of note, TikTok's future in the U.S. may also hinge on whether Trump, who banned the Chinese-owned video app citing national security concerns, remains in power once all the votes are counted.