It’s hard enough for a grown human to figure out how to navigate a crowd sometimes — so what chance does a clumsy and naive robot have? To prevent future collisions and awkward “do I go left or right” situations, Stanford researchers are hoping their “Jackrabbot” robot can learn the rules of the road.

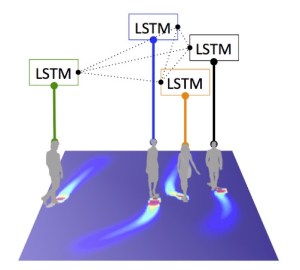

The team, part of the Computational Vision and Geometry Lab, has already been working on computer vision algorithms that track and aim to predict pedestrian movements. But the rules are so complex, and subject to so many variations depending on the crowd, the width of the walkway, the time of day, whether there are bikes or strollers involved — well, like any machine learning task, it takes a lot of data to produce a useful result.

Furthermore, the algorithm they are developing is intended to be based entirely on observed data as interpreted by a neural network; no tweaking by researchers adding cues obvious to them (“in this situation, a person will definitely go left”) is allowed.

Their efforts so far are detailed in a paper the team will present at CVPR later this month. The motion prediction algorithm they’ve created outperforms many others, and the model has learned some of the intricacies of how groups of people interact.

At present the gaily dressed Jackrabbot is manually controlled while the researchers work on how to integrate their model with the robot’s senses. It’s a modified Segway RMP210, equipped with stereo cameras and a laser scanner, as well as GPS. It won’t get the bird’s-eye view that the training data had access to, but if it knows speeds and distances, it should be able to work out the coordinates of individuals in space and predict their motion with similar accuracy.
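For the technically curious, here is a rough Python sketch of that idea. It rests on several assumptions: the robot knows its own position and heading (say, from GPS and odometry), and each person is detected as a range and bearing relative to the robot, as a laser scanner might report. The function names are hypothetical. For prediction it uses simple constant-velocity extrapolation, a common baseline in the field. That is a stand-in for illustration only, not the team’s learned neural network model.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def to_world(robot_x: float, robot_y: float, robot_heading: float,
             rng: float, bearing: float) -> Point:
    """Convert a range/bearing detection (robot frame, radians) into world coordinates.

    Hypothetical helper: assumes a flat 2D world and a known robot pose.
    """
    angle = robot_heading + bearing
    return (robot_x + rng * math.cos(angle), robot_y + rng * math.sin(angle))


def predict_constant_velocity(prev: Point, curr: Point, dt: float,
                              steps: int) -> List[Point]:
    """Extrapolate a pedestrian's path assuming they keep their current velocity.

    prev and curr are world positions observed dt seconds apart; returns the
    predicted positions at dt, 2*dt, ..., steps*dt after the latest observation.
    """
    vx = (curr[0] - prev[0]) / dt
    vy = (curr[1] - prev[1]) / dt
    return [(curr[0] + vx * dt * k, curr[1] + vy * dt * k)
            for k in range(1, steps + 1)]


if __name__ == "__main__":
    # Robot parked at the origin facing along +x; a person seen 2 m straight
    # ahead, then half a second later slightly to the left.
    p0 = to_world(0.0, 0.0, 0.0, 2.0, 0.0)
    p1 = to_world(0.0, 0.0, 0.0, math.hypot(2.0, 0.5), math.atan2(0.5, 2.0))
    print(predict_constant_velocity(p0, p1, dt=0.5, steps=3))
```

A model like this has no notion of other people, which is exactly the gap the researchers’ socially aware model is meant to fill: a constant-velocity walker will happily predict a path straight through someone else.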

“We are planning to integrate our socially aware forecasting model in the robot during summer and have a real-time demo by the end of the year,” wrote researcher Alexandre Alahi in an email to TechCrunch.

Robots that can navigate human spaces are starting to become, if not everyday encounters, at least somewhere on the near side of sci-fi. But the things we do continually without thinking — scan our surroundings, evaluate obstacles and actors in the scene, and plan accordingly — are very difficult for a computer to do.

Projects like this one add to a pile of research that will, eventually, allow robots to move through houses and cities much like we do: quickly, safely, and with consideration for others. Just as autonomous cars will completely change the streets of our cities, autonomous pedestrian robots (humanoid or not) will change the sidewalks.