Azure Media Services, Microsoft’s collection of cloud-based tools for video workflows, is about to get a lot smarter. As the company announced at the annual NAB show in Las Vegas today, Media Services will now apply some of the tools Microsoft developed for its machine learning services to video as well.

This means Media Services can now automatically select the most interesting snippets from a source video, for example, to give you a quick summary of what the full video looks like.

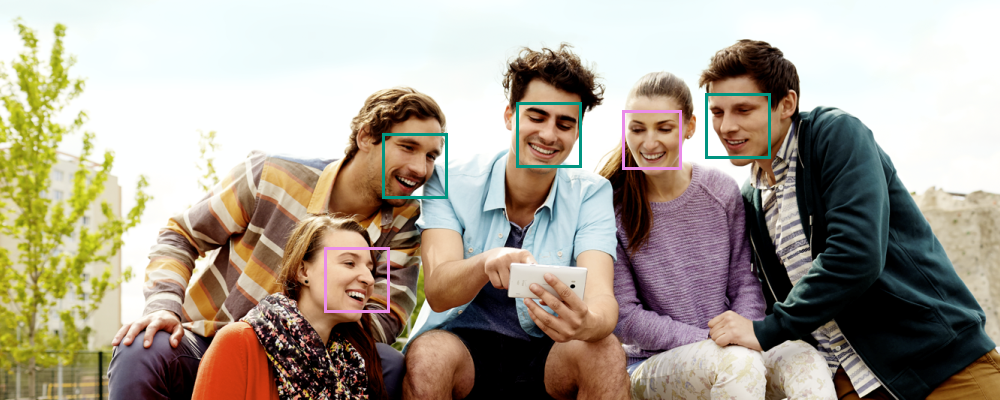

In addition, Microsoft is building face detection into these tools and the company is including its ability to detect people’s emotions (something the company’s Cognitive Services already do for still images). Using this, you could easily see how people reacted to a speech at an event, for example. If your keynote goes on for too long, you will probably see people’s emotions go from happiness to indifference and then to sadness and contempt.

Microsoft is also now building Hyperlapse, its technology for stabilizing and time-lapsing videos, right into Azure Media Services. This feature was already available as a public preview, but at the time, it limited users to videos with up to 10,000 frames. This limit is now gone.

Other new features include motion detection, an improved speech-to-text indexer that can now understand six additional languages (for a total of eight), and optical character recognition for text displayed in videos (which would be great for search engines, for example).