Toronto photo-sharing startup 500px is reporting today that both of its applications, 500px for iOS and its recent acquisition ISO500, have been pulled from the Apple App Store due to concerns about nude photos. Combined, the apps have over 1 million downloads, 500px COO Evgeny Tchebotarev tells us. The flagship application has just under a million downloads, while ISO500 has a little over 200,000, he says.

The apps were pulled from the App Store this morning around 1 AM Eastern, and had completely disappeared by noon today. The move came shortly after last night’s discussions with Apple related to an updated version of 500px for iOS, which was in the hands of an App Store reviewer.

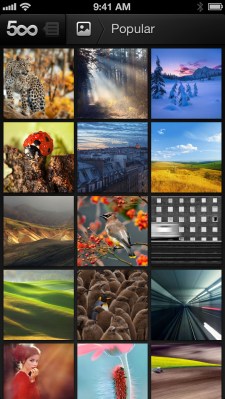

The Apple reviewer told the company that the update couldn’t be approved because it allowed users to search for nude photos in the app. This is correct to some extent, but 500px had actually made it tough to do so, explains Tchebotarev. New users couldn’t just launch the app and locate the nude images, he says, the way they can today on other social photo-sharing services such as Instagram or Tumblr. Instead, the app defaulted to a “safe search” mode in which these types of photos were hidden. To turn off safe search, users had to visit 500px’s desktop website and make an explicit change.

Tchebotarev said the company did this because it doesn’t want kids or others to come across these nude photos unwittingly. “Some people are mature enough to see these photos,” he says, “but by default it’s safe.”

More important, perhaps, is the fact that the “nude” photos on 500px aren’t necessarily the same types of nude images users may find on other photo-sharing communities. That is, they’re not pornographic in nature. “We don’t allow pornographic images. If something is purely pornographic, it’s against our terms and it’s deleted,” Tchebotarev notes.

Instead, he says that most of the nudes you’ll find on 500px, whose community tends to include professional photographers and other photo enthusiasts, are of an “artistic” nature. “It’s not about pornography, it’s about fine art,” he insists.

Currently, 500px relies on the community to identify the inappropriate images that appear, but it has technology in development which will work something like facial recognition for nudity. That means it will be able to automatically identify and tag images of nudes, so they’ll never appear in search. Although this tech isn’t ready today, nude photos – categorized or not – don’t appear in the app’s main screen because uncategorized images of all sorts are hidden from the apps’ new users, Tchebotarev says.

The company told Apple yesterday that it could make a change to its apps to address the issue, and that this would also automatically take care of the problem in the dozen or so third-party applications using its API, which include big names like Flipboard and Google Currents. However, Apple wouldn’t wait for the change, which was expected to take a day, and pulled the apps.

“The app was in the App Store for 16 months – since October 2011. Can you imagine that?” Tchebotarev exclaims. The app was updated in November with a gorgeous new interface, but no changes were made which would make it easier to find nude images. He says the changes 500px promised Apple should be done now and are being submitted immediately. Hopefully, it’s enough for these apps to return.

User reaction, as expected, is a bit incredulous, considering how easy it is to find nudity in other apps, including in all web browsers.

Update: Apple has provided an official statement on why 500px was removed from the App Store:

“The app was removed from the App Store for featuring pornographic images and material, a clear violation of our guidelines. We also received customer complaints about possible child pornography. We’ve asked the developer to put safeguards in place to prevent pornographic images and material in their app.”