Today, Google is launching one of its most ambitious and interesting updates to its search engine in recent months. Over the next few days, you will start to see large panels with additional factual information about the topic you searched for take over the right side of Google’s search result pages. The panels are powered by what Google calls its new “Knowledge Graph,” and they will serve two different functions. Google will use this space to show you a summary of relevant information about your query (think biographical data about celebrities and historical figures, tour dates for artists, and information about books, works of art, buildings, animals, etc.) as well as a list of related topics. In addition, Google will now let you clarify what exactly you are looking for and will use these boxes for disambiguation. Thanks to this, you will soon be able to tell Google that you were looking for the L.A. Kings ice hockey team and not the Sacramento Kings when you searched for ‘kings.’

The company has actually been working on the semantic technology that drives the Knowledge Graph for quite a few years, but this specific project, Google told me earlier this week, has been in the works for about the last two years. During this time, the company has been working hard on building the vast database of structured knowledge that powers the features it is launching today (though Google’s acquisition of Freebase …). The Knowledge Graph database currently holds information about 500 million people, places and things. More importantly, though, it also indexes over 3.5 billion defining attributes and connections between these items.

“Strings to Things”

As Google Fellow Ben Gomes told me yesterday, the company really wants to move beyond just understanding the characters you are typing into its search engine to getting a better understanding of what it is you are really looking for (“strings to things” is what Gomes likes to call it). To do this, Google is using both its own and other freely available sources, such as Wikipedia, the CIA World Factbook, its own Freebase product, Google Books, online event listings and other data it crawls. It is also using some commercial datasets, though Google wouldn’t reveal which companies specifically it is working with here.

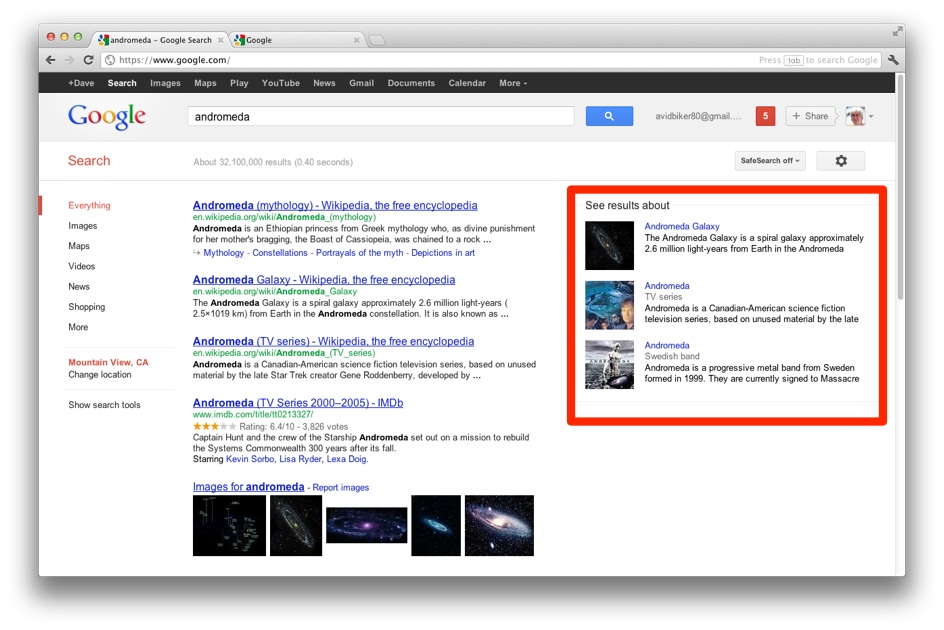

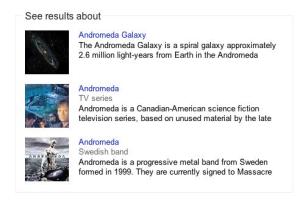

Here is what this will look like in practice. Google is currently pretty good at understanding general search queries, but some terms are just too ambiguous. When you search for ‘andromeda,’ for example, it simply can’t know whether you are searching for the TV series, the galaxy or the Swedish progressive metal band. Now, whenever you type in one of these queries, Google will show you a box on the right side of the screen that lets you tell it which of these topics you meant. Once you pick a topic, the search result page will reload and show you results related to that topic.

So if you were looking for the TV show Kings, the search result page will show you images related to the show, the right Wikipedia entry and links to episodes that are available for online streaming. If you were looking for the Sacramento Kings, though, you will get the latest box scores and other information related to the basketball team.

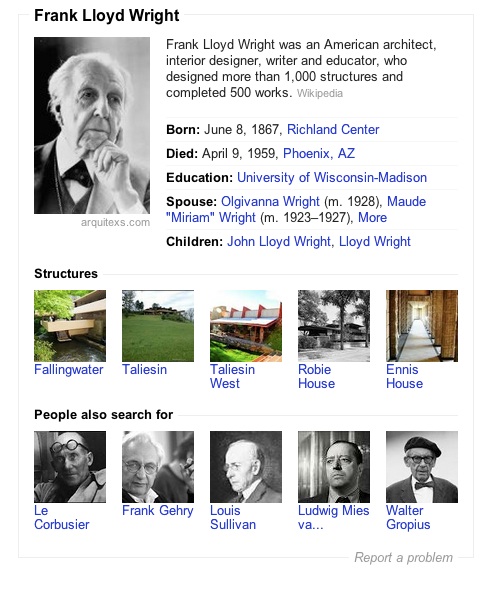

That’s only one part of what the Knowledge Graph now allows Google to do. The second part involves Google’s new automatically generated topic summaries, which appear when you search for a topic that’s well defined in the Knowledge Graph. Say you search for the architect Frank Lloyd Wright, for example. Instead of having to click through to Wikipedia to find out when he was born, you will now see his biographical data right there on the search result page. As Gomes told me, Google knows which facts people usually search for about a given person, place or event, so these summaries will also highlight those facts.

According to Gomes, you will see these summaries about as often as you currently see Google Maps in your search results. To put this into perspective (and sadly we couldn’t get Google to give us more concrete numbers), this launch is significantly bigger than the entire Universal Search launch, which was one of the company’s largest launches in this field.

What makes these summaries even more interesting, though, is the fact that they also highlight other relevant information. For Frank Lloyd Wright, for example, the summary will give you links to some of his most famous projects, as well as a short list of related people Google’s users tend to search for. Click on these, and you will get to their respective summaries. Inside the summaries, Google will also highlight other entries that you can use to dig deeper (family members, band members, albums, schools, a TV show’s director etc.). This, says Google, will allow you to search more naturally across a topic.

Google is aggregating this data from a wide variety of sources. At the top of each panel, it will typically feature a short summary from Wikipedia or a similar service and link specifically to that source. For the rest of the data, though, it will often just draw on its own Knowledge Graph database and not link to where it found, for example, a person’s birth date.

In case Google gets something wrong, by the way, you can report errors with just a few clicks.

Looking Ahead

Google, of course, has been adding bits and pieces of semantic search smarts to its search engine over the last few years (and so has Microsoft after its acquisition of Powerset). With Google Squared, one of its recently shelved experiments, it also once launched a pretty ambitious project to understand information on the web and then display it in a table (some of this technology likely lives on in the Knowledge Graph now). Today’s launch, however, represents Google’s most ambitious move in this direction.

As Gomes told me, now that Google’s algorithms have access to this structured data and can understand it better, the next step will be to understand more complex questions like “Where can I attend a Lady Gaga concert in warm outdoor weather?” For now, though, it is worth noting that this update isn’t so much about natural language processing and answering questions as about displaying relevant data in …

It’ll be interesting to see how this new feature will influence how people search and what links they click on. I wouldn’t be surprised if this had quite a negative influence on traffic to Wikipedia, for example. At the same time, though, the disambiguation feature may just help drive more relevant traffic to the sites Google links to as well.