Social media service Snap said on Tuesday that it plans to add watermarks to AI-generated images on its platform.

The watermark is a translucent version of the Snap logo with a sparkle emoji, and it will be added to any AI-generated image exported from the app or saved to the camera roll.

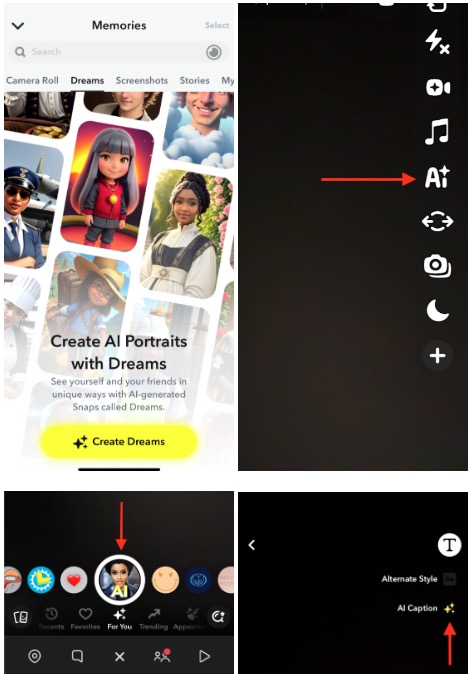

The watermark, which is Snap’s logo with a sparkle, denotes AI-generated images created using Snap’s tools. Image Credits: Snap

On its support page, the company said removing the watermark from images violates its terms of use. It’s unclear how Snap will detect the removal of these watermarks. We have asked the company for more details and will update this story when we hear back.

Other tech giants like Microsoft, Meta and Google have also taken steps to label or identify images created with AI-powered tools.

Currently, Snap allows paying subscribers to create or edit AI-generated images using Snap AI. Its selfie-focused feature, Dreams, also lets users spice up their pictures with AI.

In a blog post outlining its safety and transparency practices around AI, the company explained that it marks AI-powered features, such as Lenses, with a visual indicator that resembles a sparkle emoji.

Snap lists indicators for features powered by generative AI. Image Credits: Snap

The company said it has also added context cards to AI-generated images created with tools like Dreams to better inform users.

In February, Snap partnered with HackerOne to adopt a bug bounty program aimed at stress-testing its AI image-generation tools.

“We want Snapchatters from all walks of life to have equitable access and expectations when using all features within our app, particularly our AI-powered experiences. With this in mind, we’re implementing additional testing to minimize potentially biased AI results,” the company said at the time.

Snapchat’s efforts to improve AI safety and moderation come after its “My AI” chatbot spurred controversy upon launch in March 2023, when some users managed to get the bot to talk about sex, drinking and other potentially unsafe subjects. The company later rolled out controls in the Family Center that let parents and guardians monitor and restrict their children’s interactions with AI.