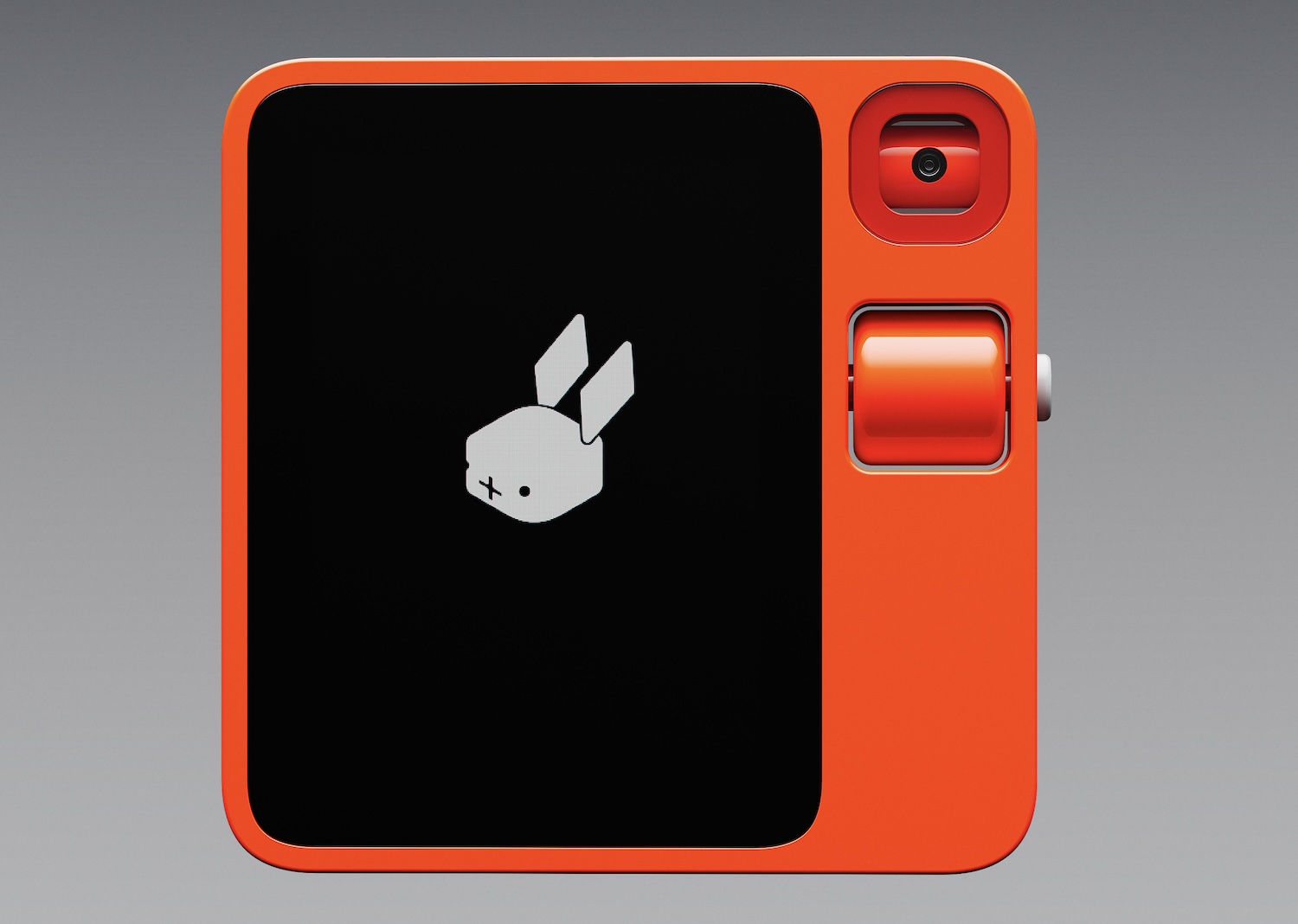

In a sea of AI-enabled gadgets at CES, the rabbit r1 (all lowercase, they insist) stands out not just for its high-vis paint job and unique form factor, but because of its dedication to the bit. The company is hoping you’ll carry a second device around to save yourself the trouble of opening your phone — and has gone to extraordinary technical lengths to make it work.

The idea behind the $200 r1 is simple: It lets you keep your phone in your pocket when you need to do some simple task like ordering a car to your location, looking up a few places to eat where you’re meeting friends or finding some lodging options for a weekend on the coast.

“We’re not trying to kill your phone,” said CEO and founder Jesse Lyu on a call with press ahead of the Las Vegas tech show. “The phone is an entertainment device, but if you’re trying to get something done it’s not the highest efficiency machine. To arrange dinner with a colleague we needed four-five different apps to work together. Large language models are a universal solution for natural language, we want a universal solution for these services — they should just be able to understand you.”

Instead of pulling out your phone, unlocking it, finding the app, opening it and working your way through the UI (so laborious!), you pull out the r1 instead and give it a command in natural language:

“Call an Uber XL to take us to the Museum of Modern Art.”

“Give me a list of five cheap restaurants within a 10-minute walk of there.”

“List the best reviewed cabins for six adults on Airbnb within 10 miles of Seaside, nothing more than $300 a night.”

The r1 does as you bid it and a few seconds later provides confirmation and any content you might have requested.

Sounds familiar, doesn’t it? After all, that’s what our so-called “AI assistants” have supposedly been doing for the last five or six years. “Siri, do this,” “Hey Google, do that.” You’re right! But there’s a single massive difference.

Siri and Google Assistant and Alexa and all the rest would be better described as “voice interfaces for custom mini-apps,” not at all like the language models many of us have begun chatting with over the last year. When you tell Google to fetch you a Lyft to your current location, it uses the official Lyft API to send the relevant information and gets a response back — it’s basically just two machines talking to one another.

Not that there’s anything wrong with that — but what you can do via API is often very limited. And of course there has to be an official relationship between the assistant and the app, an approved and paid-for connection. If an app you like doesn’t work with Siri, or the API Alexa has access to is outdated, you’re just out of luck. And what about some niche app too small to get an official deal with Google?

What rabbit has designed is more along the lines of the “agent” type AIs we’ve seen appear over the last year, machine learning models that are trained on ordinary user interfaces like websites and apps. As a result, they can order a pizza not through some dedicated Domino’s API, but the same way a human would: by clicking on ordinary buttons and fields on an ordinary web or mobile app.

Image Credits: rabbit

The company trained its own “large action model” or LAM on countless screenshots and video of common apps, and as a result when you tell it to play an older Bob Dylan album on Spotify, it doesn’t get lost halfway. It knows to go to Dylan’s artist page, organize the albums by release date, scroll down and queue up one of the oldest. Or however you do it.
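To make the distinction concrete, here is a minimal sketch of the Dylan example as a sequence of ordinary UI steps rather than a single API call. Everything in it is hypothetical: `ToySpotifyUI`, its methods and `play_older_album` are illustrative stand-ins, not rabbit's LAM, its emulated environment or anything from Spotify. A real agent would have to infer these steps from what is on screen, whereas here they are simply hard-coded.

```python
from dataclasses import dataclass, field


@dataclass
class Album:
    title: str
    year: int


@dataclass
class ToySpotifyUI:
    """Hypothetical stand-in for an app screen (not rabbit's or Spotify's code)."""
    albums: list
    queue: list = field(default_factory=list)
    log: list = field(default_factory=list)

    def open_artist_page(self, artist: str) -> None:
        # Stands in for tapping through search results to the artist page.
        self.log.append(f"open artist page: {artist}")

    def sort_albums_by_release_date(self) -> None:
        # Stands in for tapping a "sort by release date" control (oldest first).
        self.albums.sort(key=lambda album: album.year)
        self.log.append("sort albums by release date")

    def queue_album(self, index: int) -> Album:
        # Stands in for scrolling to an album and pressing "add to queue".
        album = self.albums[index]
        self.queue.append(album)
        self.log.append(f"queue album: {album.title}")
        return album


def play_older_album(ui: ToySpotifyUI, artist: str) -> Album:
    """Hard-coded action sequence; an agent model would have to infer it."""
    ui.open_artist_page(artist)
    ui.sort_albums_by_release_date()
    return ui.queue_album(0)  # the oldest album after sorting


if __name__ == "__main__":
    ui = ToySpotifyUI(albums=[
        Album("Time Out of Mind", 1997),
        Album("Bob Dylan", 1962),
        Album("Blood on the Tracks", 1975),
    ])
    picked = play_older_album(ui, "Bob Dylan")
    print(picked.title)
    print(ui.log)
```

The point of the sketch is only that the task breaks down into button presses any user could make, which is why no official integration is needed for the approach to work.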

You can see the process in rabbit’s video here.


It already knows how to work with a bunch of common apps and services, but if you have one it doesn’t know, rabbit claims the r1 can learn just by watching you use the app for a bit, though this teaching mode won’t be available at launch. (Lyu said they got it working in Diablo 4, so it can probably handle AllTrails.)

But of course the r1 can’t actually press those buttons in the app on its own — for one thing, it doesn’t have any fingers to press them with, and for another, it doesn’t have an account. For the second problem, rabbit set up what it calls “rabbit hole,” a platform where you activate services with your login credentials, which are not saved. After they’re active, the server operates the app using ordinary button presses just like you might, but in an emulated environment of some kind (they were not super specific about this).

“Think of it like passing your phone to your assistant,” said Lyu, generously assuming we are all familiar with that particular convenience. “All we do is have this thing press buttons for you. And all they see in their back end is you trying to do things. It’s perfectly legal and within their terms of service.”

Smaller, cheaper, faster

The company clearly put a lot of work into the technical side, but the real question is whether anyone will actually want to carry this thing around in addition to a phone. It’s priced at $200, with no subscription, though you’ll need to provide a SIM card. That’s cheaper than AirPods, and it does make a lot of fun promises.


One thing it clearly has going for it is the look. It's as if the Playdate had a startup founder cousin who drove a bright red Tesla with vanity plates (you know the type). It was designed by Teenage Engineering, who make just about everything worth having these days.

You may ask, why is there a screen on something you are supposed to talk to? Well, the screen is needed to show you visual stuff like the results of its searches, or confirming your location. I’m of two minds here. One thinks, well how else are you gonna do it? The other thinks, if you need to confirm all this stuff in the first place why not just use the phone in your other pocket?

Clearly the crew at rabbit thinks that popping this small (3″x3″x0.5″) and light (115 grams) gadget up and saying what you want, then using the scroll wheel and button to navigate the results, is a simpler experience than using the app in many cases. And I can see how that might be true — many apps are poorly designed and now also have the added peril of ads.

But why the camera? That’s one feature I couldn’t quite get a straight answer about. It’s got an interesting magnetic/free-floating axle so it spins to be level and pointing whichever direction you want. There seem to be some features coming down the pipe that aren’t quite ready to roll yet — think “how many calories is in this bag of candy?” or “who designed this building?” and that kind of thing. Video calls and social media may be forthcoming.

The device is available for preorder now, and Lyu said they aim to ship to the U.S. at the end of March.

Scary competition

The big question at the end of the day, however, is not whether the rabbit r1 succeeds at what it sets out to do — from what I can tell, it does — but whether that approach is a viable one in the face of extremely powerful competition.

Google, Apple, Microsoft, OpenAI, Anthropic, Amazon, Meta — each of them and many more are working hard to create more powerful machine learning agents every day. The biggest danger to rabbit isn’t that no one will buy it, but that in six months, a hundred-billion-dollar company makes its own action agent that does 80% of what the rabbit does and makes it accessible for free on your smartphone.

I asked Lyu if this was a worry for him and his company, which with 17 employees isn’t quite at the same scale.

“Of course we’re worried,” he replied. “We’re a startup. But just because they can do it doesn’t mean we need to stop.”

He pointed out that despite their vast resources, these companies lack both the agility of a startup, which is shipping today what they might ship only part of later, and the data. Language models, he pointed out, are “based on an open recipe: five papers, that’s it.” There’s little opportunity to create a moat there. But rabbit’s LAM is built on proprietary data and is aimed at a very specific user experience on a very specific device.

Even so, even if the rabbit r1 is better or cuter, people prefer simplicity and convenience. Will they pay money to carry a second device when their first one does most of those tasks? In the short term, the answer is yes: Lyu said preorders are stacking up. Will rabbit live to produce the next generation, presumably the r2? Even if they don’t, this hot little device may live on in our memory as a suitably ambitious exemplar of the AI hype zeitgeist.