The dust may be starting to settle on the OpenAI drama of the weekend (Sam Altman is out and headed to Microsoft, the company is in revolt, people are having a change of heart, and more), so it’s time to sit down and parse the politics of the situation.

Tech folks often eschew talking about politics, dismissing it as a distraction or mistake. But several political perspectives are shaping quite a lot of the work on artificial intelligence, and so we need to understand them, especially now.

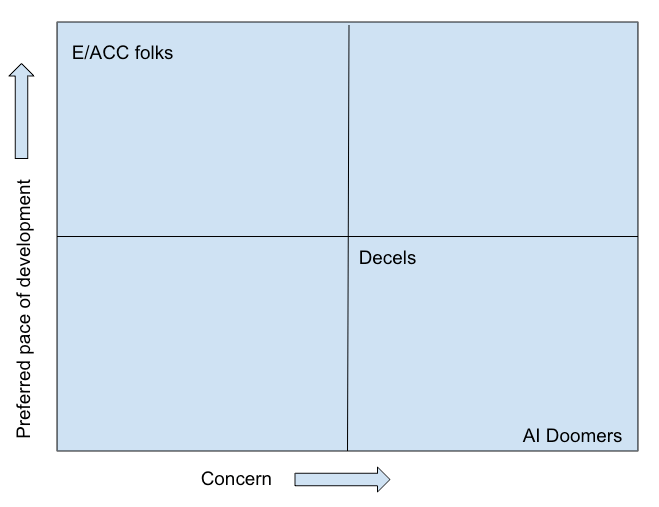

The political perspectives currently shaping AI development lie along two axes: speed and concern. Speed is how quickly different groups want AI technology to progress. As for concern, some folks worry about what we will miss out on if AI development slows, while others worry that things could move too quickly and argue that we should be careful about what is being built and how we share and deploy it. Then there are people who think we are digging our own grave with new AI tech.

I’ve put a few terms into a matrix for us:

Image Credits: Alex Wilhelm/TechCrunch

This chart is imperfect and simplistic, but it’s useful as a lightweight summary.

One key group is the E/ACC crew. Short for “effective accelerationism,” E/ACC is a reference to, and a retort of sorts against, “effective altruism,” which rose and fell in popularity along with former FTX CEO Sam Bankman-Fried. The EA idea, per the Centre for Effective Altruism, is to “find the best ways to help others, and put them into practice.” So much for that.

E/ACC is different. A recent manifesto by a16z can help us frame our thinking:

> We believe in accelerationism — the conscious and deliberate propulsion of technological development — to ensure the fulfillment of the Law of Accelerating Returns. To ensure the techno-capital upward spiral continues forever.

The venture firm added the following in that manifesto:

> Our present society has been subjected to a mass demoralization campaign for six decades — against technology and against life — under varying names like “existential risk,” “sustainability,” “ESG,” “Sustainable Development Goals,” “social responsibility,” “stakeholder capitalism,” “Precautionary Principle,” “trust and safety,” “tech ethics,” “risk management,” “de-growth,” “the limits of growth.”

These paragraphs help us arrive at a good summary of E/ACC: The deliberate pushing forward of society through aggressive technological innovation, largely or completely unconstrained by worries over its near-term impacts on society. The argument in favor of E/ACC is that no matter what disruptions new tech brings to society, the long-term benefits are so great that they outweigh caution.

Another group of tech folks who matter in the AI debate are sometimes called the “decels.” Here’s how the a16z manifesto described this group:

> Our enemy is deceleration, de-growth, depopulation — the nihilistic wish, so trendy among our elites, for fewer people, less energy, and more suffering and death.

Of course, decels are often denizens of the tech world, too. For example, earlier this year, a group of tech figures, including Elon Musk, signed an open letter calling for a pause on training AI systems more powerful than GPT-4. It asked:

> Should we let machines flood our information channels with propaganda and untruth? Should we automate away all the jobs, including the fulfilling ones? Should we develop nonhuman minds that might eventually outnumber, outsmart, obsolete and replace us? Should we risk loss of control of our civilization? Such decisions must not be delegated to unelected tech leaders. Powerful AI systems should be developed only once we are confident that their effects will be positive and their risks will be manageable.

The decels are still in favor of building new AI tech; they just want to be more careful about it.

To the E/ACC crowd, this perspective amounts to slowing critical progress and, by extension, the arc of humanity.

For the AI doomers, the decels are akin to attempts to build a third American political party: ineffective at best and insidious at worst. AI doomers exist in tech circles, too, but because there’s little money to be made from doomerism (apart from writing good fiction), it’s more an academic posture than a position tech companies are taking.

So when you read commentary on the OpenAI saga from folks in tech, it’s worth finding out what they think about AI development in general. As far as I can tell, E/ACC is winning the current conversation, mostly because of how quickly AI tech is advancing and the fact that a huge proportion of the economy wants to use AI to save money and do more.

So, there’s a massive economic tailwind blowing on the back of the E/ACC crowd.

The decels, in contrast, just ousted Sam Altman from his perch atop OpenAI, which left the tech and venture camps incensed. Microsoft is putting its dollars behind Altman, so the decel hope of “build something safe or not at all” has pretty much failed.

We’ll have more on this, but I hope the above helps you frame your own thoughts about these events.