Much in the same way as companies adapt their software to run across different desktop, mobile and cloud operating systems, businesses also need to configure their software for the fast-moving AI revolution, where large language models (LLMs) have emerged to serve powerful new AI applications capable of interpreting and generating human-language text.

While a company can already create an “LLM-instance” of their software based on their current API documentation, the problem is that they need to ensure that the broader LLM ecosystem can use it properly — and get enough visibility into how well this instance of their product actually works in the wild.

And that, effectively, is what Tidalflow is setting out to solve, with an end-to-end platform that enables developers to make their existing software play nice with the LLM ecosystem. The fledgling startup is emerging out of stealth today with $1.7 million in a round of funding co-led by Google’s Gradient Ventures alongside Dig Ventures, a VC firm set up by MuleSoft founder Ross Mason, with participation from Antler.

Confidence

Consider this hypothetical scenario: An online travel platform decides it wants to embrace LLM-enabled chatbots such as ChatGPT and Google’s Bard, allowing its customers to request airfares and book tickets through natural language prompts in a search engine. So the company creates an LLM-instance for each, but for all they know, 2% of ChatGPT results serve up a destination that the customer didn’t ask for, an error rate that might be even higher on Bard — it’s just impossible to know for sure.

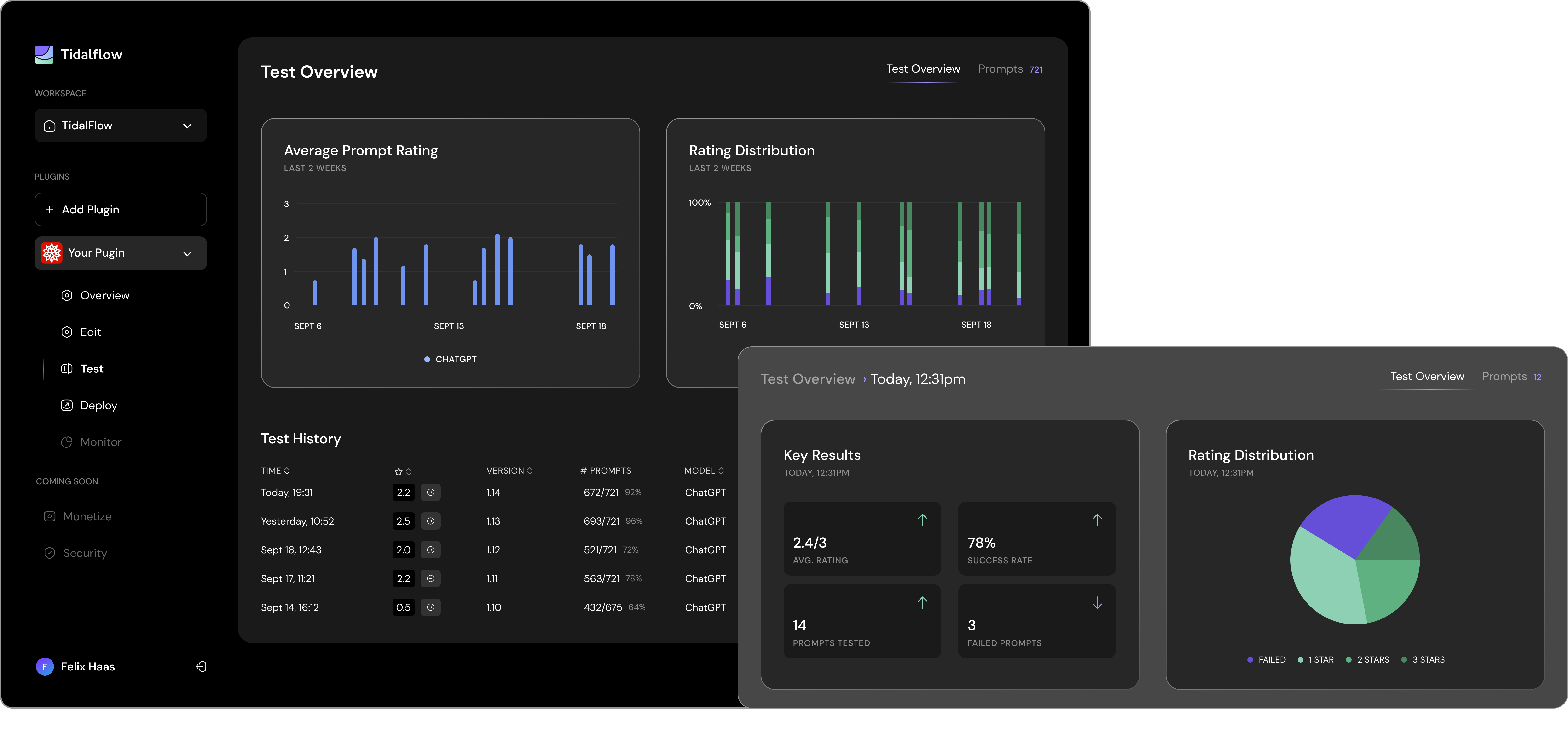

Now, if a company has a fail tolerance of less than 1%, they might just feel safer not going down the generative AI route until they have greater clarity on how their LLM-instance is actually performing. This is where Tidalflow enters the fray, with modules that help companies not only create their LLM-instance, but test, deploy, monitor, secure and, eventually, monetize it. They can also fine-tune the LLM-instance of their product for each ecosystem in a local, simulated sandbox environment until they arrive at a solution that meets their fail-tolerance threshold.

“The big problem is, if you launch on something like ChatGPT, you actually don’t know how the users are interacting with it,” Tidalflow CEO Sebastian Jorna told TechCrunch. “This lack of confidence in the reliability of their software is a major roadblock to rolling out software tooling into LLM ecosystems. Tidalflow’s testing and simulation module builds that confidence.”

Tidalflow can perhaps best be described as an application lifecycle management (ALM) platform that companies plug their OpenAPI specification / documentation into. And out the other end Tidalflow spits out a “battle-tested LLM-instance” of that product, with the front-end serving up monitoring and observability of how that LLM-instance will perform in the wild.

“With normal software testing, you have a specific number of cases that you run through — and if it works, well, the software works,” Jorna said. “Now, because we’re in this stochastic environment, you actually need to throw a lot of volume at it to get some statistical significance. And so that is basically what we do in our testing and simulation module, where we simulate out as if the product is already live, and how potential users might use it.”
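To put rough numbers on Jorna's point about volume, here is a minimal Python sketch. It is an illustration only, not Tidalflow's method: the function names, the choice of a Wilson score interval and the 95% confidence level are all assumptions. It asks whether a batch of simulated runs is large enough to claim, with some confidence, that the true failure rate sits below a 1% tolerance.

```python
import math


def wilson_upper_bound(failures: int, trials: int, z: float = 1.96) -> float:
    """Upper end of the Wilson score interval for a failure rate.

    z = 1.96 corresponds to roughly 95% confidence (an assumed choice).
    """
    if trials <= 0:
        raise ValueError("trials must be positive")
    p_hat = failures / trials
    denom = 1 + z**2 / trials
    centre = p_hat + z**2 / (2 * trials)
    margin = z * math.sqrt(p_hat * (1 - p_hat) / trials + z**2 / (4 * trials**2))
    return (centre + margin) / denom


def meets_tolerance(failures: int, trials: int, tolerance: float = 0.01) -> bool:
    """True only if even the pessimistic estimate is below the tolerance."""
    return wilson_upper_bound(failures, trials) < tolerance


# Hypothetical simulation results: (failures, simulated requests)
for failures, trials in [(0, 100), (0, 1000), (5, 1000), (50, 10000)]:
    print(failures, trials, meets_tolerance(failures, trials))
```

Under these assumptions, 100 clean runs are not enough to support a sub-1% claim, while 1,000 clean runs are. An observed failure rate of 0.5% fails the check at 1,000 runs but passes at 10,000, which is the sense in which a stochastic system needs volume before its results mean much.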

Tidalflow dashboard. Image Credits: Tidalflow

In short, Tidalflow lets companies run through myriad edge cases that may or may not break their fancy new generative AI smarts. This will be particularly important for larger businesses, where the risks of compromising on software reliability are simply too great.

“Bigger enterprise clients just cannot risk putting something out there without the confidence that it works,” Jorna added.

Foundation to funding

Tidalflow’s Coen Stevens (CTO), Sebastian Jorna (CEO) and Henry Wynaendts (CPO). Image Credits: Tidalflow

Tidalflow is officially three months old, with founders Jorna (CEO) and Coen Stevens (CTO) meeting through Antler’s entrepreneur-in-residence program in Amsterdam. “Once the official program started in the summer, Tidalflow became the quickest company in Antler Netherlands’ history to get funded,” Jorna said.

Today, Tidalflow claims a team of three, including its two co-founders and chief product officer (CPO) Henry Wynaendts. But with a fresh $1.7 million in funding, Jorna said the company is now actively looking to recruit for various front- and back-end engineering roles as they work toward a full commercial launch.

But if nothing else, the fast turnaround from foundation to funding is indicative of the current generative AI gold rush. With ChatGPT getting an API and support for third-party plugins, Google on its way to doing the same for the Bard ecosystem and Microsoft embedding its Copilot AI assistant across Microsoft 365, businesses and developers have a big opportunity to not just leverage generative AI for their own products, but reach a vast number of users in the process.

“Much like the iPhone ushered in a new era for mobile-friendly software in 2007, we’re now at a similar inflection point, namely for software to become LLM-compatible,” Jorna noted.

Tidalflow will remain in closed beta for now, with plans to launch commercially to the public by the end of 2023.