“You will find that 99.9% of people in this world are actually really good. When they use your product, they use it for all the right reasons. But then once in a while when something bad happens, it also feels like one too many,” Airbnb’s director of Trust Product and Operations, Naba Banerjee, said onstage at TechCrunch Disrupt 2023.

Banerjee knows what she is talking about: Not long before she joined Airbnb’s trust and safety team in 2020, the company had declared a ban on “party houses” and taken other measures after five people died at a Halloween party hosted at a house that was rented on the platform.

Since then, the executive has become a bit of a “party pooper,” in the words of a recent CNBC profile. “As the person in charge of Airbnb’s worldwide ban on parties,” the piece noted, “Naba Banerjee has spent more than three years figuring out how to battle party ‘collusion’ by users, flag ‘repeat party houses’ and, most of all, design an anti-party AI system.”

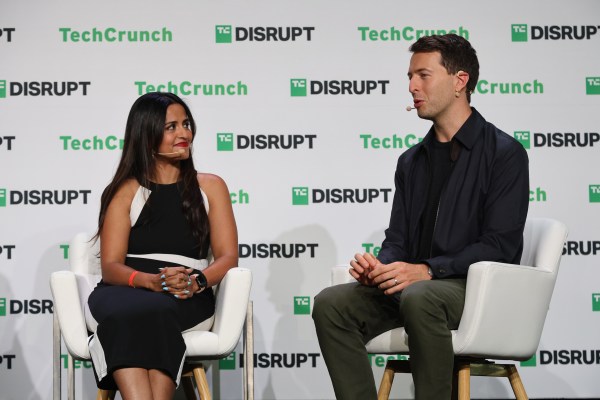

It is this AI element that I found particularly interesting to discuss on Disrupt’s Builders Stage with Banerjee and her fellow panelist, Remote CEO Job van der Voort. While Remote is growing fast, the vast majority of its users still behave exactly as expected. But even a company the size of Airbnb, it turns out, doesn’t have that much data on rule-breaking behavior.

Airbnb knew that some patterns made it much more likely that someone was looking to throw an illegal party: someone under the age of 25, booking a large place not too far from their home, and only for one night. It ended up forbidding some combinations of the above, but it wasn’t long before some users found workarounds, such as booking for two or three nights.
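To see why a fixed rule of this kind is easy to sidestep, here is a minimal sketch in Python. It is not Airbnb's actual logic: the `Booking` fields, the `is_blocked` function, and every threshold other than the age of 25 are hypothetical, and the rule shown (block only when all four patterns are present) is just one assumed combination.

```python
from dataclasses import dataclass

# Illustrative thresholds. Only the age of 25 comes from the article;
# the other two values are assumptions made for this sketch.
MIN_AGE = 25
LARGE_LISTING_GUESTS = 8      # hypothetical cutoff for a "large place"
LOCAL_DISTANCE_KM = 50.0      # hypothetical cutoff for "not too far from home"


@dataclass
class Booking:
    guest_age: int
    listing_capacity: int
    distance_from_home_km: float
    nights: int


def is_blocked(b: Booking) -> bool:
    """Block only when every risk pattern is present at once."""
    return (
        b.guest_age < MIN_AGE
        and b.listing_capacity >= LARGE_LISTING_GUESTS
        and b.distance_from_home_km <= LOCAL_DISTANCE_KM
        and b.nights == 1
    )


one_night = Booking(guest_age=22, listing_capacity=10,
                    distance_from_home_km=12.0, nights=1)
two_nights = Booking(guest_age=22, listing_capacity=10,
                     distance_from_home_km=12.0, nights=2)

print(is_blocked(one_night))   # the one-night booking matches the rule
print(is_blocked(two_nights))  # the same guest slips through with two nights
```

Because the rule keys on exact values, changing a single field is enough to pass, which is the workaround described above.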

With AI, Airbnb was able to take a much larger number of factors into account as to what might indicate a would-be party thrower — for instance, an impending birthday. But more importantly, the company found it useful to flip the detection problem on its head, just like a startup would.

“We actually have a lot of data about our good users [and] what good behavior looks like,” Banerjee explained. “We know that you check in on time, you check out in time, you leave reviews, you leave your listing really clean, you book in advance, you probably have a lot of history on the platform. So we’ve started working on understanding what great behavior looks like and combining that with our risk models, and that actually makes us a lot smarter.”
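One way to picture "combining" good-behavior signals with a risk model is the rough Python sketch below. The signals are the ones Banerjee lists, but everything else is an assumption: the `GuestHistory` type, the equal weighting, the `max_discount` parameter, and the idea of discounting a risk score are illustrative choices, not a description of how Airbnb's models work.

```python
from dataclasses import dataclass


@dataclass
class GuestHistory:
    # Signals taken from the quote; the boolean encoding is an assumption.
    checks_in_on_time: bool
    checks_out_on_time: bool
    leaves_reviews: bool
    leaves_listing_clean: bool
    books_in_advance: bool
    long_platform_history: bool


def good_behavior_score(h: GuestHistory) -> float:
    """Fraction of good-behavior signals present, from 0.0 to 1.0."""
    signals = [
        h.checks_in_on_time,
        h.checks_out_on_time,
        h.leaves_reviews,
        h.leaves_listing_clean,
        h.books_in_advance,
        h.long_platform_history,
    ]
    return sum(signals) / len(signals)


def adjusted_risk(risk_score: float, h: GuestHistory,
                  max_discount: float = 0.5) -> float:
    """Scale a risk score down for guests with a strong track record.

    risk_score is assumed to come from a separate (hypothetical) risk model
    and to lie in [0, 1]. max_discount caps how much history can offset it.
    """
    return risk_score * (1.0 - max_discount * good_behavior_score(h))


trusted = GuestHistory(True, True, True, True, True, True)
unknown = GuestHistory(False, False, False, False, False, False)

print(adjusted_risk(0.8, trusted))  # risk is discounted for a trusted guest
print(adjusted_risk(0.8, unknown))  # risk is unchanged with no history
```

The cap on the discount reflects a design choice in this sketch: a good track record lowers a risk score but never cancels it entirely.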

Sometimes, however, you can’t tell whether behavior is legit — no matter whether you’re using ML or your own instincts.

Take the case of Remote and its handling of payroll: Is an oversized bonus a sign that an employer has been hacked and that the payout will be redirected to a fraudster’s account? Not necessarily, van der Voort said; some remote workers do get huge bonuses every now and then, and are quite happy about it, thank you very much.

The trick here is not ML; it’s a phone call. “Sometimes we have to call somebody and say: ‘Did you actually mean to send somebody a bonus of this size?’” van der Voort said. “That’s something that we continue to learn and to figure out how to do effectively.”

To hear more from both of them on how to build intelligent operations that will scale with your business, watch the full panel.