Meta announced today it’s rolling out its first generative AI features for advertisers, allowing them to use AI to create backgrounds, expand images and generate multiple versions of ad text based on their original copy. The launch of the new tools follows the company’s Meta Connect event last week where the social media giant debuted its Quest 3 mixed-reality headset and a host of other generative AI products, including stickers and editing tools, as well as AI-powered smart glasses.

In the case of AI tools for the ad industry, the new products may not be as wild as the celebrity AIs that let you chat with virtual versions of people like MrBeast or Paris Hilton, but they showcase how Meta believes generative AI can assist the brands and businesses that are responsible for delivering the majority of Meta’s revenue.

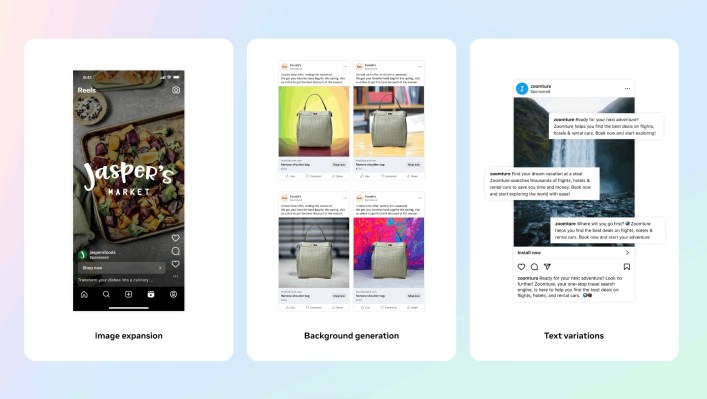

The first among the trio of new features allows an advertiser to customize their creative assets by generating multiple different backgrounds to change the look of their product images. This is similar to the technology that Meta used to create the consumer-facing tool Backdrop, which allows users to change the scene or the background of their image by using prompts. However, in the ad toolkit, the backgrounds are generated for the advertiser based on their original product images and will tend to be “simple backgrounds with colors and patterns,” Meta explains. The feature is available to those advertisers using the company’s Advantage+ catalog to create their sales ads.

Another feature, image expansion, allows advertisers to adjust their assets to fit the different aspect ratios required across various products, like Feed or Reels. Also available through Advantage+ creative in Meta’s Ads Manager, the AI feature is meant to let advertisers spend less time repurposing their creative assets, including images and video, for different surfaces, Meta claims.

With the text variations feature in Meta Ads Manager, the AI can generate up to six different variations of text based on the advertiser’s original copy. These variations can highlight specific keywords and input phrases the advertiser wants to emphasize, and advertisers can edit the generated output or simply choose the one or ones that best fit their goals. During the campaign, Meta can also display different combinations of text to different people to see which ones drive better responses. However, Meta won’t show performance details for each specific text variation, it says, as the reporting is currently based on a single ad. Still, the more options the advertiser selects to run, the more opportunities they’ll have to improve their ad performance, Meta says.

Meta says it tested these AI features with a small but diverse set of advertisers earlier this year, and the early results indicate that generative AI will save them five or more hours per week, or a total of one month per year. However, the company admits that there’s still work ahead to better customize the generative AI output to match each advertiser’s style.

In addition, Meta says there are more AI features to come, noting it’s working on new ways to generate ad copy that highlights selling points, as well as generated backgrounds with tailored themes. Plus, as it announced at Meta Connect, businesses will be able to use AI for messaging on WhatsApp and Messenger to chat with customers for e-commerce, engagement and support.