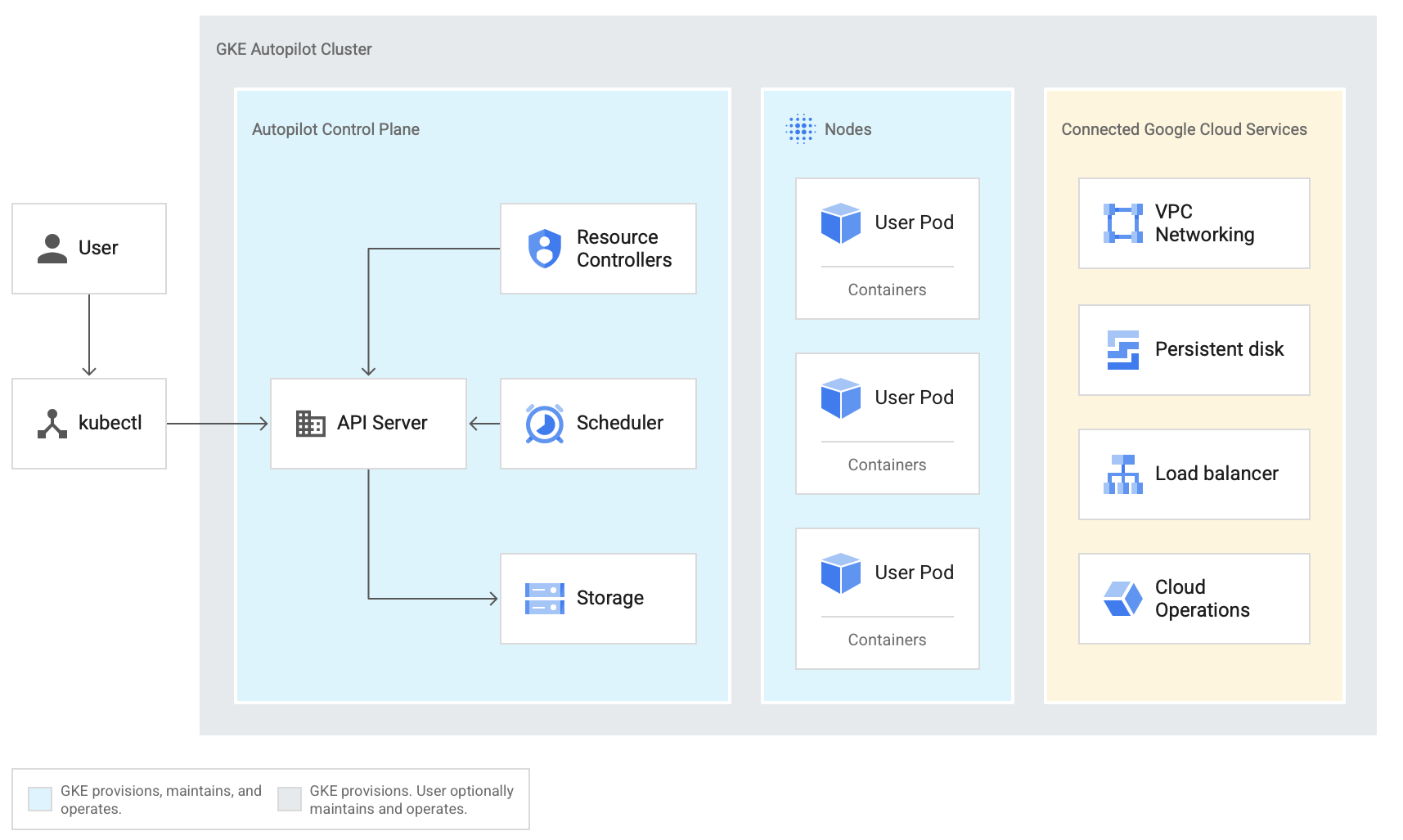

Google Cloud today announced a new operating mode for its Kubernetes Engine (GKE) that hands much of the day-to-day management of a container cluster over to Google’s own engineers and automated tools. With Autopilot, as the new mode is called, Google handles all of the Day 2 operations for these clusters and their nodes, while implementing best practices for operating and securing them.

This new mode augments the existing GKE experience, which already handled most of the infrastructure work involved in standing up a cluster. This “standard” experience, as Google Cloud now calls it, is still available and allows users to customize their configurations to their heart’s content and manually provision and manage their node infrastructure.

Drew Bradstock, the group product manager for GKE, told me that the idea behind Autopilot was to combine all of the tools that Google already had for GKE with the expertise of its SRE teams, who know how to run these clusters in production and have long done so inside the company.

“Autopilot stitches together auto-scaling, auto-upgrades, maintenance, Day 2 operations and — just as importantly — does it in a hardened fashion,” Bradstock noted. “[ … ] What this has allowed our initial customers to do is very quickly offer a better environment for developers or dev and test, as well as production, because they can go from Day Zero and the end of that five-minute cluster creation time, and actually have Day 2 done as well.”

From a developer’s perspective, nothing really changes here, but this new mode does free up teams to focus more on the actual workloads and less on managing Kubernetes clusters. With Autopilot, businesses still get the benefits of Kubernetes, but without all of the routine management and maintenance work that comes with it. And that’s definitely a trend we’ve been seeing as the Kubernetes ecosystem has evolved. Few companies, after all, see their ability to effectively manage Kubernetes as their real competitive differentiator.

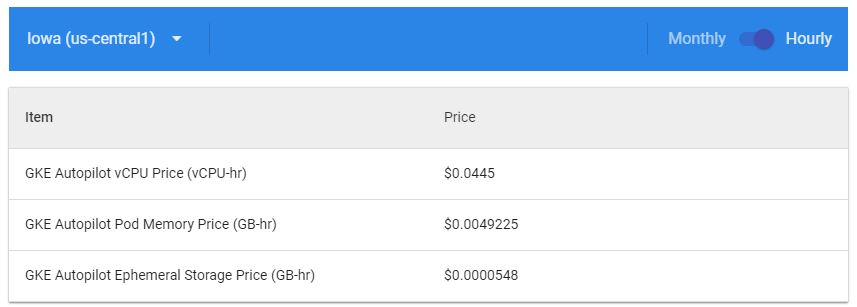

All of that comes at a price, of course. Autopilot users pay the standard GKE flat fee of $0.10 per cluster per hour (there’s also a free GKE tier that provides $74.40 in billing credits), plus additional fees for the resources that their clusters and pods consume. Google offers a 99.95% SLA for the control plane of its Autopilot clusters and a 99.9% SLA for Autopilot pods in multiple zones.
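To put those figures in perspective, here is a rough back-of-the-envelope sketch in Python. It only does arithmetic on the numbers quoted above; the function names are made up for illustration and are not part of any Google Cloud API, and the 24/7 usage and 30-day SLA window are assumptions rather than anything Google has specified here.

```python
# Back-of-the-envelope figures from the pricing and SLA numbers quoted above.
# Function names are illustrative, not part of any Google Cloud API.

FLAT_FEE_CENTS_PER_HOUR = 10  # $0.10 per cluster per hour, as quoted


def cluster_fee_cents(days: int, clusters: int = 1) -> int:
    """Flat management fee in cents for `clusters` running 24/7 for `days` days."""
    return FLAT_FEE_CENTS_PER_HOUR * 24 * days * clusters


def allowed_downtime_minutes(sla_percent: float, days: int = 30) -> float:
    """Minutes of downtime an availability target permits over `days` days."""
    total_minutes = days * 24 * 60
    return total_minutes * (100.0 - sla_percent) / 100.0


if __name__ == "__main__":
    # Assumes one cluster running around the clock; resource fees are not modeled.
    print(f"Flat fee, 31-day month: ${cluster_fee_cents(31) / 100:.2f}")
    print(f"99.95% SLA: {allowed_downtime_minutes(99.95):.1f} min per 30 days")
    print(f"99.9% SLA: {allowed_downtime_minutes(99.9):.1f} min per 30 days")
```

Under these assumptions, the flat fee for a single cluster running through a full 31-day month works out to the same $74.40 as the free-tier credit. The resource fees for clusters and pods are billed separately and are not covered by this sketch.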

Autopilot for GKE joins a set of container-centric products in the Google Cloud portfolio that also includes Anthos for running in multicloud environments and Cloud Run, Google’s serverless offering. “[Autopilot] is really [about] bringing the automation aspects in GKE we have for running on Google Cloud, and bringing it all together in an easy-to-use package, so that if you’re newer to Kubernetes, or you’ve got a very large fleet, it drastically reduces the amount of time, operations and even compute you need to use,” Bradstock explained.

And while GKE is a key part of Anthos, that service is more about bringing Google’s config management, service mesh and other tools to an enterprise’s own data center. Autopilot for GKE is, at least for now, only available on Google Cloud.

“On the serverless side, Cloud Run is really, really great for an opinionated development experience,” Bradstock added. “So you can get going really fast if you want an app to be able to go from zero to 1,000 and back to zero — and not worry about anything at all and have it managed entirely by Google. That’s highly valuable and ideal for a lot of development. Autopilot is more about simplifying the entire platform people work on when they want to leverage the Kubernetes ecosystem, be a lot more in control and have a whole bunch of apps running within one environment.”