Since Honda released its ASIMO robot in 2000, humanoid robots have greatly improved their ability to perform functions like grasping objects and using computer vision to detect things. Despite these improvements, walking, jumping and performing other complex legged motions as fluidly as humans has remained a challenge for roboticists.

In recent years, advances in robot learning and design have drawn on data and insights from animal behavior to help legged robots move in much more human-like ways.

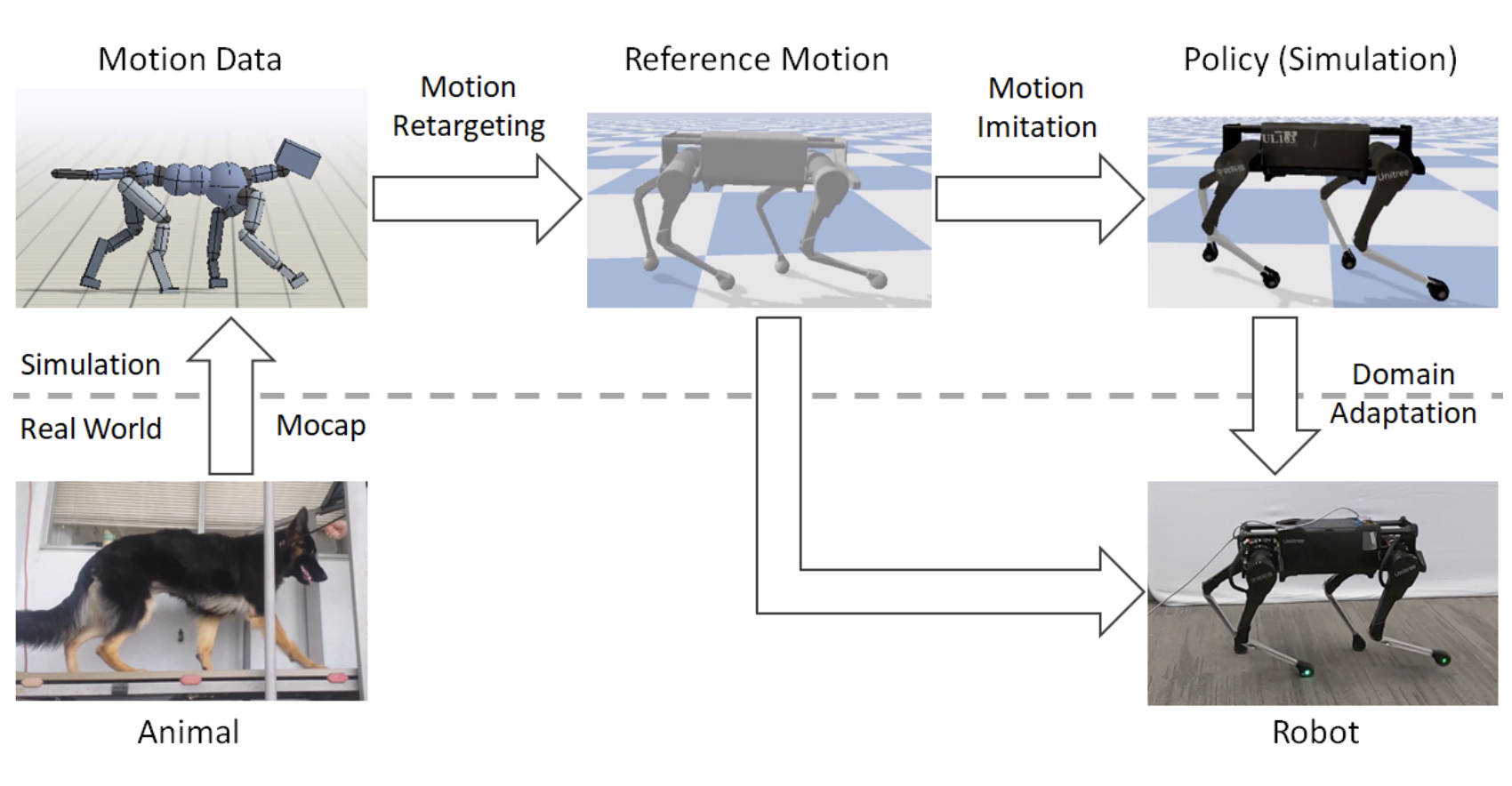

Researchers from Google and UC Berkeley published work earlier this year that showed a robot learning how to walk by mimicking a dog’s movements using a technique called imitation learning. Separate work showed a robot successfully learning to walk by itself through trial and error using deep reinforcement learning algorithms.

Imitation learning has been used in robotics for a variety of tasks, such as OpenAI’s work on teaching a robot to grasp objects by imitation, but its use in robot locomotion is new and encouraging. It lets a robot take data generated by an expert performing the actions to be learned and combine it with deep learning techniques to learn movements more effectively.

Much of the recent work using imitation and broader deep learning techniques has involved small-scale robots. Many challenges remain before the same capabilities can be applied to life-size robots, but these advances open new pathways for improving robot locomotion.

Animal behavior has also inspired robot design: companies such as Agility Robotics and Boston Dynamics use force modeling techniques and integrate full-body sensors to help their robots more closely mimic how animals execute complex movements.

Humanoids are increasingly used today in settings ranging from health systems to oil and construction sites. Improving their ability to move, including running, traveling over rough terrain and getting up after falls, will greatly expand what they can accomplish in these settings and enable new applications such as rescue missions, military operations and security monitoring.

Imitation and deep learning are redefining how robots can learn to move

The use of imitation learning in ML applications isn’t entirely new. The approach involves collecting reference data from an expert (a human, an animal or a simulation) performing a desired motion, then training a system to learn from that data.
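To make the idea concrete, here is a minimal sketch of the simplest form of imitation learning, often called behavior cloning: record (state, action) pairs from an expert and fit a policy that reproduces the expert’s actions. The one-dimensional “lean” state, the expert’s rule and the linear policy are all hypothetical stand-ins; real locomotion systems use high-dimensional sensor data and neural networks.

```python
import random
from typing import List, Tuple

def expert_action(state: float) -> float:
    """Hypothetical expert rule: push back against a lean with a gain of 2.0."""
    return -2.0 * state

def collect_demonstrations(n: int, seed: int = 0) -> List[Tuple[float, float]]:
    """Record (state, action) pairs from the expert, with small noise on its actions."""
    rng = random.Random(seed)
    demos = []
    for _ in range(n):
        state = rng.uniform(-1.0, 1.0)
        action = expert_action(state) + rng.gauss(0.0, 0.05)
        demos.append((state, action))
    return demos

def fit_linear_policy(demos: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Least-squares fit of action = w * state + b to the demonstrations."""
    n = len(demos)
    mean_s = sum(s for s, _ in demos) / n
    mean_a = sum(a for _, a in demos) / n
    cov = sum((s - mean_s) * (a - mean_a) for s, a in demos)
    var = sum((s - mean_s) ** 2 for s, _ in demos)
    w = cov / var
    b = mean_a - w * mean_s
    return w, b

demos = collect_demonstrations(500)
w, b = fit_linear_policy(demos)
print(f"learned policy: action = {w:.2f} * state + {b:.2f}")
```

The learned gain should land close to the expert’s 2.0 (with a negative sign) even though the policy never sees the expert’s rule, only its recorded behavior.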

One of the earliest projects to use imitation learning successfully was ALVINN, an autonomous driving project at CMU in 1989. The system was taught to keep a car within its lane using images from actual driving footage as training data, and despite its rudimentary neural network architecture, it was able to drive a car across the U.S.

In robotics more specifically, imitation learning came into use in the 1990s. Early use cases involved point actions such as picking up objects, and later evolved into slightly more complex ones such as trajectory planning for longer movement sequences.

The past two decades have established imitation learning as an important technique in robotics, particularly for modeling actions such as robot arm movements, which can often be learned from demonstrations by a human or a computer simulation.

Applying it to complex tasks such as legged locomotion, however, has proved difficult. Legged movement typically involves a range of motions that must be learned and applied simultaneously, often across many degrees of freedom.

Recently, advances in deep learning have yielded promising results in helping robots learn how to perform legged movement.

The previously mentioned work from Google and UC Berkeley researchers involved putting sensors on a dog to track its movement, teaching a virtual robot to imitate this motion in a simulated environment and then transferring what it learned to a real-life robot.
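The final step, moving a policy from simulation to a physical robot, is commonly eased by randomizing the simulator’s physics during training so the policy does not overfit to a single set of parameters. The sketch below shows that general technique, domain randomization, in its simplest form; the parameter names and ranges are hypothetical, and it is not presented as the exact procedure the Google and UC Berkeley team used.

```python
import random
from dataclasses import dataclass

@dataclass
class PhysicsParams:
    mass_scale: float       # multiplier on the robot's nominal link masses
    ground_friction: float  # ground friction coefficient
    motor_latency_s: float  # control latency, in seconds

# Hypothetical ranges; a real setup would choose these for its robot and simulator
RANGES = {
    "mass_scale": (0.8, 1.2),
    "ground_friction": (0.5, 1.25),
    "motor_latency_s": (0.0, 0.04),
}

def sample_params(rng: random.Random) -> PhysicsParams:
    """Draw one random set of simulator physics for a single training episode."""
    return PhysicsParams(**{name: rng.uniform(lo, hi) for name, (lo, hi) in RANGES.items()})

rng = random.Random(42)
episodes = [sample_params(rng) for _ in range(1000)]
print(episodes[0])
```

Each training episode would then run in a simulator configured with a fresh sample, so the policy has to cope with a spread of conditions that, ideally, includes the real robot’s.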


The real-life robot was able to learn a range of motions, including walking forward, spinning and taking steps in place. By playing the reference motion data clips backward, the team was also able to teach the robot to walk backward.
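Playing a clip backward amounts to reversing the order of its frames. The sketch below assumes a hypothetical clip format, a list of frames that each hold a timestamp and a tuple of joint angles, and remaps the timestamps so the reversed clip still runs forward in time from zero. A real motion pipeline would also need to handle quantities such as root velocities, whose sign flips under reversal.

```python
from typing import List, Tuple

# Hypothetical frame format: (time in seconds, joint angles in radians)
Frame = Tuple[float, Tuple[float, ...]]

def reverse_clip(clip: List[Frame]) -> List[Frame]:
    """Return the clip played backward, with timestamps starting from 0."""
    if not clip:
        return []
    end_time = clip[-1][0]
    return [(end_time - t, angles) for t, angles in reversed(clip)]

forward = [(0.0, (0.1, -0.2)), (0.5, (0.3, -0.1)), (1.0, (0.5, 0.0))]
backward = reverse_clip(forward)
print(backward)  # [(0.0, (0.5, 0.0)), (0.5, (0.3, -0.1)), (1.0, (0.1, -0.2))]
```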

These capabilities are compelling because they are difficult to learn with hand-engineered ML techniques, which typically teach a robot a specified set of movements but don’t lead to a more varied set of motions. Such techniques also require custom design for a given robot and are difficult to generalize to different robot and environment setups.

On the other hand, pure deep reinforcement learning techniques, which learn by trial and error through exploration, generalize well across different motions but carry a lot of algorithmic complexity.

Imitation learning can help reduce this complexity. Used in conjunction with deep reinforcement learning, it can make those techniques perform much better while retaining more adaptability to different movements than hand-engineered ML techniques offer.
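One common way to combine the two is to reward the reinforcement learning agent for tracking the reference motion, so its trial and error is guided by expert data instead of starting from scratch. The sketch below shows a pose-tracking reward in the exponentiated-error form popularized by motion-imitation research; the `scale` value and joint angles are hypothetical, and practical systems typically combine several such terms (for example, for velocities and foot positions).

```python
import math
from typing import Sequence

def pose_tracking_reward(q: Sequence[float], q_ref: Sequence[float], scale: float = 5.0) -> float:
    """Reward in (0, 1]: 1.0 when the joint angles q match the reference q_ref exactly,
    decaying exponentially with the summed squared tracking error."""
    if len(q) != len(q_ref):
        raise ValueError("joint vectors must have the same length")
    sq_error = sum((a - b) ** 2 for a, b in zip(q, q_ref))
    return math.exp(-scale * sq_error)

ref = [0.2, -0.4, 0.9]  # reference pose for one frame (hypothetical, radians)
print(pose_tracking_reward(ref, ref))               # perfect tracking
print(pose_tracking_reward([0.3, -0.4, 0.7], ref))  # some tracking error
```

The exponential keeps the reward bounded and smooth, so small deviations still earn partial credit, which gives the learner a useful signal early in training.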

Interestingly, separate research published by teams at UC Berkeley, Google and Georgia Tech demonstrated a small, four-legged robot learning to walk on its own by trial and error using deep reinforcement learning, without any motion reference data. This is akin to how a baby might learn to walk by trial and error, with the system relying on its own experience rather than learning to imitate motions from external data.
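Learning without reference data replaces the imitation signal with a task reward and improves the behavior by trying variations and keeping what works. The sketch below illustrates that trial-and-error loop with simple random-search hill climbing over two gait parameters; it is a deliberately swapped-in technique, not a deep reinforcement learning algorithm, and `toy_gait_reward` is a made-up stand-in for a simulator or real robot.

```python
import random
from typing import Callable, List, Tuple

def toy_gait_reward(step_freq: float, step_len: float) -> float:
    """Hypothetical reward that peaks at step_freq = 2.0 Hz and step_len = 0.3 m."""
    return 1.0 - (step_freq - 2.0) ** 2 - 10.0 * (step_len - 0.3) ** 2

def hill_climb(reward_fn: Callable[..., float], params: List[float],
               iters: int = 2000, step: float = 0.05, seed: int = 0) -> Tuple[List[float], float]:
    """Randomly perturb the parameters and keep a trial only if it scores higher."""
    rng = random.Random(seed)
    best = list(params)
    best_r = reward_fn(*best)
    for _ in range(iters):
        trial = [p + rng.gauss(0.0, step) for p in best]  # try a random variation
        r = reward_fn(*trial)
        if r > best_r:  # keep what works, discard what doesn't
            best, best_r = trial, r
    return best, best_r

start = [1.0, 0.1]
params, reward = hill_climb(toy_gait_reward, start)
print(f"step_freq={params[0]:.2f} Hz, step_len={params[1]:.2f} m, reward={reward:.3f}")
```

Deep reinforcement learning methods replace these two numbers with the weights of a neural network policy and use far more sample-efficient update rules, but the underlying loop of acting, scoring and adjusting is the same.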

It remains to be seen how well such approaches, with varying degrees of imitation, can extend to life-size robots.

Capturing and using reference motion data for real-life robots can be difficult, particularly for complex motions such as jumping or walking on rough terrain, and it seems likely that a full repertoire of movement would require continuous learning and improvement.

At the same time, better ways of capturing and using reference motion data could let imitation learning improve how motions are learned, compared with purely self-learning techniques. Research in 2017 showed a two-legged robot improving its balance through imitation learning on human motion capture data.

Advances on many of these fronts will likely let both imitation learning and broader deep reinforcement learning techniques play increasingly important roles in helping humanoid robots perform movements, probably in conjunction with existing computer vision and motion planning techniques.

Drawing inspiration for humanoid robot design from animal behaviors

Imitation also shapes how roboticists approach robot design based on an understanding of animal movement, particularly in the design of legs, forces and coordination across different body areas.

Dr. Jonathan Hurst, a professor at Oregon State University who leads the Dynamic Robotics Laboratory, has focused extensively on applying lessons from animal motion to building humanoid robots. He writes that research groups are increasingly working on building robots that are “less stiff and that can move in a more dynamic, humanlike fashion.”

Humanoid robots built to date have legs that resemble human legs in joint layout and can mimic human positions reasonably well, but their force control, which is needed for less rigid, more flexible and elastic motion, remains limited.

His team, along with collaborators from CMU and the University of Michigan, created a robot called ATRIAS that uses a simple spring-mass design to capture the complexity of the forces animals exert while executing movements. ATRIAS has been able to walk in a much more human-like fashion than past humanoid robot designs, and has shown how much factors such as the design and modeling of forces matter, beyond learning capabilities themselves, in enabling robots to move better.
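Spring-mass models treat a leg as a massless spring supporting a point mass, so the energy absorbed on landing is stored and returned on push-off, much as tendons do in animals. The sketch below simulates the simplest vertical version of such a model, a hopper bouncing in place; the mass, stiffness and leg length are hypothetical values, and it illustrates the principle rather than ATRIAS’s actual model or controller.

```python
from typing import Optional

G = 9.81  # gravitational acceleration, m/s^2

def simulate_hop(y0: float = 1.2, leg_length: float = 1.0, mass: float = 30.0,
                 stiffness: float = 30000.0, dt: float = 1e-4,
                 duration: float = 2.0) -> Optional[float]:
    """Drop a point mass (hip height y0) onto a massless vertical spring leg and return
    the apex height of the flight phase after the first bounce. With no damping in the
    model, the apex should come back very close to y0."""
    y, v = y0, 0.0
    touched_down = False
    in_flight_after_bounce = False
    apex = None
    for _ in range(int(duration / dt)):
        compression = leg_length - y
        # The spring pushes up only while the foot is on the ground (leg compressed)
        spring_force = stiffness * compression if compression > 0 else 0.0
        a = spring_force / mass - G
        # Semi-implicit Euler: update velocity first, then position (good energy behavior)
        v += a * dt
        y += v * dt
        if compression > 0:
            if in_flight_after_bounce:
                break  # second touchdown: the first post-bounce flight is over
            touched_down = True
        elif touched_down:
            in_flight_after_bounce = True
            apex = y if apex is None else max(apex, y)
    return apex

print(simulate_hop())  # close to the 1.2 m starting height
```

Because the spring returns the stored energy, the mass rebounds to nearly its starting height without any actuator input; real legs add damping and motors, but this elastic storage is what makes spring-based designs energy-efficient.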

Agility Robotics, co-founded by Dr. Hurst, has been developing robots that build on such animal-inspired design principles.

One of its robots, Cassie, walks on two legs that were carefully designed to enable fast walking and travel over rough terrain. The legs have many axes of motion, including hips with three degrees of freedom, similar to humans, so they can swing in any direction. This required a more sophisticated controller based on more complex mathematical modeling of torques, but enabled a much wider range of motion.
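A hip with three degrees of freedom can be modeled as three rotations applied in sequence. The sketch below computes where the foot of a straight, rigid leg ends up for given hip yaw, roll and pitch angles, showing how combining the three axes lets the leg point in many directions. The rotation order, axis conventions and 0.9 m leg length are assumptions for illustration; Cassie’s real legs also have knees and compliant elements that are not modeled here.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]

def rot_x(v: Vec3, a: float) -> Vec3:
    """Rotate v by angle a (radians) about the x axis (roll)."""
    x, y, z = v
    c, s = math.cos(a), math.sin(a)
    return (x, c * y - s * z, s * y + c * z)

def rot_y(v: Vec3, a: float) -> Vec3:
    """Rotate v by angle a (radians) about the y axis (pitch)."""
    x, y, z = v
    c, s = math.cos(a), math.sin(a)
    return (c * x + s * z, y, -s * x + c * z)

def rot_z(v: Vec3, a: float) -> Vec3:
    """Rotate v by angle a (radians) about the z axis (yaw)."""
    x, y, z = v
    c, s = math.cos(a), math.sin(a)
    return (c * x - s * y, s * x + c * y, z)

def foot_position(yaw: float, roll: float, pitch: float, leg_length: float = 0.9) -> Vec3:
    """Foot position relative to the hip (x forward, y left, z up) for a straight leg that
    hangs straight down at zero angles. The leg vector is rotated by pitch, then roll,
    then yaw, i.e. R = Rz(yaw) * Rx(roll) * Ry(pitch)."""
    foot = (0.0, 0.0, -leg_length)
    foot = rot_y(foot, pitch)
    foot = rot_x(foot, roll)
    foot = rot_z(foot, yaw)
    return foot

print(foot_position(0.0, 0.0, 0.0))           # hanging straight down
print(foot_position(0.0, 0.0, -math.pi / 6))  # swung forward 30 degrees
print(foot_position(math.pi / 4, 0.3, -0.5))  # a combined three-axis swing
```

Under these conventions, negative pitch swings the foot forward, positive roll swings it toward +y, and yaw turns the plane in which the leg swings; a controller must coordinate all three to place the foot where it is needed.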

Cassie’s legs are relatively light and power-efficient, almost like those of an ostrich, and its movements look fluid. Cassie can walk outdoors, including on dirt and grass, and can climb steps much more naturally while maintaining balance. The team extended this design to build a new robot, Digit, adding arms that help with balance and integrating many more sensors to approximate forces more effectively.

This approach of integrating the whole body into movement, commonly referred to as perception integration, also has its roots in the way animals move, and is increasingly used in building robots.

Boston Dynamics, one of the leading robot developers, has adopted this concept in many of its robots, including Atlas, which can perform an array of impressive movements such as jumps and somersaults despite its full-size build.

Its advanced control system makes this possible by coordinating complex interactions across its entire body, which has 28 hydraulic joints. Its parts are also 3D-printed and optimized to give it the strength-to-weight ratio needed for such movements despite its size.

Humanoid robots have not lived up to the expectations set by Rosie from “The Jetsons” in the 1960s, but they’re finally becoming good enough to come to market. Ford announced plans earlier this year to purchase Agility Robotics’ Digit robot for use in its factories, and Boston Dynamics announced plans in the past year to commercialize its Spot robot.

Improvements in humanoid robot locomotion are necessary to power the many applications these robots could ultimately serve, and the potential for approaches such as imitation learning and deep learning, along with improvements in robot design, to deliver those improvements is compelling.