Prosthetic limbs are getting better every year, but the strength and precision they gain doesn’t always translate to easier or more effective use, as amputees have only a basic level of control over them. One promising avenue being investigated by Swiss researchers is having an AI take over where manual control leaves off.

To visualize the problem, imagine a person whose arm has been amputated above the elbow controlling a smart prosthetic limb. With sensors picking up signals from their remaining muscles and elsewhere, they may be able to lift the arm fairly easily and direct it to a position where they can grab an object on a table.

But what happens next? The many muscles and tendons that would have controlled the fingers are gone, and with them the ability to sense exactly how the user wants to flex or extend their artificial digits. If all the user can do is signal a generic “grip” or “release,” much of what makes a hand useful is lost.

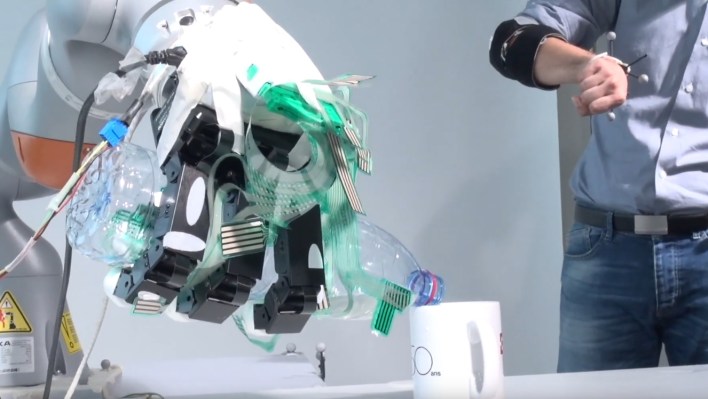

Here’s where researchers from École polytechnique fédérale de Lausanne (EPFL) take over. Being limited to telling the hand to grip or release isn’t a problem if the hand knows what to do next — sort of like how our natural hands “automatically” find the best grip for an object without our needing to think about it. Robotics researchers have been working on automatic detection of grip methods for a long time, and it’s a perfect match for this situation.

Prosthesis users train a machine learning model by letting it observe their muscle signals while they attempt various motions and grips as best they can without a hand to perform them. With that basic information, the robotic hand knows what type of grasp it should attempt, and by monitoring and maximizing its area of contact with the target object, it improvises the best grip in real time. It also resists drops, adjusting its grip in less than half a second if the object starts to slip.

The result is that the object is grasped strongly but gently for as long as the user continues gripping it with, essentially, their will. When they’re done with the object, having taken a sip of coffee or moved a piece of fruit from a bowl to a plate, they “release” the object, and the system senses this change in their muscle signals and lets go as well.
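The hold-and-release behavior can likewise be sketched, again hypothetically, as a detector on the muscle signal: rectify it, smooth it with a moving average, and apply two thresholds so that small dips in effort don't drop the object. The `GripIntent` class and its `window`, `on_level` and `off_level` parameters are assumptions for illustration, not details from the paper.

```python
from collections import deque


class GripIntent:
    """Hypothetical hold/release detector for a stream of raw muscle-signal samples."""

    def __init__(self, window: int = 5, on_level: float = 0.3, off_level: float = 0.15):
        self.buf: deque[float] = deque(maxlen=window)  # recent rectified samples
        self.on_level = on_level    # envelope level that starts a grip (assumed)
        self.off_level = off_level  # lower level that ends it (assumed)
        self.holding = False

    def update(self, sample: float) -> bool:
        """Feed one sample; return True while the user is judged to be gripping."""
        self.buf.append(abs(sample))              # rectify
        envelope = sum(self.buf) / len(self.buf)  # moving-average envelope
        if not self.holding and envelope >= self.on_level:
            self.holding = True
        elif self.holding and envelope < self.off_level:
            self.holding = False                  # user relaxed: let go
        return self.holding


# Usage with window=1 so each sample is its own envelope value.
intent = GripIntent(window=1)
states = [intent.update(x) for x in [0.0, 0.5, -0.25, 0.2, 0.1, 0.0]]
print(states)  # [False, True, True, True, False, False]
```

Setting the release threshold below the grip threshold (hysteresis) is what keeps the simulated hand holding through the -0.25 and 0.2 samples, which fall below `on_level` but stay above `off_level`.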

It’s reminiscent of another approach, by students in Microsoft’s Imagine Cup, in which the arm is equipped with a camera in the palm that gives it feedback on the object and how it ought to grip it.

It’s all still very experimental, and done with a third-party robotic arm and not particularly optimized software. But this “shared control” technique is promising and could very well be foundational to the next generation of smart prostheses. The team’s paper is published in the journal Nature Machine Intelligence.