Augmented reality may not be an iOS feature consumers are dying to get their hands on, but Apple’s latest tools are going to make its long-shot dream of AR ubiquity a little bit easier for developers to build.

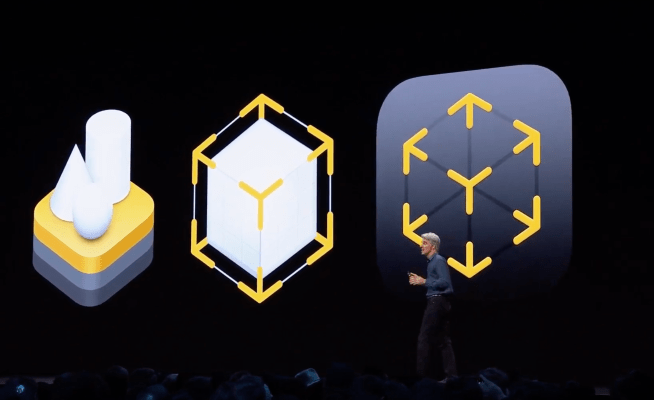

The company delivered some interesting updates in ARKit 3, the latest version of its AR toolkit, and introduced a new framework for developers called RealityKit.

Apple’s moves with RealityKit could start to invade territory currently held by Unity and Unreal, which let developers build 3D content but understandably weren’t built with AR in mind from the beginning. That makes integrating with how the real world functions a bit of a chore. The goal with RealityKit and Reality Composer is to make this relationship smoother.

The tools let you set the scene, importing 3D assets and sound sources, while detailing how those objects will interact with user input and their environment. It’s all custom-designed for iOS, and developers can test and demo AR scenes on an iPhone or iPad to get a real sense of how a finished product will look.

For ARKit 3, the big announcement is that the latest version supports real-time occlusion, a very hard computer vision problem. Basically, it means the system has to keep an eye on where human figures begin and end so that digital content can account for people wandering in front of it.

This is likely one of the hardest problems that Apple has tackled yet, and judging by one of the onstage demos, it doesn’t look like it’s quite perfect. Occlusion by environmental objects isn’t supported in this update.

Another addition to ARKit is full-body motion capture, something that probably builds on a lot of the same tech advances behind occlusion. Expect to see selfie filters on Instagram and Snapchat expand from the face to entire bodies with this new functionality.

RealityKit is in beta now, and ARKit 3 is on the way in iOS 13.