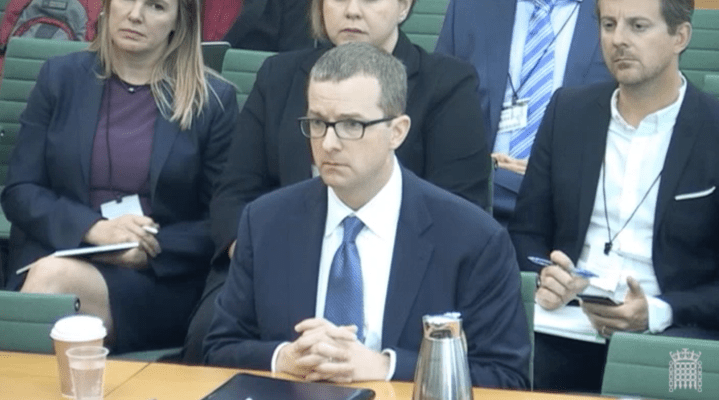

Facebook’s CTO Mike Schroepfer has just undergone almost five hours of often forensic and frequently awkward questions from members of a UK parliament committee that’s investigating online disinformation, and whose members have been further fired up by misinformation they claim Facebook gave it.

The veteran senior exec, who’s clocked up a decade at the company, including as its VP of engineering, is the latest stand-in for CEO Mark Zuckerberg, who keeps eschewing repeat requests to appear.

The DCMS committee’s enquiry began last year as a probe into ‘fake news’ but has snowballed in scope as the scale of concern around political disinformation has also mounted — including, most recently, fresh information being exposed by journalists about the scale of the misuse of Facebook data for political targeting purposes.

During today’s session committee chair Damian Collins again made a direct appeal for Zuckerberg to testify, pausing the flow of questions momentarily to cite news reports suggesting the Facebook founder has agreed to fly to Brussels to testify before European Union lawmakers in relation to the Cambridge Analytica Facebook data misuse scandal.

“We’ll certainly be renewing our request for him to give evidence,” said Collins. “We still do need the opportunity to put some of these questions to him.”

Committee members displayed visible outrage during the session, accusing Facebook of concealing the truth or at very least concealing evidence from it at a prior hearing that took place in Washington in February — when the company sent its UK head of policy, Simon Milner, and its head of global policy management, Monika Bickert, to field questions.

During questioning Milner and Bickert failed to inform the committee about a legal agreement Facebook had made with Cambridge Analytica in December 2015 — after Facebook had learned (via an earlier Guardian article) that user data had been passed to Cambridge Analytica by the developer of an app running on Facebook’s platform.

Milner also told the committee that Cambridge Analytica could not have any Facebook data — yet last month Facebook admitted data on up to 87 million of its users had indeed been passed to the firm.

Schroepfer said he wasn’t sure whether Milner had been “specifically informed” about the agreement Facebook already had with Cambridge Analytica — adding: “I’m guessing he didn’t know”. He also claimed he had only himself become aware of it “within the last month”.

“Who knows? Who knows about what the position was with Cambridge Analytica in February of this year? Who was in charge of this?” pressed one committee member.

“I don’t know all of the names of the people who knew that specific information at the time,” responded Schroepfer.

“We are a parliamentary committee. We went to Washington for evidence and we raised the issue of Cambridge Analytica. And Facebook concealed evidence to us as an organization on that day. Isn’t that the truth?” rejoined the committee member, pushing past Schroepfer’s claim to be “doing my best” to provide it with information.

A pattern of evasive behavior

“You are doing your best but the buck doesn’t stop with you does it? Where does the buck stop?”

“It stops with Mark,” replied Schroepfer — leading to a quick-fire exchange where he was pressed about (and avoided answering) what Zuckerberg knew and why the Facebook founder wouldn’t come and answer the committee’s questions himself.

“What we want is the truth. We didn’t get the truth in February… Mr Schroepfer I remain to be convinced that your company has integrity,” was the pointed conclusion after a lengthy exchange on this.

“What’s been frustrating for us in this enquiry is a pattern of behavior from the company — an unwillingness to engage, and a desire to hold onto information and not disclose it,” said Collins, returning to the theme at another stage of the hearing — and also accusing Facebook of not providing it with “straight answers” in Washington.

“We wouldn’t be having this discussion now if this information hadn’t been brought into the light by investigative journalists,” he continued. “And Facebook even tried to stop that happening as well [referring to a threat by the company to sue the Guardian ahead of publication of its Cambridge Analytica exposé]… It’s a pattern of behavior, of seeking to pretend this isn’t happening.”

The committee expressed further dissatisfaction with Facebook immediately following the session, emphasizing that Schroepfer had “failed to answer fully on nearly 40 separate points”.

“Mr Schroepfer, Mark Zuckerberg’s right hand man whom we were assured could represent his views, today failed to answer many specific and detailed questions about Facebook’s business practices,” said Collins in a statement after the hearing.

“We will be asking him to respond in writing to the committee on these points; however, we are mindful that it took a global reputational crisis and three months for the company to follow up on questions we put to them in Washington D.C. on February 8.

“We believe that, given the large number of outstanding questions for Facebook to answer, Mark Zuckerberg should still appear in front of the Committee… and will request that he appears in front of the DCMS Committee before the May 24.”

We reached out to Facebook for comment — but at the time of writing the company had not responded.

Palantir’s data use under review

Schroepfer was questioned on a wide range of topics during today’s session. And while he was fuzzy on many details, giving lots of partial answers and promises to “follow up”, one thing he did confirm was that Facebook board member Peter Thiel’s secretive big data analytics firm, Palantir, is one of the companies Facebook is investigating as part of a historical audit of app developers’ use of its platform.

Have there ever been concerns raised about Palantir’s activity, and about whether it has gained improper access to Facebook user data, asked Collins.

“I think we are looking at lots of different things now. Many people have raised that concern — and since it’s in the public discourse it’s obviously something we’re looking into,” said Schroepfer.

“But it’s part of the review work that Facebook’s doing?” pressed Collins.

“Correct,” he responded.

The historical app audit was announced in the wake of last month’s revelations about how much Facebook data Cambridge Analytica was given by app developer (and Cambridge University academic), Dr Aleksandr Kogan — in what the company couched as a “breach of trust”.

However Kogan, who testified to the committee earlier this week, argues he was just using Facebook’s platform as it was architected and intended to be used — going so far as to claim its developer terms are “not legally valid”. (“For you to break a policy it has to exist. And really be their policy. The reality is Facebook’s policy is unlikely to be their policy,” was Kogan’s construction, earning him a quip from a committee member that he “should be a professor of semantics”.)

Schroepfer said he disagreed with Kogan’s assessment that Facebook didn’t have a policy, saying the goal of the platform has been to foster social experiences — and that “those same tools, because they’re easy and great for the consumer, can go wrong”. So he did at least indirectly confirm Kogan’s general point that Facebook’s developer and user terms are at loggerheads.

“This is why we have gone through several iterations of the platform — where we have effectively locked down parts of the platform,” continued Schroepfer. “Which increases friction and makes it less easy for the consumer to use these things but does safeguard that data more. And been a lot more proactive in the review and enforcement of these things. So this wasn’t a lack of care… but I’ll tell you that our primary product is designed to help people share safely with a limited audience.

“If you want to say it to the world you can publish it on a blog or on Twitter. If you want to share it with your friends only, that’s the primary thing Facebook does. We violate that trust — and that data goes somewhere else — we’re sort of violating the core principles of our product. And that’s a big problem. And this is why I wanted to come to you personally today to talk about this because this is a serious issue.”

“You’re not just a neutral platform — you are players”

The same committee member, Paul Farrelly, who earlier pressed Kogan about why he hadn’t bothered to find out which political candidates stood to be the beneficiary of his data harvesting and processing activities for Cambridge Analytica, put it to Schroepfer that Facebook’s own actions in how it manages its business activities — and specifically because it embeds its own staff with political campaigns to help them use its tools — amounts to the company being “Dr Kogan writ large”.

“You’re not just a neutral platform — you are players,” said Farrelly.

“The clear thing is we don’t have an opinion on the outcome of these elections. That is not what we are trying to do. We are trying to offer services to any customer of ours who would like to know how to use our products better,” Schroepfer responded. “We have never turned away a political party because we didn’t want to help them win an election.

“We believe in strong open political discourse and what we’re trying to do is make sure that people can get their messages across.”

However in another exchange the Facebook exec appeared not to be aware of a basic tenet of UK election law — which prohibits campaign spending by foreign entities.

“How many UK Facebook users and Instagram users were contacted in the UK referendum by foreign, non-UK entities?” asked committee member Julie Elliott.

“We would have to understand and do the analysis of who — of all the ads run in that campaign — where is the location, the source of all of the different advertisers,” said Schroepfer, tailing off with a “so…” and without providing a figure.

“But do you have that information?” pressed Elliott.

“I don’t have it on the top of my head. I can see if we can get you some more of it,” he responded.

“Our elections are very heavily regulated, and income or monies from other countries can’t be spent in our elections in any way shape or form,” she continued. “So I would have thought that you would have that information. Because your company will be aware of what our electoral law is.”

“Again I don’t have that information on me,” Schroepfer said — repeating the line that Facebook would “follow up with the relevant information”.

The Facebook CTO was also asked if the company could provide the committee with an archive of adverts that were run on its platform around the time of the Brexit referendum by Aggregate IQ — a Canadian data company that’s been linked to Cambridge Analytica/SCL, and which received £3.5M from leave campaign groups in the run up to the 2016 referendum (and has also been described by leave campaigners as instrumental to securing their win). It’s also under joint investigation by Canadian data watchdogs, along with Facebook.

In written evidence provided to the committee today Facebook says it has been helping ongoing investigations into “the Cambridge Analytica issue” that are being undertaken by the UK’s Electoral Commission and its data protection watchdog, the ICO. Here it writes that its records show AIQ spent “approximately $2M USD on ads from pages that appear to be associated with the 2016 Referendum”.

Schroepfer’s responses on several requests by the committee for historical samples of the referendum ads AIQ had run amounted to ‘we’ll see what we can do’ — with the exec cautioning that he wasn’t entirely sure how much data might have been retained.

“I think specifically in Aggregate IQ and Cambridge Analytica related to the UK referendum I believe we are producing more extensive information for both the Electoral Commission and the Information Commissioner,” he said at one point, adding it would also provide the committee with the same information if it’s legally able to. “I think we are trying to do — give them all the data we have on the ads and what they spent and what they’re like.”

Collins asked what would happen if an organization or an individual had used a Facebook ad account to target dark ads during the referendum and then taken down the page as soon as the campaign was over. “How would you be able to identify that activity had ever taken place?” he asked.

“I do believe, uh, we have — I would have to confirm, but there is a possibility that we have a separate system — a log of the ads that were run,” said Schroepfer, displaying some of the fuzziness that irritated the committee. “I know we would have the page itself if the page was still active. If they’d run prior campaigns and deleted the page we may retain some information about those ads — I don’t know the specifics, for example how detailed that information is, and how long retention is for that particular set of data.”

Dark ads a “major threat to democracy”

Collins pointed out that a big part of UK (and indeed US) election law relates to “declaration of spend”, before making the conjoined point that if someone is “hiding that spend” — i.e. by placing dark ads that only the recipient sees, and which can be taken offline immediately after the campaign — it smells like a major risk to the democratic process.

“If no one’s got the ability to audit that, that is a major threat to democracy,” warned Collins. “And would be a license for a major breach of election law.”

“Okay,” responded Schroepfer as if the risk had never crossed his mind before. “We can come back on the details on that.”

On the wider app audit that Facebook has committed to carrying out in the wake of the scandal, Schroepfer was also asked how it can audit apps or entities that are no longer on the platform — and he admitted this is “a challenge” and said Facebook won’t have “perfect information or detail”.

“This is going to be a challenge again because we’re dealing with historic events so we’re not going to have perfect information or detail on any of these things,” he said. “I think where we start is — it very well may be that this company is defunct but we can look at how they used the platform. Maybe there’s two people who used the app and they asked for relatively innocuous data — so the chance that that is a big issue is a lot lower than an app that was widely in circulation. So I think we can at least look at that sort of information. And try to chase down the trail.

“If we have concerns about it even if the company is defunct it’s possible we can find former employees of the company who might have more information about it. This starts with trying to identify where the issues might be and then run the trail down as much as we can. As you highlight, though, there are going to be limits to what we can find. But our goal is to understand this as best as we can.”

The committee also wanted to know if Facebook had set a deadline for completing the audit — but Schroepfer would only say it’s going “as fast as we can”.

He claimed Facebook is sharing “a tremendous amount of information” with the UK’s data protection watchdog — as it continues its (now) year-long investigation into the use of digital data for political purposes.

“I would guess we’re sharing information on this too,” he said in reference to app audit data. “I know that I personally shared a bunch of details on a variety of things we’re doing. And same with the Electoral Commission [which is investigating whether use of digital data and social media platforms broke campaign spending rules].”

In Schroepfer’s written evidence to the committee Facebook says it has unearthed some suggestive links between Cambridge Analytica/SCL and Aggregate IQ: “In the course of our ongoing review, we also found certain billing and administration connections between SCL/Cambridge Analytica and AIQ”, it notes.

Both entities continue to deny any link exists between them, claiming they are entirely separate entities — though the former Cambridge Analytica employee turned whistleblower, Chris Wylie, has described AIQ as essentially the Canadian arm of SCL.

“The collaboration we saw was some billing and administrative contacts between the two of them, so you’d see similar people show up in each of the accounts,” said Schroepfer, when asked for more detail about what it had found, before declining to say anything else in a public setting on account of ongoing investigations — despite the committee pointing out other witnesses it has heard from have not held back on that front.

Another piece of information Facebook has included in the written evidence is the claim that it does not believe AIQ used Facebook data obtained via Kogan’s apps for targeting referendum ads — saying it used email address uploads for “many” of its ad campaigns during the referendum.

“The data gathered through the TIYDL [Kogan’s thisisyourdigitallife] app did not include the email addresses of app installers or their friends. This means that AIQ could not have obtained these email addresses from the data TIYDL gathered from Facebook,” Facebook asserts.

Schroepfer was questioned on this during the session and said that while there was some overlap in terms of individuals who had downloaded Kogan’s app and who had been in the audiences targeted by AIQ, this was only 3-4% — which he claimed was statistically insignificant, based on comparing with other Facebook apps of similar popularity to Kogan’s.

“AIQ must have obtained these email addresses for British voters targeted in these campaigns from a different source,” is the company’s conclusion.

“We are investigating Mr Chancellor’s role right now”

The committee also asked several questions about Joseph Chancellor, the co-director of Kogan’s app company, GSR, who became an employee of Facebook in 2015 after he had left GSR. Its questions included what Chancellor’s exact role at Facebook is and why Kogan has been heavily criticized by the company yet his GSR co-director apparently remains gainfully employed by it.

Schroepfer initially claimed Facebook hadn’t known Chancellor was a director of GSR prior to employing him, in November 2015 — saying it had only become aware of that specific piece of his employment history in 2017.

But after a break in the hearing he ‘clarified’ this answer — adding: “In the recruiting process, people hiring him probably saw a CV and may have known he was part of GSR. Had someone known that — had we connected all the dots to when this thing happened with Mr Kogan, later on had he been mentioned in the documents that we signed with the Kogan party — no. Is it possible that someone knew about this and the right other people in the organization didn’t know about it, that is possible.”

A committee member then pressed him further. “We have evidence that shows that Facebook knew in November 2016 that Joseph Chancellor had formed the company, GSR, with Aleksandr Kogan which obviously then went on to provide the information to Cambridge Analytica. I’m very unclear as to why Facebook have taken such a very direct and critical line… with Kogan but have completely ignored Joseph Chancellor.”

At that point Schroepfer revealed Facebook is currently investigating Chancellor as a result of the data scandal.

“I understand your concern. We are investigating Mr Chancellor’s role right now,” he said. “There’s an employment investigation going on right now.”

In terms of the work Chancellor is doing for Facebook, Schroepfer said he thought he had worked on VR for the company — but emphasized he has not been involved with “the platform”.

The issue of the NDA Kogan claimed Facebook had made him sign also came up. But Schroepfer counterclaimed that this was not an NDA but just a “standard confidentiality clause” in the agreement to certify Kogan had deleted the Facebook data and its derivatives.

“We want him to be able to be open. We’re waiving any confidentiality there if that’s not clear from a legal standpoint,” he said later, clarifying it does not consider Kogan legally gagged.

Schroepfer also confirmed this agreement was signed with Kogan in June 2016, and said the “core commitments” were to confirm the deletion of data from himself and three others Kogan had passed it to: Former Cambridge Analytica CEO Alexander Nix; Wylie, for a company he had set up after leaving Cambridge Analytica; and Dr Michael Inzlicht from the Toronto Laboratory for Social Neuroscience (Kogan mentioned to the committee earlier this week he had also passed some of the Facebook data to a fellow academic in Canada).

Asked whether any payments had been made between Facebook and Kogan as part of the contract, Schroepfer said: “I believe there was no payment involved in this at all.”

‘Radical’ transparency is its fix for regulation

Other issues raised by the committee included why Facebook does not provide an overall control or opt-out for political advertising; why it does not offer a separate feed for ads but chooses to embed them into the Newsfeed; how and why it gathers data on non-users; the addictiveness engineered into its product; what it does about fake accounts; why it hasn’t recruited more humans to help with the “challenges” of managing content on a platform that’s scaled so large; and aspects of its approach to GDPR compliance.

On the latter, Schroepfer was queried specifically on why Facebook had decided to shift the data controller of ~1.5BN non-EU international users from Ireland to the US. On this he claimed the GDPR’s stipulation that there be a “lead regulator” conflicts with Facebook’s desire to be more responsive to local concerns in its non-EU international markets.

“US law does not have a notion of a lead regulator so the US does not become the lead regulator — it opens up the opportunity for us to have local markets have them, regions, be the lead and final regulator for the users in that area,” he claimed.

Asked whether he thinks the time has come for “robust regulation and empowerment of consumers over their information”, Schroepfer demurred on whether new regulation is needed to control data flowing over consumer platforms. “Whether, through regulation or not, making sure consumers have visibility, control and can access and take their information with you, I agree 100%,” he said, agreeing only to further self-regulation, not to the need for new laws.

“In terms of regulation there are multiple laws and regulatory bodies that we are under the guise of right now. Obviously the GDPR is coming into effect just next month. We have been regulated in Europe by the Irish DPC who’s done extensive audits of our systems over multiple years. In the US we’re regulated by the FTC, Privacy Commissioner in Canada and others. So I think the question isn’t ‘if’, the question is honestly how do we ensure the regulations and the practices achieve the goals you want. Which is consumers have safety, they have transparency, they understand how this stuff works, and they have control.

“And the details of implementing that is where all the really hard work is.”

His stock response to the committee’s concerns about divisive political ads was that Facebook believes “radical transparency” is the fix — also dropping one tidbit of extra news on that front in his written testimony by saying Facebook will roll out an authentication process for political advertisers in the UK in time for the local elections in May 2019.

Ads will also be required to be labeled as “political” and disclose who paid for the ad. And there will be a searchable archive — available for seven years — which will include the ads themselves plus some associated data (such as how many times an ad may have been seen, how much money was spent, and the kinds of people who saw it).

Collins asked Schroepfer whether Facebook’s ad transparency measures will also include “targeting data” — i.e. “will I understand not just who the advertiser was and what other adverts they’d run but why they’d chosen to advertise to me”?

“I believe among the things you’ll see is spend (how much was spent on this ad); you will see who they were trying to advertise to (what is the audience they were trying to reach); and I believe you will also be able to see some basic information on how much it was viewed,” Schroepfer replied — avoiding yet another straight answer.