Creating avatars. Who’s got time for it?! Computers, that’s who. You’ll never have to waste another second selecting your hair style, skin tone or facial hair length if this research from Facebook finds its way into product form.

In a paper (PDF) presented at the International Conference on Computer Vision, Lior Wolf et al. describe a machine learning system that finds the best possible match between your real face and one from a custom emoji generator.

You may be thinking: Wait, didn’t Google do this earlier in the year? Yes, kind of. But there’s a critical difference. Google’s version, while cool, used humans to rate and describe various features found in common among various faces: curly hair, nose types, eye shapes. These were then illustrated (quite well, I thought) as representations of that particular feature.

Essentially, the computer looks for the tell-tale signs of a feature like freckles, then grabs the corresponding piece of art from its database. It works, but it’s largely reliant on human input for defining the features.

Facebook’s approach was different. The idea being pursued was a system that really makes the best possible representation of a given face, using whatever tools it has at hand. So whether it’s emoji, Bitmoji (shudder), Mii, a VR face generator or anything else, it could still accomplish its task. To paraphrase the researchers, humans do it all the time, so why not AI?

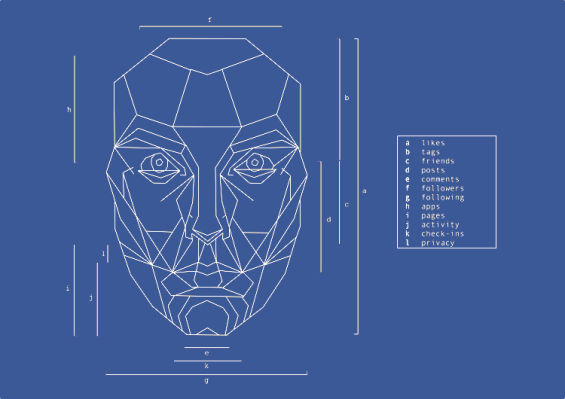

The system accomplishes this (to some degree) by running both the face and the generated representation through the same analysis and feature identification algorithm, as if they were simply two pictures of the same person. When the numbers it produces for the two are as close as they seem likely to get, the two are considered visually similar to a sufficient degree. (At some point with these cartoon faces it isn’t going to get much better.)
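For the curious, here is a toy sketch of that matching idea in Python. It is not the researchers' code. The `embed` and `render` functions are hypothetical stand-ins for the shared feature extractor and the avatar generator, the "images" are just short tuples of numbers, and the brute-force search over every configuration is an assumption made for simplicity rather than the method described in the paper.

```python
import math
from itertools import product


def embed(image):
    """Hypothetical stand-in for the shared feature extractor.

    The same function is applied to the photo and to every rendered avatar.
    Here an "image" is a tuple of numbers, which we scale to unit length.
    """
    norm = math.sqrt(sum(x * x for x in image)) or 1.0
    return tuple(x / norm for x in image)


def render(config):
    """Hypothetical stand-in for the avatar generator.

    Turns a (hair, skin, beard) choice into a toy "image".
    """
    hair, skin, beard = config
    return (float(hair), float(skin), float(beard))


def distance(a, b):
    """Euclidean distance between two feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def best_avatar(photo, options):
    """Try every avatar configuration and keep the one whose features
    land closest to the photo's features."""
    target = embed(photo)
    return min(
        product(*options),
        key=lambda cfg: distance(embed(render(cfg)), target),
    )


# Made-up option lists: hair style, skin tone, beard (0 = none, 1 = beard).
options = [(1, 2, 3), (1, 2, 3), (0, 1)]
photo = (2.1, 2.9, 0.2)  # made-up "photo" measurements

choice = best_avatar(photo, options)
print(choice)
```

The point of the design is that nothing in `best_avatar` knows what hair or a beard is. Swap in a different `render` (Mii, VR head, whatever) and the same comparison still applies, as long as the feature extractor can score both kinds of picture.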

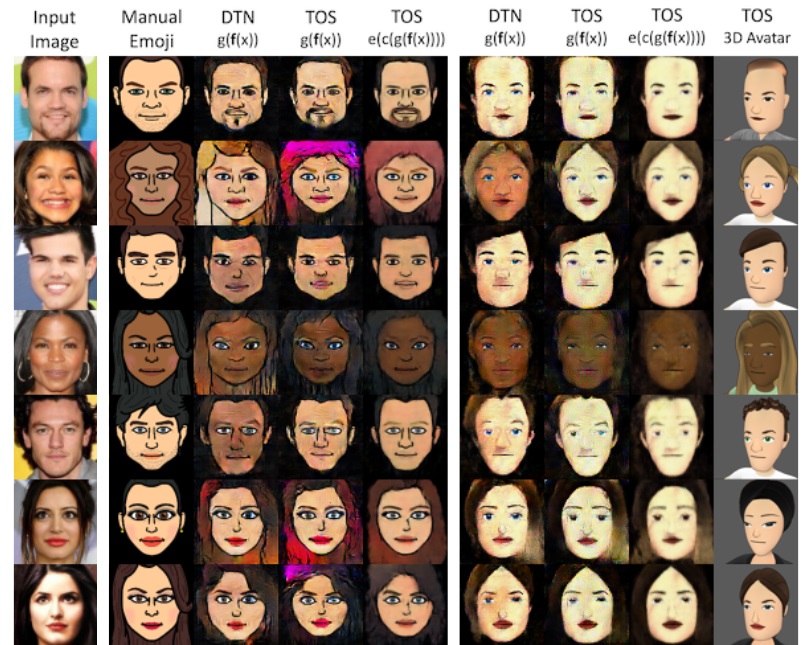

In this figure from the paper, the source images are at left, then manually configured emoji (not used in the system, just for comparison), then attempts by several variations of the algorithm, then similar attempts in a 3D avatar system.

What’s great about this technique is that because it isn’t tied to any particular avatar type, it works (theoretically) on any of them. As long as there are good representations and bad ones, the system will match them with the actual face and figure out which is which.

Facebook could use this information for many useful purposes, perhaps most immediately a bespoke emoji system. It could even update automatically when you put up a picture with a new haircut or trimmed beard. But the avatar-matching work could also be done for other sites: sign in to a VR game with Facebook and have it immediately create a convincing version of yourself. And plenty of people out there surely wouldn’t mind if, at the very least, their emoji defaulted to their actual skin color instead of yellow.

The full paper is pretty technical, but it was presented at an AI conference, so that’s to be expected.