The reveal of Google Lens at I/O was one of the most exciting moments of the developer conference, with the tool promising to be a new type of visual browser that identifies the world around users and gives them easy access to a web of information and context.

I’ve gotten to take a look at a beta of Lens on the Pixel 2 XL, and it’s clear that we’re a long way from realizing its true utility. That’s perfectly fine; massive leaps in tech capability are very rare, and computer-vision-powered identification has always struggled in less-than-ideal conditions. The issue is that Google is pitching a computer vision tool with few practical consumer uses as a fairly significant selling point of its flagship device.

Lens has a lot of potential, but it’s going to take time, and it’s not immediately clear why Google rushed this one out of the gate. Assistant obviously hasn’t been perfect since launch, and there’s still a lot it can’t do, but users can figure out which queries it’s helpful with and move on from there. That approach is harder with Lens, because the ideal conditions it needs in order to function as intended are narrower and harder to pin down.

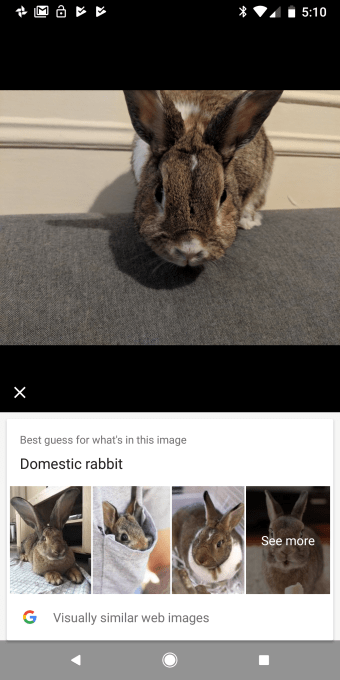

In the few days I’ve had access to Lens (currently available in the Google Photos app and coming later to the camera), it hasn’t proven itself an indispensable feature by any means. Still, it’s clear that with more fine-tuning, Google’s efforts to inject machine learning into user-friendly processes are going to lead to some very important advances in how we interact with the world digitally.

Google’s approach at launch doesn’t seem to be as much about discovery as about simply touting that the Pixel 2 can come to the same realizations with AI that we can. In the grand scheme of things this is amazing, but… it isn’t all that useful.

When it came to identifying things like albums or books, Lens performed at about the same level as the visual search in Amazon’s mobile app, which already works on a live camera view, a capability Google won’t be launching with on the Pixel 2. Even the “Now Playing” feature was generally only able to identify fairly obvious songs, undercutting the main reason to use a tool like Shazam in the first place: discovering those hidden-gem songs on the tip of your tongue.

While AR platforms like ARKit and ARCore make it seem like we’re closing in on some really awesome use cases, efficiently finding a stable plane to place digital objects on, or sensing the geometry of a space, is small potatoes compared to extracting meaningful information about what’s actually in view. Lens shows just how far we have to go before users get real utility out of some of these features.

Right now, AI-powered assistants are largely defined by what they stumble on. Siri is the product of what it can’t do as much as what it can. It’s practically a given that the favorite answer of Google Home and Amazon Echo is “Hmm, I didn’t quite get that,” but we expect them to be fairly dumb. Lens is no different. The payoff from Google intimately integrating AI tools into our lives won’t be immediate, and for a long, long while it’s going to feel fairly gimmicky. The issue is that Google doesn’t seem to be doing much to temper expectations about what these tools can and can’t deliver; it’s happy to convince you that the future is built into its latest flagship phone, albeit a future in beta.

There are a lot of good reasons to go for the Pixel 2, most of them having to do with the camera, but Lens shouldn’t be part of the consideration. It’s a bet on the future with a long road ahead of it.