If voice-based assistants are to become the primary way we interact with our devices, then Google has just upped the ante in the battle between its own voice search functionality and that of rival mobile assistants like Apple’s Siri or Microsoft’s Cortana. While Google’s app has historically done a better job at understanding its users and answering queries accurately, the company says today that its app has also gotten better at understanding the meaning behind users’ questions.

That is, the app has been improved so that it better understands natural language and more complex questions.

The company first rolled out voice search in 2008, and later tied that into its Knowledge Graph in 2012, in order to answer users’ questions with factual information about people, places or things. At first, Google explains, it was able to provide information on entities like “Barack Obama,” and later it could answer questions like “How old is Stan Lee?”

Its ability to answer questions improved again after a while, as it learned to understand words in different contexts. For instance, if you asked what ingredients were in a screwdriver, Google knew you meant the drink, not the tool.

Now Google says the voice assistant baked into the Google app is able to understand the meaning of what you’re asking, so you’ll be able to ask more complex questions than in the past. To do so, Google says, it breaks your query down into its pieces to better understand the semantics of each one.

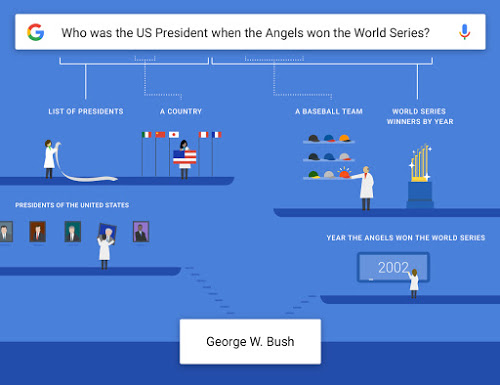

That means you could ask Google a question like “Who was the U.S. President when the Angels won the World Series?” and it could respond, “George Bush.”

Though any person could easily understand such a question, getting a machine to do the same is more of a feat. The system has to determine that the “Angels” are a baseball team, figure out when that team won a particular championship (“the World Series”), then check the year of that win against a list of presidents for a given country (“U.S.”).
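To make those three steps concrete, here is a minimal sketch in Python. It is not Google’s implementation, which the company has not published in this detail. The lookup tables, the function names `president_in` and `president_when_team_won`, and the year-level date matching are all assumptions made for illustration.

```python
from typing import Optional

# Toy "knowledge graph" facts, hard-coded for illustration only.
ENTITY_TYPES = {"Angels": "baseball team"}
WORLD_SERIES_WINS = {"Angels": [2002]}
US_PRESIDENTS = [
    # (name, first year in office, year the term ended)
    ("Bill Clinton", 1993, 2001),
    ("George W. Bush", 2001, 2009),
    ("Barack Obama", 2009, 2017),
]


def president_in(year: int) -> Optional[str]:
    """Return the president in office for a given year.

    Simplification: matches on whole years, so a handover year is
    credited to the incoming president.
    """
    for name, start, end in US_PRESIDENTS:
        if start <= year < end:
            return name
    return None


def president_when_team_won(team: str) -> Optional[str]:
    """Answer 'Who was the U.S. President when <team> won the World Series?'"""
    # Step 1: resolve the entity. Is this name a baseball team?
    if ENTITY_TYPES.get(team) != "baseball team":
        return None
    # Step 2: find the year(s) in which the team won the World Series.
    years = WORLD_SERIES_WINS.get(team, [])
    if not years:
        return None
    # Step 3: check that year against the list of U.S. presidents.
    return president_in(years[0])


print(president_when_team_won("Angels"))
```

Each step depends on the result of the one before it, which is why a plain keyword match on the full question cannot produce the answer.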

This “complex combination” is just one of Google’s new abilities, the company says. It can also answer more complicated queries about a point in time (e.g. “What songs did Taylor Swift record in 2014?”), as well as those that involve superlatives (e.g. “What are the largest cities in Texas?”).

In the former case, that means you can ask Google questions that have dates in them and it will answer better than it did in the past. And in the latter case, Google now understands superlatives like “tallest,” “largest,” and ordered items.
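A superlative query can be thought of as a filter followed by a sort. The sketch below is only an illustration of that idea, not a description of how Google does it. The `CITIES` table, its rough rounded population figures (in millions) and the function name `largest_cities` are assumptions introduced for the example.

```python
# Hypothetical sample data: (city, state, population in millions).
# Figures are rough, rounded values included only for illustration.
CITIES = [
    ("Austin", "Texas", 0.8),
    ("Los Angeles", "California", 3.8),
    ("Houston", "Texas", 2.1),
    ("Dallas", "Texas", 1.2),
    ("San Antonio", "Texas", 1.3),
]


def largest_cities(state: str, limit: int = 3) -> list[str]:
    """Answer 'What are the largest cities in <state>?'"""
    # Restrict to the entity set named in the question ("in Texas").
    in_state = [city for city in CITIES if city[1] == state]
    # "Largest" maps to ordering by population, biggest first.
    ranked = sorted(in_state, key=lambda city: city[2], reverse=True)
    # Return an ordered list of names, cut off at the requested length.
    return [name for name, _, _ in ranked[:limit]]


print(largest_cities("Texas"))
```

The important part is the mapping from the word “largest” to a specific attribute and sort direction. A keyword search has no notion of either.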

If you tried to ask questions like these before, Google would have only returned search results matching the keywords, without properly identifying which entities you were really asking about, the company tells us. In other words, this is the beginning of Google becoming a true semantic search engine – one that understands, as humans naturally and easily do, what a person really means when they ask a question.

But Google says it’s still working on the technology, which is not perfect. There will be some questions it doesn’t quite answer correctly for the time being.

In a blog post detailing the news, Google offers an example of the kind of mistake it will still make, saying that if you ask “Who was Dakota Johnson’s mom in the movie?” it would respond with Dakota Johnson’s real-life mother, Melanie Griffith. The answer the user wanted, however, was the actor Jennifer Ehle, who played Anastasia’s mother Carla in the “Fifty Shades of Grey” movie.

That being said, the ability to speak more naturally and ask complicated questions puts Google’s voice search technology quite a bit ahead of its rivals, which still sometimes struggle to understand simple questions and often just dump you to a keyword search on the web when they fail.

For Google, bettering its voice search isn’t just an academic challenge – it can have a real impact on the company’s bottom line. The more people rely on Google, the more they’ll continue to use its site and services, and the more they’ll be exposed to ads. That’s especially important as mobile phones become our primary computers and users shift an increasing portion of their web usage from the browser to native apps. In addition, Google’s voice search technology is integrated deeply into its own mobile platform, Android, as one of the competitive advantages it offers over Apple’s iPhone and iOS operating system.

The improved voice search is rolling out now on the company’s flagship Google application for both iOS and Android.