Movidius isn’t exactly a household name (yet), but chances are you came across the company when Google launched its Project Tango smartphones and tablets earlier this year. Those devices are Google’s testbeds for seeing what developers can achieve when mobile devices have advanced 3D sensing, and Movidius provided the specialized vision processor inside them.

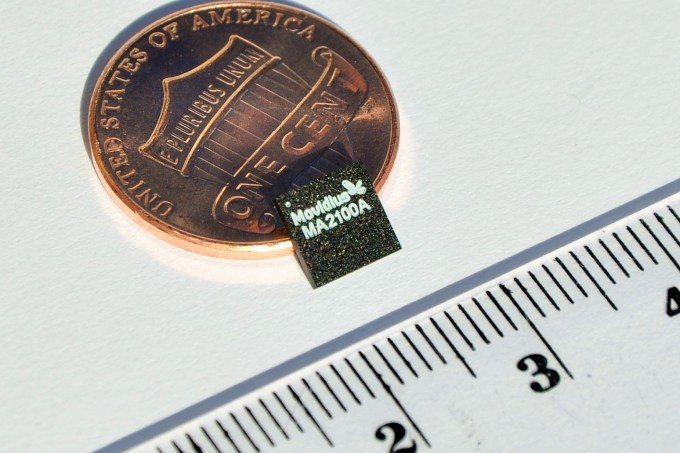

Movidius made its public debut with Project Tango, but at the time, the company was already working on the second version of its chip. The Myriad 2 is a much more energy-efficient and powerful version of the original chip.

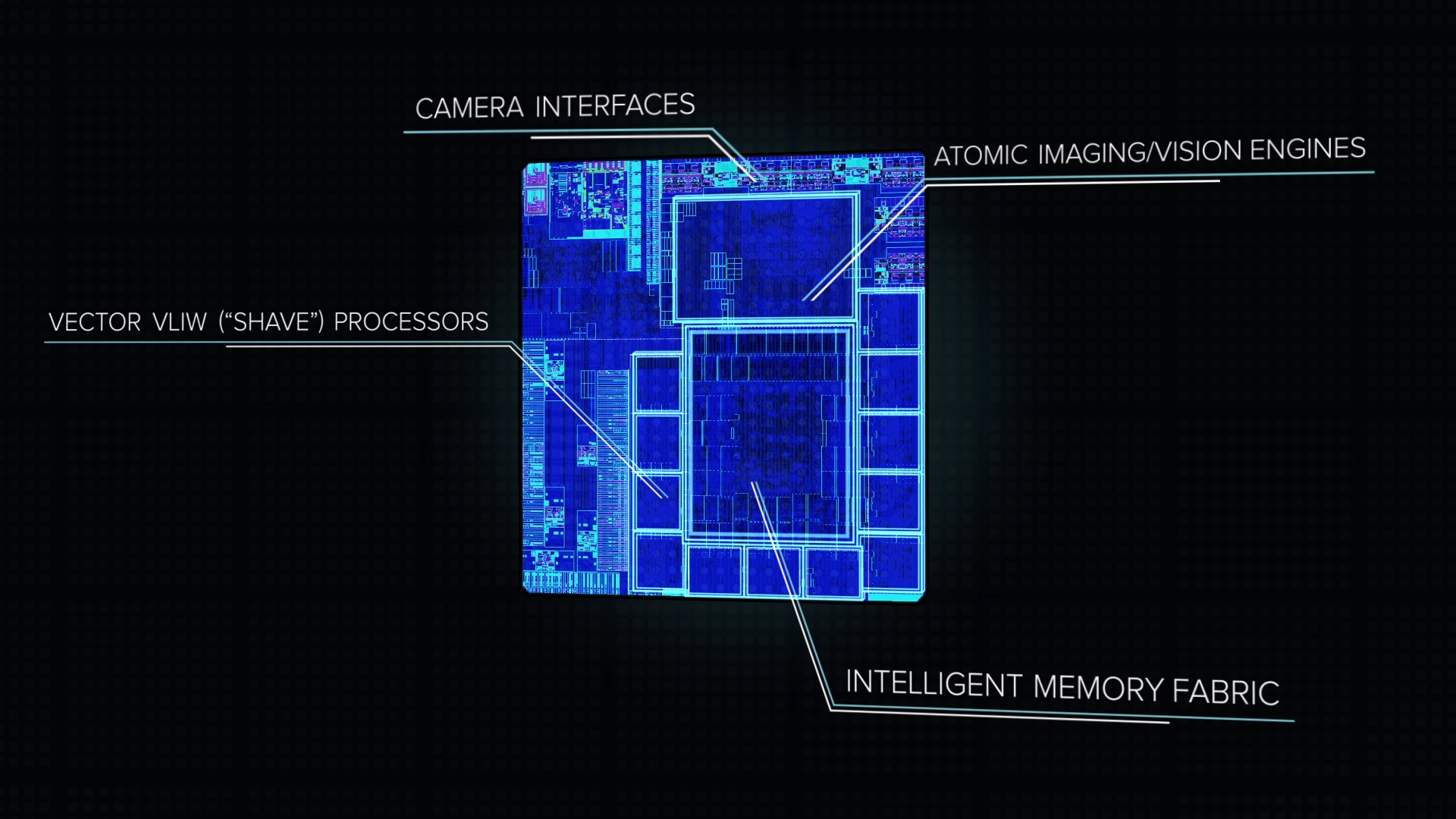

As Movidius CEO Remi El-Ouazzane told me earlier this month, the new version is based on what he called a “radically improved architecture” and a new memory architecture, which together deliver 20 times more processing efficiency per watt than the Myriad 1. The chip can reach about 3 teraflops of computing power while drawing about half a watt. The 28-nanometer chip features 12 programmable vector processors and can handle up to six full HD inputs at 60 frames per second.
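To put those figures in perspective, here is a rough back-of-the-envelope calculation in Python. It simply takes the rounded numbers El-Ouazzane quoted (about 3 teraflops, about half a watt, six 1080p streams at 60fps) at face value; real workloads won't run at peak throughput, so treat the results as a theoretical ceiling rather than a benchmark.

```python
# Back-of-the-envelope figures derived from the rounded Myriad 2 numbers quoted above.
# These are approximations, not vendor specifications or measured results.

PEAK_TFLOPS = 3.0                    # "about 3 teraflops", as quoted
POWER_W = 0.5                        # "about half a watt", as quoted
STREAMS = 6                          # up to six simultaneous camera inputs
WIDTH, HEIGHT, FPS = 1920, 1080, 60  # full HD at 60 frames per second


def tflops_per_watt(tflops: float, watts: float) -> float:
    """Peak compute divided by power draw."""
    return tflops / watts


def pixel_rate(streams: int, width: int, height: int, fps: int) -> int:
    """Total pixels arriving per second across all input streams."""
    return streams * width * height * fps


def flops_per_pixel(tflops: float, pixels_per_second: int) -> float:
    """Theoretical peak operations available for each incoming pixel."""
    return tflops * 1e12 / pixels_per_second


efficiency = tflops_per_watt(PEAK_TFLOPS, POWER_W)
pixels = pixel_rate(STREAMS, WIDTH, HEIGHT, FPS)
budget = flops_per_pixel(PEAK_TFLOPS, pixels)

print(f"{efficiency:.1f} TFLOPS per watt")
print(f"{pixels:,} pixels per second")
print(f"~{budget:,.0f} FLOPs per pixel at peak")
```

Under these assumptions, the quoted peak works out to about 6 teraflops per watt, and six full HD 60fps streams add up to roughly 746 million pixels per second, which leaves a theoretical budget of about 4,000 floating-point operations per pixel.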

According to El-Ouazzane, support for that many simultaneous inputs was one of the lessons the company learned from its work with Project Tango: its customers were asking for the ability to pull in data from more sources at once.

As El-Ouazzane rightly argues, though, what’s even more exciting than the chips themselves are the user experiences they will enable in the long run. For Project Tango, the focus was on 3D sensing, but one area El-Ouazzane is especially excited about is computational photography, which combines inputs from multiple cameras on a phone for a more DSLR-like experience and photo quality. “This will enable mobile devices to cross the chasm between point-and-shoot camera and SLR quality,” he told me.

Devices that use Movidius’s processor could feature faster autofocus, for example, or include an infrared sensor to extract more information from a scene and combine it with data from the device’s other photo sensors. That takes considerably more computational power than the chips in today’s phones can offer, so Movidius is positioning its products for this space, too.

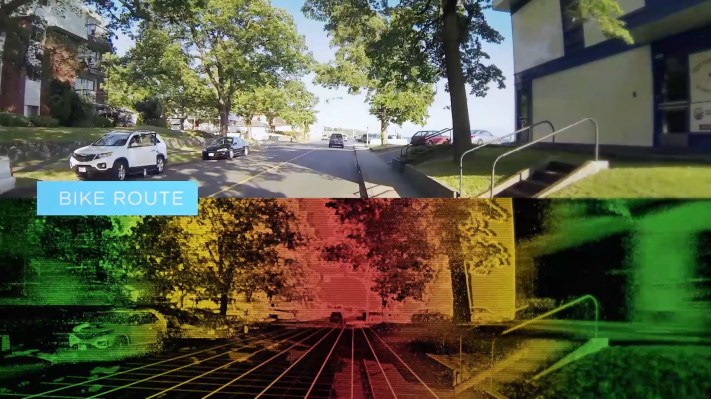

Here is what Mantis Vision — another partner in Google’s Project Tango — believes this kind of experience could look like:

That’s just one use case for Movidius’s chips, of course. El-Ouazzane believes that for at least the next year or so, the company will focus mainly on mobile devices and 3D sensing for gaming, indoor navigation and similar experiences.

This is something on the minds of many smartphone OEMs. While Amazon’s Fire Phone received middling reviews, El-Ouazzane rightly pointed out to me that its launch event featured a CEO who spent quite a bit of time talking about computer vision.

In the long run, Movidius expects to see vision processors like the Myriad 2 and its successors in everything from social robots to autonomous drones and cars.

The only way it can get there, however, is by building an ecosystem around its processors that includes not just OEMs but also developers. To spur the creation of this developer community, the company today also released its Myriad Development Kit (MDK), a development environment with tools and frameworks for building applications around its vision-processing chips. The kit comes with a full reference board and support for multiple cameras and sensors. Sadly, it’s only available to a select group of developers for now, and they will have to sign an NDA to get access, so we likely won’t hear much about it anytime soon.