Facebook’s Safety Check feature was activated today, following news that a fire had engulfed a 24-storey block of flats in West London. At least six people are reported to have died in the blaze, with police expecting the death toll to rise. The building, Grenfell Tower, contains 120 flats.

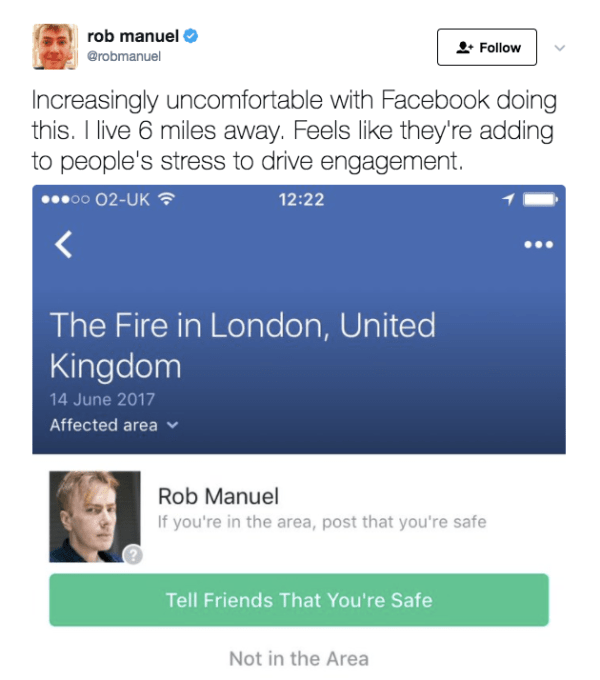

Clearly this is a tragedy. But should Facebook be reacting to a tragedy by sending push alerts — including to users who are miles away from the building in question?

Is that helpful? Or does it risk generating more stress than it is apparently supposed to relieve?

Being six miles away from a burning building in a city with a population of circa 8.5 million should not be a cause for worry — yet Facebook is actively encouraging users to worry by using emotive language (“your friends”) to nudge a public declaration of individual safety.

And if someone doesn’t take action to “mark themselves safe”, as Facebook puts it, they risk their friends thinking they are somehow — against all rational odds — caught up in the tragic incident.

Those same friends would likely not have even thought to consider there was any risk prior to the existence of the Facebook feature.

This is the paradoxical panic of ‘Safety Check’.

(A paradox Facebook itself has tacitly conceded even extends to people who mark themselves “safe” and then, by doing so, cause their friends to worry they are still somehow caught up in the incident — yet instead of retracting Safety Check, Facebook is now retrenching; bolting on more features, encouraging users to include a “personal note” with their check mark to contextualize how nothing actually happened to them… Yes, we are really witnessing feature creep on something that was billed as apparently providing passive reassurance… O____o )

Here’s the bottom line: London is a very large city. A blaze in a tower block is terrible, terrible news. It is also very, very unlikely to involve anyone who does not live in the building. Yet Facebook’s Safety Check algorithm is apparently unable to make anything approaching a sane assessment of relative risk.

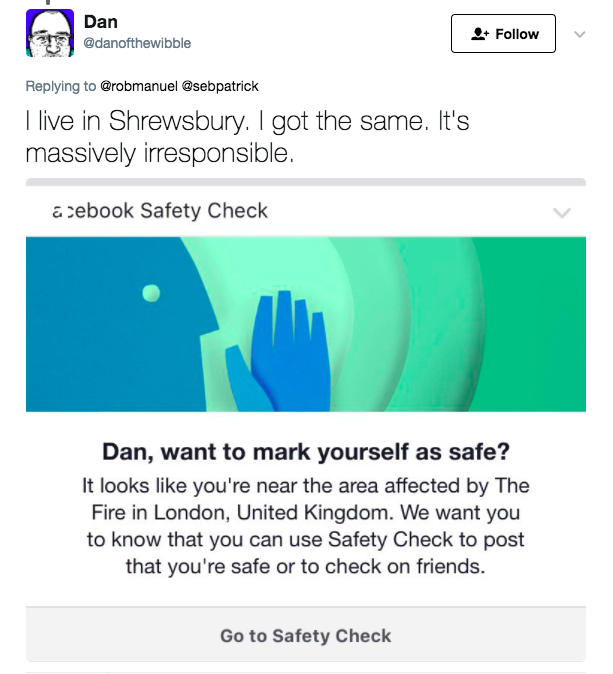

To compound matters, the company’s reliance on its own demonstrably unreliable geolocation technology to determine who gets a Safety Check prompt results in it spamming users who live hundreds of miles away — in totally different towns and cities (even apparently in different countries) — pointlessly pushing them to push a Safety Check button.

This is indeed — as one Facebook user put it on Twitter — “massively irresponsible”.

As Tausif Noor has written, in an excellent essay on the collateral societal damage of a platform controlling whether we think our friends are safe or not, by “explicitly and institutionally entering into life-and-death matters, Facebook takes on new responsibilities for responding to them appropriately”.

And, demonstrably, Facebook is not handling those responsibilities very well at all, not least by stepping away from making evidence-based, case-by-case decisions about whether or not to activate Safety Check.

The feature did start out as something Facebook manually switched on. But Facebook soon abandoned that decision-making role (sound familiar?) — including after facing criticism of Western bias in its assessment of terrorist incidents.

Since last summer, the feature has been so-called ‘community activated’.

What does that mean? It means Facebook relies on the following formula for activating Safety Check: First, global crisis reporting agencies NC4 and iJET International must alert it that an incident has occurred and give the incident a title (in this case, presumably, “the fire in London”); and secondly there has to be an unspecified volume of Facebook posts about the incident in an unspecified area in the vicinity of the incident.
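To make the two-part formula concrete, here is a minimal sketch of how such a trigger could be wired up. It is an illustration only: Facebook has not disclosed its criteria, so the `Incident` type, the `should_activate` function and the `MIN_LOCAL_POSTS` threshold are all hypothetical names and values, not anything the company has confirmed.

```python
from dataclasses import dataclass

# Hypothetical threshold: Facebook has not said how many posts are needed.
MIN_LOCAL_POSTS = 1000


@dataclass
class Incident:
    title: str                # title supplied by the agency, e.g. "the fire in London"
    reported_by_agency: bool  # True once a crisis reporting agency has flagged it
    local_post_count: int     # posts about the incident from the (unspecified) nearby area


def should_activate(incident: Incident, min_posts: int = MIN_LOCAL_POSTS) -> bool:
    """Both conditions must hold: an agency alert AND enough nearby chatter."""
    return incident.reported_by_agency and incident.local_post_count >= min_posts


# An agency alert plus heavy local posting flips the switch...
print(should_activate(Incident("the fire in London", True, 5000)))
# ...while chatter alone, with no agency alert, does not.
print(should_activate(Incident("unverified rumour", False, 5000)))
```

Note what is absent from a rule of this shape: nothing in it weighs how likely any individual user is to have been affected.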

It is unclear how near an incident area a Facebook user has to be to trigger a Safety Check prompt, or how many posts relating to the incident they have to have personally posted. We’ve asked Facebook for more clarity on its algorithmic criteria but have (as yet) received none.

NC4 and iJET International also declined to provide specific details of how they work with Facebook to power the feature, with the latter company telling us: “This is proprietary information.”

Putting Safety Check activation in this protective, semi-algorithmic swaddling means the company can cushion itself from blame when the feature is (or is not) activated — since it’s not making case-by-case decisions itself — yet also (apparently) sidestep the responsibility for its technology enabling widespread algorithmic stress. As is demonstrably the case here, where it’s been activated across London and beyond.

People talking about a tragedy on Facebook seems a very noisy signal indeed on which to base a push notification nudging users to make individual declarations of personal safety.

Add to that, as we can see from how hit and miss the London fire-related prompts are, Facebook’s geolocation smarts are very far from perfect. If your margin of location-positioning error extends to triggering alerts in other cities hundreds of miles away (not to mention other countries!) your technology is very clearly not fit for purpose.

Even six miles in a city of ~8.5M people indicates a ridiculously blunt instrument being wielded here. Yet one that also has an emotional impact.

The wider question is whether Facebook should be seeking to control user behavior by manufacturing a featured ‘public safety’ expectation at all.

There is zero need for a Safety Check feature. People could still use Facebook to post a status update saying they’re fine if they feel the need to — or indeed, use Facebook (or WhatsApp or email etc) to reach out directly to friends to ask if they’re okay — again if they feel the need to.

But by making Safety Check a default expectation Facebook flips the norms of societal behavior and suddenly no one can feel safe unless everyone has manually checked the Facebook box marked “safe”.

This is ludicrous.

Facebook itself says Safety Check has been activated more than 600 times in two years — with more than a billion “safety” notifications triggered by users over that period. Yet how many of those notifications were really merited? And how many salved more worries than they caused?

It’s clear the algorithmically triggered Safety Check is a far more hysterical creature than the manual version. Last November CNET reported that Facebook had only turned on Safety Check 39 times in the prior two years vs 335 events being flagged by the community-based version of the tool since it had started testing it in June.

The problem is social media is intended as — and engineered to be — a public discussion forum. News events demonstrably ripple across these platforms in waves of public communication. Those waves of chatter should not be misconstrued as evidence of risk. But it sure looks like that’s what Facebook’s Safety Check is doing.

While the company likely had the best of intentions in developing the feature, which after all grew out of organic site usage following the 2011 earthquake and tsunami in Japan, the result at this point looks like an insensible hair-trigger that encourages people to overreact to tragic events when the sane and rational response would actually be the opposite: stay calm and don’t worry unless you hear otherwise.

Aka: Keep calm and carry on.

Safety Check also compels everyone, willing or otherwise, to engage with a single commercial platform every time some kind of major (or relatively minor) public safety incident occurs — or else worry about causing unnecessary worry for friends and family.

This is especially problematic when you consider Facebook’s business model benefits from increased engagement with its platform. Add to that, it also recently stepped into the personal fundraising space. And today, as chance would have it, Facebook announced that Safety Check will be integrating these personal fundraisers (starting in the US).

An FAQ for Facebook’s Fundraisers notes that the company levies a fee for personal donations of 6.9% + $0.30, while fees for nonprofit donations range from 5% to 5.75%. (The company claims these fees are to cover credit card processing and to fund internal teams conducting fraud review of fundraisers.)
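For a sense of scale, the quoted rates can be worked through on a single donation. The sketch below assumes the fee is charged per donation and rounded to the nearest cent, which the FAQ figures quoted here do not spell out; the function names and the $100 amount are illustrative only.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")


def personal_fee(amount: Decimal) -> Decimal:
    """Fee on a personal fundraiser donation: 6.9% plus a flat $0.30."""
    fee = amount * Decimal("0.069") + Decimal("0.30")
    return fee.quantize(CENT, rounding=ROUND_HALF_UP)


def nonprofit_fee_range(amount: Decimal) -> tuple[Decimal, Decimal]:
    """Lowest and highest fee on a nonprofit donation: 5% to 5.75%."""
    low = (amount * Decimal("0.05")).quantize(CENT, rounding=ROUND_HALF_UP)
    high = (amount * Decimal("0.0575")).quantize(CENT, rounding=ROUND_HALF_UP)
    return low, high


donation = Decimal("100")
print(personal_fee(donation))         # 7.20
print(nonprofit_fee_range(donation))  # (Decimal('5.00'), Decimal('5.75'))
```

On those assumptions, a $100 personal donation would carry a $7.20 fee, against $5.00 to $5.75 for a nonprofit one.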

It’s not clear whether Facebook will be levying the same fee structure on Fundraisers that are specifically associated with incidents where Safety Check has also been triggered — we’ve asked but at the time of writing the company had not responded.

If so, Facebook is directly linking its behavioral nudging of users, via Safety Check, with a revenue generating feature that will let it take a cut of any money raised to help victims of the same tragedies. That makes its irresponsibility in apparently encouraging public worry look like something rather more cynically opportunistic.

Checking in on my own London friends, Facebook’s Safety Check informs me that three are “safe” from the tower block fire.

However 97 are worryingly labelled “not marked as safe yet”.

The only sane response to that is: Facebook Safety Check, close your account.

This post was updated with comment from iJET International and with additional detail about Facebook’s Fundraiser feature.