The other day I was writing an article and I wanted to link to a piece I wrote when I was at CITEworld in 2013, just two years ago. I went searching for it, but soon discovered that IDG, the publication’s owner, had taken the site down, and all of its content with it. While this is one small example, it illustrates the issues we have around content preservation on the internet.

In fact, on a personal level, much of my writing from this entire century is simply gone. I could have preserved each article I’ve written, of course, if I could keep up and remember to do it. Evernote or a similar service provides a way for me to capture web pages, but the archiving onus shouldn’t be on individuals like me.

If the internet is at its core a system of record, then it is failing to complete that mission. Sometime in 2014, the internet surpassed a billion websites; while it has since fallen back a bit, it’s quite obviously an enormous repository. When websites disappear, all of the content is just gone, as though it never existed, and that can have a much bigger impact than you imagine on researchers, scholars or any Joe or Josephine Schmo simply trying to follow a link.

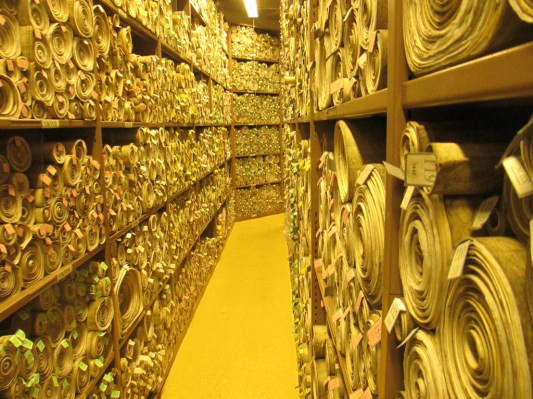

Granted, some decent percentage of those pages probably aren’t worth preserving (and some are simply bad information), but that really shouldn’t be our call. It should all be automatically archived, a digital Library of Congress to preserve and protect all of the content on the internet.

Don’t Expect Publishers To Do It

As my experience shows, you can’t rely on publishers to keep a record. When it no longer serves a website owner’s commercial purposes, the content can disappear forever. The trouble with that approach is it leaves massive holes in the online record.

Cheryl McKinnon, an analyst with Forrester Research who covers content management, has been following this issue for many years. She says the implications of lost content on the internet could be quite profound.

“So much of our communication and content consumption now – whether as individuals, as citizens, as employees – is online and in digital form. We rely on publishers (whether entertainment, corporate, scientific, political) that have moved to predominantly, if not exclusively, digital formats. Once gone or removed from online access we incur black holes in our [online] memory,” she said.

This leads directly to another problem: link rot. You click a link to an article and get a message that the page is gone or you get directed to an entirely different site from the same content owner. This has actually become a serious issue, McKinnon said. “The footnotes and backup evidence we’ve been relying on for the last decade is very precarious and subject to the [whim of] third parties hosting it,” she said.

In fact, in 2013 the ABA Journal, a publication for legal professionals, reported that link rot had become so widespread that it was having an enormous impact on legal research. A 2013 study “published in the Yale Journal of Law and Technology, found that nearly one-third of the websites cited by the U.S. Supreme Court were nonfunctioning, many of which linked to government or education domains,” the ABA article reported.

And it’s not just law. This online amnesia can extend across disciplines, says Jason Scott, Free Range Archivist at the Internet Archive. “This [Internet] memory loss extends to science, law, education, and all sorts of other cultural aspects that depend on referencing a stable and informative past to build our knowledge for the present. The loss is pernicious, because we don’t immediately notice it; it’s only over time we realize what we have lost,” he said.

The Internet Archive Offers A Partial Answer

It’s not all bleak, however. The organization Scott works for, the Internet Archive, is attempting to capture at least a snapshot of the content on the internet. I’ve found some of my CITEworld content on its Wayback Machine, the archive it keeps of web pages dating back to its earliest days.
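For readers who want to check whether a vanished page survives in the Wayback Machine, here is a minimal Python sketch. It assumes the Internet Archive's public availability endpoint (`https://archive.org/wayback/available`) and the JSON shape it returned at the time of writing; the function names and the example address are hypothetical, chosen only for illustration.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumption: the Internet Archive's public availability endpoint.
API = "https://archive.org/wayback/available"


def build_query(page_url, timestamp=None):
    """Build the lookup URL; timestamp is an optional YYYYMMDD-style hint."""
    params = {"url": page_url}
    if timestamp:
        params["timestamp"] = timestamp
    return f"{API}?{urlencode(params)}"


def closest_snapshot(payload):
    """Return the archived copy's URL from a decoded response, or None."""
    closest = payload.get("archived_snapshots", {}).get("closest")
    if closest and closest.get("available"):
        return closest.get("url")
    return None


def find_archived_copy(page_url, timestamp=None):
    """Ask the archive (over the network) for the snapshot nearest timestamp."""
    with urlopen(build_query(page_url, timestamp), timeout=10) as resp:
        return closest_snapshot(json.load(resp))
```

Calling `find_archived_copy("example.com/some-article", "20130601")` would return the address of the closest snapshot if one exists, or `None` if the page was never captured, which is exactly the gap this article is about.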

McKinnon calls the folks at Internet Archive “heroes” for what they are doing (and I agree). “The Internet Archive’s efforts to archive social media and other sites in shut-down mode are simply awe inspiring. They act when the founders/investors can’t or won’t,” she said.

Scott seems to recognize that it’s going to take more than his organization’s efforts, and that we need to formalize archiving as part of the website lifecycle. “Whether it’s laws, expectations, or serving by example; the ability to export and store one’s own generated data, and for companies to have shutdown policies that recognize they’ve left history, bold moves must be taken. In the meantime, it’s still somewhat luck and opportunity that saves sites before they disappear,” he said.

Finding More Permanent Solutions

The Internet Archive is a valiant effort, but it isn’t perfect. While it’s a trivial matter to find an archived site if you know its name, it’s more of a challenge to find individual authors or content by keyword, and the internal search tools of archived sites don’t seem to work.

McKinnon says there are companies and individuals working on this problem. As a couple of examples, she points to Preservica, which offers digital archiving solutions, and Hanzo, a company founded to commercialize web archiving technology.

In some cases, individual organizations have taken it upon themselves, as The Museum of Modern Art in New York is doing. It has a plan to archive all of its exhibition websites, dating back to its earliest attempts in 1995. This has taken a huge fundraising effort to make happen, however.

While all of these are game attempts, what we really need is an organization backed by the internet community, with the funding and tools to formalize the archiving and preservation process. This would allow every website that wants to participate to easily back up its content to a central repository with a few lines of code.

Nobody should experience what I did the other day: going to look for a page that’s only a couple of years old and finding that it has disappeared. Content preservation should not be the sole responsibility of individuals or businesses. We have to find a way to make it an official part of the coding process.

If we can send bots out to index the internet, it seems we should be able to find an automated technological solution to preserve content for future generations. At the very least, we are duty bound to try.