Every day throughout the United States, there are thousands of patients waiting for a compatible organ. Supply never meets demand, a function of our voluntary system of organ donation as well as the consistent ban on paid organs. Some patients may get lucky and get an organ just in time, while others never do.

Luck, though, is only a small part of the probability of receiving an organ. UNOS, the United Network for Organ Sharing, is the non-profit organization in charge of the Organ Procurement and Transplantation Network, which develops the policies and procedures for allocating this insufficient supply of organs.

These algorithms determine who lives. Sometimes, there may be only one compatible patient within the serviceable distance of a procured organ, and thus the algorithm does no work. In many heavily populated metropolitan areas, though, there are likely dozens or more people on organ waiting lists, and the algorithm’s role in the distribution of these prized objects becomes much more prominent.

Algorithms are not just determining who lives, but also who dies. The Defense Department, taking advantage of incredible advancements in autonomous technologies, is increasingly advocating for self-flying drones. The hope is that machine learning and better algorithms can reduce the human error in drone-based assassinations, potentially allowing these technologies to make the decision to shoot without human intervention.

Ever since the development of computers in the mid-20th century, algorithms have been used to increase business efficiency. Today, algorithms run our world. UPS and FedEx use algorithms to optimize the routes of their drivers, Procter & Gamble and Walmart use learning algorithms to ensure that sufficient products are on store shelves, and banks use algorithms not only for securities trading but also to predict cash flows.

These algorithms improve efficiency in the economic arena, a boon for all of us. However, algorithms have a very different meaning in the political sphere, where the optimal policy may be contested.

Organ allocation has been based on algorithms for years, and now warfare is becoming increasingly automated. Speeding and red-light cameras rely on algorithms that automatically ticket cars for violating traffic laws. Algorithms at the TSA determine who is “randomly” stopped for additional screenings at airports. These algorithms are becoming increasingly sophisticated, not just implementing a set of rules, but adapting to previous outcomes and improving.

As we move away from policy-based algorithms toward next-generation machine learning algorithms, we are increasingly transferring important political considerations away from human influence. This approach is at odds with democracy and the function of our elected representatives, and we must be wary of allowing political debates to be swept up by technical arguments.

Vote For Algo!

Politics may make for emotional conversation, but its utility is precisely that it forces us to confront issues and take a stand on them. Just take the debate underway right now about bulk collection of metadata in the U.S. Congress. This is a complicated issue, but its prominence in the current political debate means that journalists are heavily covering the story. Citizens are informed through media as well as their social networks, and can start to form their own opinion on the matter.

Politicians are an important vehicle for expressing different views in our democratic society. Since we utilize a republican form of government in the United States with indirect voting through representatives, politicians act as aggregators, ideally learning the preferences of the voters in their districts, and then attempting to vote on bills in the way that best represents those views.


Unsurprisingly, the reality is far from this ideal conception. Given the complexity of bills these days in Congress, many voters don’t know their own preferences on bills currently being debated on the House and Senate floors. Even if they have a general opinion, voters often lack strong conviction about specific elements, and can be swayed by the right rhetoric.

For politicians, there is an incredibly difficult balance between faithfully representing the views of their electorate and shaping those views to fit their own opinions.

Advocacy organizations are certainly aware of the incredible complexity of politics, and the difficulty that voters have in understanding different bills. That’s why there are so-called “scorecards” that rank legislators on a subset of votes that are considered crucial to their cause. The National Rifle Association, through its Political Victory Fund, grades nearly every politician in America at the national and state level.

There are now hundreds of scorecards just like this, for everything from environmental awareness to business regulation. One startup that I have written about called Quorum will even allow non-profits to quickly build new scorecards and populate them with data from its politics database.

These scorecards are algorithms. They take a set of criteria and assign a grade to each politician based on the input data. These scorecards have become widespread because of their ease of use as well as their effectiveness.
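To make concrete the sense in which a scorecard is an algorithm, here is a minimal sketch in Python. The bill names, the favored positions, and the grade cutoffs are all hypothetical, invented for illustration, and are not taken from any real organization's methodology.

```python
from typing import Dict

# Hypothetical key votes: bill id -> position the advocacy group favors.
KEY_VOTES: Dict[str, str] = {
    "bill_a": "yes",
    "bill_b": "no",
    "bill_c": "yes",
    "bill_d": "no",
}

# Hypothetical cutoffs: minimum share of matching votes -> letter grade,
# checked from the highest cutoff down.
CUTOFFS = [(0.9, "A"), (0.75, "B"), (0.5, "C"), (0.25, "D")]


def grade(record: Dict[str, str]) -> str:
    """Grade a legislator from a voting record (bill id -> "yes" or "no").

    A missing vote simply counts as not matching the favored position.
    """
    matches = sum(
        1 for bill, favored in KEY_VOTES.items() if record.get(bill) == favored
    )
    share = matches / len(KEY_VOTES)
    for minimum, letter in CUTOFFS:
        if share >= minimum:
            return letter
    return "F"
```

Everything the sketch knows about a legislator is a handful of yes-or-no values. Why a vote was cast, or what was traded for it, never enters the calculation.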

One reason (among others) for the increasing polarization of U.S. politics is the use of these sorts of algorithms in politics. Few politicians are going to risk voting on a bill that will lower their grade from a major advocacy organization, even if that is not the position they would otherwise take.

This is problematic because politics is an immensely complex space, but it is being represented by binary values. Either you vote yes or you vote no, often on a bill that is longer than the Encyclopaedia Britannica. That leaves politicians with almost no room to compromise, to hash out deals, or to come to a consensus that might make some progress today but not all of it. Instead, algorithms in politics today are absolutes.

Congress is increasingly polarized.

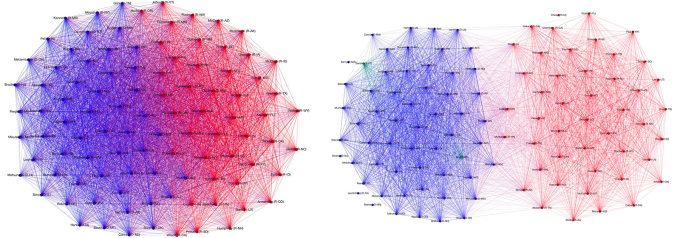

The results have been seen in any number of polarization graphs, but this is one visualization from Renzo Lucioni (also, see this rebuttal from another researcher). The connections between senators in the U.S. have declined significantly over the past few decades, to the point that there are clearly two sides to Washington when there was a rather amorphous network before.

Given that we are forcing the complexity out of politics, does it even make sense to vote for a human in office? Political votes are increasingly based around interest groups and polls rather than any form of human judgment. Wouldn’t the same argument the Pentagon uses around human error in drones also apply to legislators, or judges?

Algorithm 2.0

One reason that algorithms are preferred to humans is simply our own perception of the two. Algorithms ultimately run on computers, and are thus seen as emotionless and “unbiased.” We recoil at a judgment from a human in a way we don’t seem to with a computer.

That’s why we use algorithms with organ donations, or to assign residencies to aspiring medical doctors. There is an objective quality about the computer that is lacking in a human being, even though they are both ultimately political. It is just that the human seems political at the time the decision is made, while the algorithm was political when it was designed and implemented.

The algorithms we are using, though, are changing, and not for the better of our political system.


In the past, algorithms invoked well-defined and deterministic procedures to calculate their end result. Political scorecards are deterministic: they are literally the binary values of a couple of votes translated into some sort of endorsement or letter grade. That is too simplistic for our politics, but it does have the benefit of being simple and transparent.

In matching doctors to medical residencies, a version of the Gale-Shapley algorithm is used to produce a stable allocation that respects the preferences of both the doctors and the hospitals in the final result. (To be fair, the algorithm at that scale is not precisely deterministic, but decently close.) An important result in economics is that this algorithm incentivizes applicants to tell the truth in their preferences, preventing the procedure from being gamed.
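For readers who want to see how little machinery is involved, here is a minimal sketch of applicant-proposing Gale-Shapley (deferred acceptance) in Python. It assumes each hospital has exactly one slot. The real residency match has to handle multiple positions per hospital and other complications that are not modeled here. The function name and the applicant and hospital names are hypothetical.

```python
from typing import Dict, List


def deferred_acceptance(
    applicant_prefs: Dict[str, List[str]],
    hospital_prefs: Dict[str, List[str]],
) -> Dict[str, str]:
    """Applicant-proposing Gale-Shapley with one slot per hospital.

    Returns a mapping of applicant -> hospital. Applicants who exhaust
    their list without being held by a hospital are left out.
    """
    # rank[h][a] is applicant a's position on hospital h's list (lower is better).
    rank = {
        h: {a: i for i, a in enumerate(prefs)}
        for h, prefs in hospital_prefs.items()
    }
    next_choice = {a: 0 for a in applicant_prefs}  # next hospital index to try
    held: Dict[str, str] = {}  # hospital -> applicant it tentatively holds
    free = list(applicant_prefs)

    while free:
        a = free.pop()
        prefs = applicant_prefs[a]
        if next_choice[a] >= len(prefs):
            continue  # list exhausted; applicant stays unmatched
        h = prefs[next_choice[a]]
        next_choice[a] += 1
        h_rank = rank.get(h, {})
        if a not in h_rank:
            free.append(a)  # hospital did not rank this applicant
        elif h not in held:
            held[h] = a  # slot was empty; hold the applicant
        elif h_rank[a] < h_rank[held[h]]:
            free.append(held[h])  # hospital trades up; old applicant is free again
            held[h] = a
        else:
            free.append(a)  # rejected; applicant will try the next hospital

    return {a: h for h, a in held.items()}


applicants = {"ana": ["city", "county"], "ben": ["city", "county"]}
hospitals = {"city": ["ben", "ana"], "county": ["ana", "ben"]}
match = deferred_acceptance(applicants, hospitals)
```

In this toy example both applicants want `city` first, `city` prefers `ben`, and so `ana` ends up at `county`. Every step is a rule a person could follow by hand, which is what makes a procedure like this readable as policy.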

These deterministic algorithms seem appropriate in public life, since they effectively act as implemented policies. We can almost see the code for the algorithm written in English, and indeed, the organ allocation algorithm is written precisely that way. There is nothing technical, complicated, or even surprising about the outcomes here. We could have humans assign residencies using the same procedure after all, but it simply is more efficient with a computer.

The algorithms we are using today are evolving. Machine learning allows algorithms to improve their outcomes over time, by taking advantage of new data to tune parameters. Rather than writing a policy that is implemented in code, algorithm designers instead develop a system for constantly evaluating and updating a set of beliefs about the decisions it should make.

This evolution has started to take place in credit scores, for instance. The traditional credit score designed by FICO is essentially deterministic, based on figures such as the number of open accounts, their age, and the debt-to-credit ratio. Today, new startups like Affirm and others are trying to use machine learning and big data to predict creditworthiness in more nuanced ways.

One example of this kind of nuance is that people who properly capitalize their sentences are lower risk than those who do not. Now, credit is being allocated based off of grammar rules rather than some other metric, because the algorithm has learned from data that this is a meaningful distinction.

Is that fair? Does that meet our tests for equality that are required in credit markets? If we learned that capitalization was correlated with a protected class, such as race or gender, would we still say that this is a good metric, and ultimately, a good algorithm to use to assign risk and allocate capital? Unfortunately, we will never have that debate so long as the algorithm itself is calling the shots.

There is a belief that moving such decisions away from humans and into the machine-learning black box is the solution to many of our political problems. That is precisely wrong. We aren’t avoiding the politics of our decisions, but rather giving up human agency itself to allow the computer to make them for us. This is the fundamental difference between first- and second-generation algorithms.

Developing policies in our society is the forcing function to ensure that we come together, debate, and ultimately work toward a usable consensus on how we want our government to function. The more we give up on those sorts of arenas for debate, the more we will find that our society simply cannot work together.

Algocracy?

Algorithms will not save us. They cannot make the decisions we have to make any easier, and in fact, can massively increase the level of polarization that takes place in our country. Using an algorithm in immigration to predict economic performance may sound like a way to avoid a hard debate about immigration, but that is precisely why it is so dangerous. Who exactly do we want to allow into our country? Computer code can’t make us content.

That is the strength of the model at UNOS. Since it is based on first-generation algorithms, its policies are developed through committees with a variety of stakeholders involved, and only the final agreement is implemented in code. I personally disagree with some of those decisions, but ultimately, the algorithm is designed by humans to be a compromise we can all live with.

I have previously argued that algorithms would replace labor unions as the key terrain for labor rights this century. I still believe that when the algorithms themselves are part of the political debate, we can increase the efficiency of government while also improving the lives of citizens.

Ultimately, we live with each other in a human society. We should never give up our seat at the table in order to allow the algorithms to just do their work. The data we allow them to use and the models we allow them to calculate should all be areas where humans have a voice. Our politicians need to see the algorithm as where politics is increasingly centered, and engage with it accordingly.