Editor’s note: Jarno M. Koponen is a designer, humanist and co-founder of media discovery startup Random. His passion is to explore and create audacious human-centered digital experiences.

A lot has been written about how algorithms manipulate this and that on today's Internet. However, there haven't been many concrete proposals for creating more human-centered algorithmic solutions. For example, do we need algorithms that are on our side?

The lost algorithmic me

Digital products are moving from our pockets to our skin and finally inside of us. Today algorithms affect what we see online; tomorrow they’ll modify our physical reality at home, in a car or in an urban environment.

Through personalization we become parts of algorithmic systems that we don't control. Indeed, a great paradox lies at the very core of personalization. Contrary to what the concept suggests, we can't actually personally control personalization. We can't control our algorithmic selves. There are several reasons for this.

Personalization algorithms are not accessible to individuals. The personalization process isn't transparent or comprehensible. You can't see what affects what, how the algorithms work or how your data is being used. Your interactions and your algorithmic self turn into inaccessible data.

Personalization algorithms are used to affect and guide your behavior. Personalization happens because someone else would like to know what you think and do. Because someone else would like to know better than you what you might need now or want next.

Personalization algorithms are neither neutral nor objective. Personalization must first serve its creator's interests. Your personal interest comes second. There's usually an interest gap between you and the third party that paid for the algorithms to prioritize something for you. That gap can lead to conflicting interests and obtrusive personalization.

Personalization algorithms don’t capture or understand you as a complex individual. Your algorithmic self is scattered. Different digital environments serve different interests (e.g. Amazon versus OkCupid) and thus capture different areas of preferences. Algorithms generalize and simplify. They continuously filter out things that are considered to be irrelevant and useless. In many cases, algorithms use other people’s data to fill in missing bits and pieces. Your algorithmic self is a fragmented digital Frankenstein’s monster existing in multiple places simultaneously.

Today our algorithmic selves are beyond our control. Our agency in digital environments is diminishing. That leaves us very vulnerable. Could this be changed?

Algorithmic angels

Is it really helpful to know exactly how personalization algorithms work? Or wouldn’t it be more important and powerful to affect them directly?

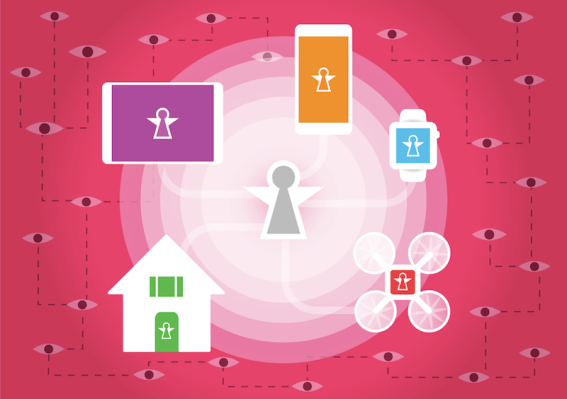

Maybe it would be more practical to have algorithms that are on our side and under our control: personal algorithms, or algorithmic angels if you will (for lack of a better term). They'd be a combination of aid, guardian and assistant, helping us out in various digital and physical environments.

Not clumsy Clippies, clueless Siris, weird Watsons or malevolent HALs but something different. Something more. Something that exists with us by learning from us. A counter-force to balance our algorithmic existence. Here are some examples of how an algorithmic angel could serve you:

Your intelligent digital guardian protects you from algorithmic manipulation that restricts your personal freedom. An algorithmic guide helps you by pointing out or negating the effects of obtrusive personalization. It reveals and challenges the algorithms that are serving someone else’s interests too aggressively.

Algorithmic aid exposes you to alternative choices and diverse worldviews. We’re cognitively and socially biased beings, vulnerable to algorithmic manipulation. Your angel makes sure that you’re not stuck on repeating behavioral loops, filter bubbles or virtual echo-chambers. It creates an adaptive information interface that is fresh and well-aged, relevant and serendipitous.

Algorithmic guardians shield you from intrusive surveillance. Your angel makes you either recognizable or anonymous, whichever you choose. The guardian alters your appearance according to your wishes so that you can use different services with different sets of personal preferences and identities. You're able to choose your own appearance and identity.

Algorithmic angels give you control over your personal data and improve your online security. Your guardian ensures that you control your own data flows. It helps you decide who can access your digital trails. Your scattered and fragmented algorithmic self is under your control when needed. Your aid keeps your personal data safe and in your own hands by making sure your backups and passwords are secure. You decide what is remembered and what is forgotten.

Additionally, algorithmic angels could ensure that different environments and devices stay under your control. Your future home works the way you want and doesn't turn into another hyper-contextual marketing platform. Angels let you connect or disconnect your various digital devices and wearables. Could such a guardian also help you keep your digital life in check so that you can find a balance between physical and digital presence?

And you can turn your algorithmic angel off to see how the world around you would change.

Our digital angel doesn’t need to be intelligent in the same way that we humans are intelligent. It would need to be smart in relation to the environments it inhabits — to other algorithms it would encounter. It would be a different kind of intelligence. We do the human thinking, evaluation and choosing. And it would do its machine thinking.

The more valuable your algorithmic self becomes and the more dependent you are on various algorithmic systems, the more important it is to have control over your digitized self. One could say that, in order to remain an autonomous individual, you would have a right to such an algorithmic angel. Without such a guardian you'll be prey to various personalization algorithms and technologies that are beyond your direct control, eating away at your personal agency.

Who would build such an angel for us?