At a time when X (formerly Twitter) and Reddit have sequestered API access behind steep paywalls, Discord is taking the opposite approach. A few weeks ago, Discord enabled U.S. developers to sell their apps to Discord users through a centralized hub, and today that feature expands to developers in the U.K. and Europe.

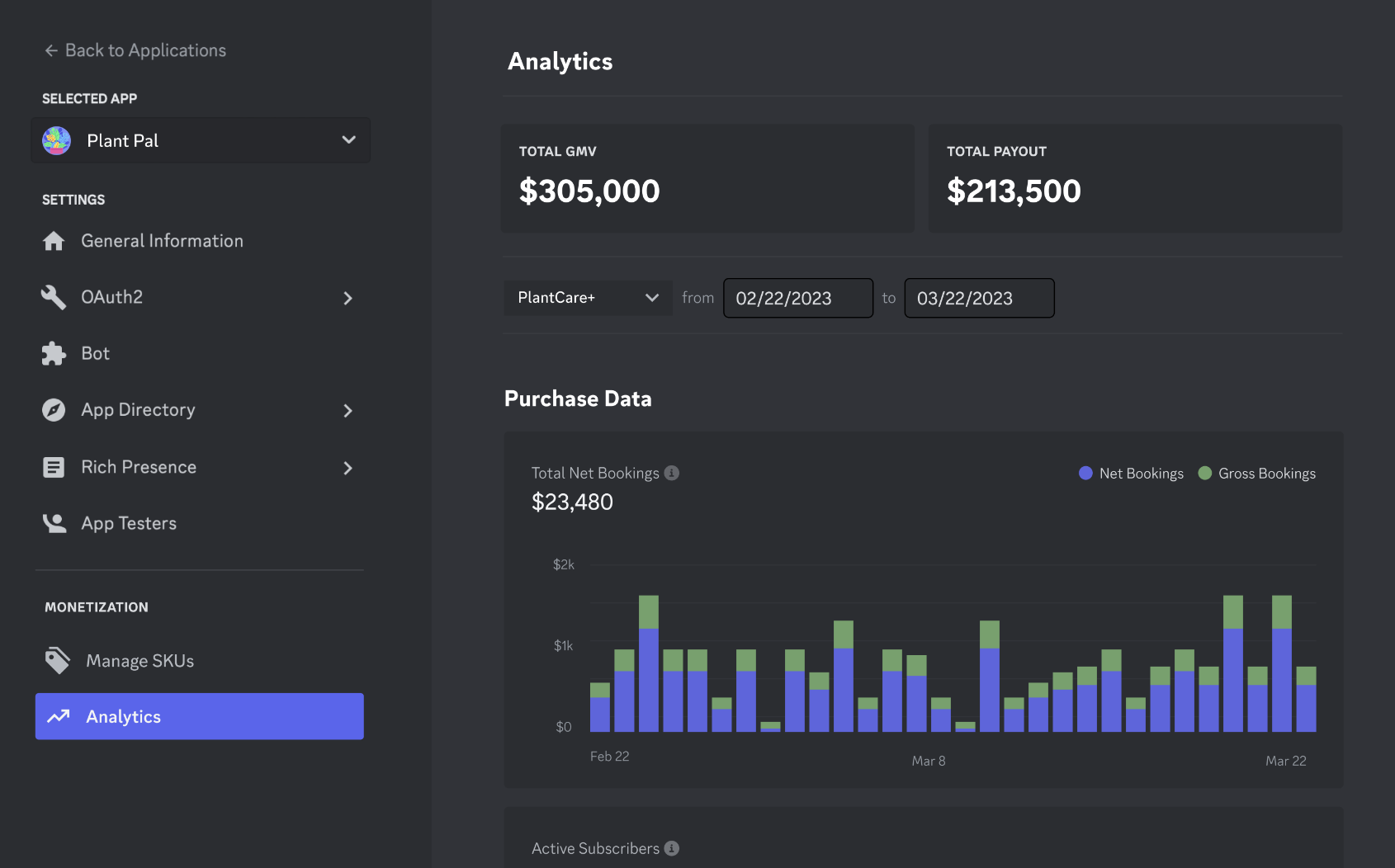

Developers who make apps on Discord, which range from mini-games to generative AI tools to moderation bots, earn a 70% cut of sales, with the other 30% going toward Discord platform fees.

“We’ve had an open API since the beginning of our platform back in 2015, and we’ve known the benefits of that since day one,” said Mason Sciotti, a senior product manager in Discord’s platform ecosystem organization.

When Sciotti started at Discord, his team was just himself and two engineers. Now, more than 100 people at Discord are building products for external developers.

“Sometimes, it’s still hard to imagine being more than just the three that we started with,” he told TechCrunch.

According to Discord, the platform hosts over 750,000 third-party apps, which are used by more than 45 million people each month. Right now, eligible developers can monetize by selling app subscriptions, but in the future, the platform plans to offer tipping and one-time purchases.


To sell access to an app, developers must meet certain requirements showing that they're in good standing on Discord. To proactively monitor the open API for bad actors, Discord also has a developer compliance team, which accounts for some of the 100+ people who have joined Sciotti's organization since he started in 2017.

“We’ve got a number of automated checks that happen along the way at all the different stages, but the big ones are our verification processes, where an app can only grow so big before Discord takes a look at it and makes sure that everything is on the up and up,” he told TechCrunch. “Especially when it comes to data privacy, we want to make sure that users understand that by using [an app], this is what you’re consenting to.”

On the safety side, Discord also announced that it’s launching Teen Safety Assist, an initiative to keep teens on the platform safe.

The first two features from this initiative are safety alerts and sensitive content filters. If a teen receives a direct message from someone for the first time, Discord may send the teen a safety alert asking them to double-check whether they want to reply. They'll also be directed toward safety tools that show how to block a user or update their privacy settings if needed.

If Discord thinks that certain media in direct messages may be sensitive, it will automatically blur the content, so the user has to click the image to view it. This filter, which is opt-in for adult users, is similar to a new measure that Apple added to iOS 17.

Discord is also launching a warning system, which gives users more transparency into the standing of their account.

“We’ve found that if someone knows exactly how they broke the rules, it gives them a chance to reflect and change their behavior, helping keep Discord safer,” the company said in a blog post.