For researchers, reading scientific papers can be immensely time-consuming. According to one survey, scientists spend seven hours each week searching for information. Another survey suggests that systematic reviews of literature — scholarly syntheses of the evidence on a particular topic — take an average of 41 weeks for a five-person research team.

But it doesn’t have to be this way.

At least, that’s the message from Andreas Stuhlmüller, co-founder of Elicit, an AI startup that has designed a “research assistant” for scientists and R&D labs. With backers including Fifty Years, Basis Set, Illusion and angel investors Jeff Dean (Google’s chief scientist) and Thomas Ebeling (the former Novartis CEO), Elicit is building an AI-powered tool to abstract away the more tedious aspects of literature review.

“Elicit is a research assistant that automates scientific research with language models,” Stuhlmüller told TechCrunch in an email interview. “Specifically, it automates literature review by finding relevant papers, extracting key information about the studies and organizing the information into concepts.”

Elicit is a for-profit venture spun out from Ought, a nonprofit research foundation launched in 2017 by Stuhlmüller, a former researcher at Stanford’s computation and cognition lab. Elicit’s other co-founder, Jungwon Byun, joined the startup in 2019 after leading growth at online lending firm Upstart.

Using a variety of first- and third-party models, Elicit searches for and discovers concepts across papers. Users can ask questions like “What are all of the effects of creatine?” or “What are all of the datasets that have been used to study logical reasoning?” and get a list of answers drawn from the academic literature.

“By automating the systematic review process, we can immediately deliver cost and time savings to the academic and industry research organizations producing these reviews,” Stuhlmüller said. “By lowering the cost enough, we unlock new use cases that were previously cost-prohibitive, such as just-in-time updates when the state of knowledge in a field changes.”

But wait, you might say: don’t language models have a tendency to make things up? Indeed they do. Meta’s attempt at a language model to streamline scientific research, Galactica, was taken down only three days after launch, once it was discovered that the model frequently referred to fake research papers that sounded convincing but didn’t actually exist.

Stuhlmüller claims, however, that Elicit has taken steps to ensure its AI is more reliable than many of the purpose-built platforms out there.

For one, Elicit breaks the complex tasks its models perform into “human-understandable” pieces. This lets Elicit know, for instance, how often different models make things up when they generate summaries, and in turn helps users identify which answers to check, and when.

Elicit also attempts to compute a scientific paper’s overall “trustworthiness,” taking into account factors like whether the trials conducted in the research were controlled or randomized, the source of the funding, potential conflicts of interest and the size of the trials.

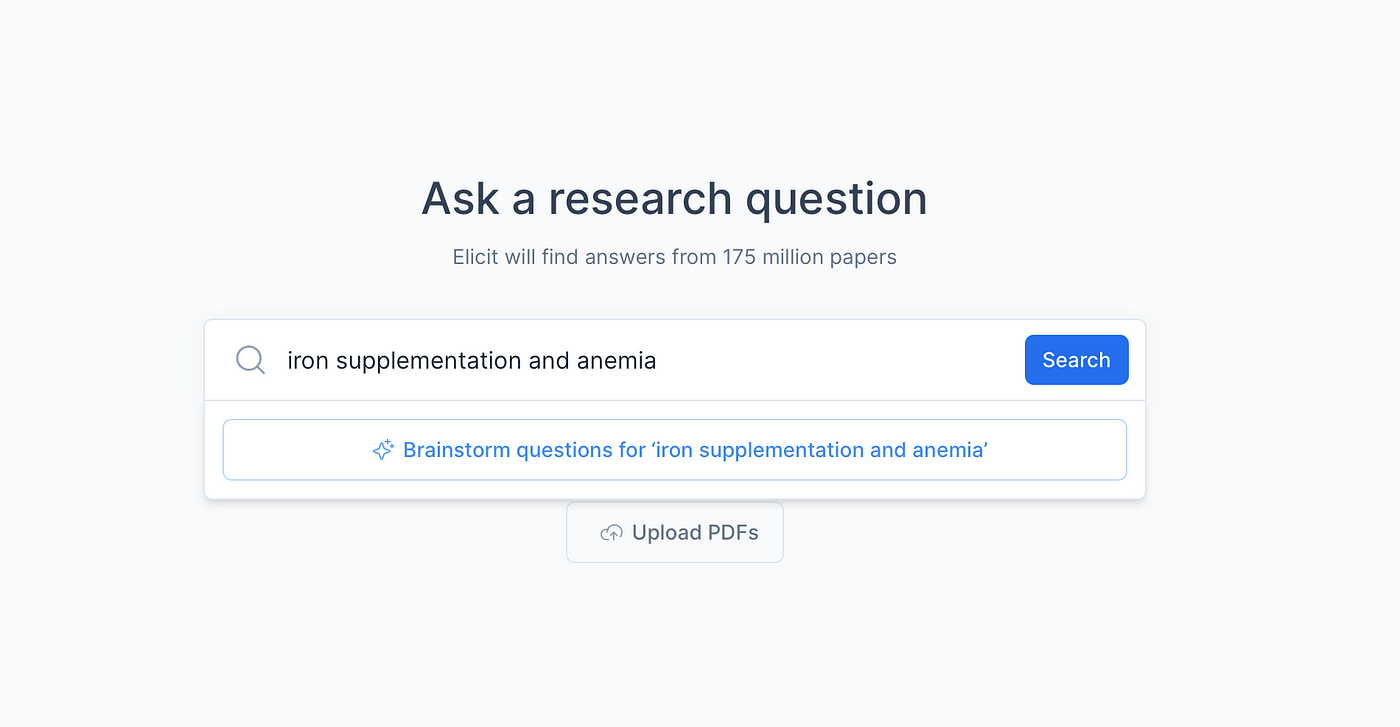

Elicit’s search tool for AI literature. Image Credits: Elicit

“We don’t do chat interfaces,” Stuhlmüller said. “Elicit users apply language models as batch jobs … We never just generate answers using models, we always link the answers back to the scientific literature to reduce hallucination and make it easy to check models’ work.”

I’m not necessarily convinced that Elicit has solved some of the major issues plaguing language models today, given how intractable those issues have proven. But its efforts certainly appear to have garnered interest, and perhaps even trust, from the research community.

Stuhlmüller claims that more than 200,000 people use Elicit every month, a threefold year-over-year increase (measured from January 2023), from organizations including The World Bank, Genentech and Stanford. “Our users are asking to pay for more powerful features and to run Elicit at larger scales,” he added.

Presumably, it’s this momentum that led to Elicit’s first funding round — a $9 million tranche led by Fifty Years. The plan is to put the bulk of the new cash toward further developing Elicit’s product as well as expanding Elicit’s team of product managers and software engineers.

But what’s Elicit’s plan to make money? Good question, and one I asked Stuhlmüller point-blank. He pointed to Elicit’s paid tier, launched this week, which lets users search papers, extract data and summarize concepts at a larger scale than the free tier supports. The longer-term strategy is to build Elicit into a general tool for research and reasoning, one that whole enterprises would shell out for.

One possible roadblock to Elicit’s commercial success is open source efforts like the Allen Institute for AI’s Open Language Model, which aims to develop a free-to-use large language model optimized for science. But Stuhlmüller says he sees open source as more complementary than threatening.

“The primary competition right now is human labor — research assistants that are hired to painstakingly extract data from papers,” Stuhlmüller said. “Scientific research is a huge market and research workflow tooling has no major incumbents. This is where we’ll see entirely new AI-first workflows emerge.”