While a medical student at UC San Francisco, Dereck Paul grew concerned that innovation in medical software was lagging behind other sectors, like finance and aerospace. He came to believe that patients are best served when doctors are equipped with software that reflects the cutting edge of tech, and dreamed of starting a company that’d prioritize the needs of patients and doctors over hospital administrators or insurance companies.

So Paul teamed up with Graham Ramsey, his friend and an engineer at Modern Fertility, the women’s health tech company, to launch Glass Health in 2021. Glass Health provides a notebook physicians can use to store, organize and share their approaches for diagnosing and treating conditions throughout their careers; Ramsey describes it as a “personal knowledge management system” for learning and practicing medicine.

“During the pandemic, Ramsey and I witnessed the overwhelming burdens on our healthcare system and the worsening crisis of healthcare provider burnout,” Paul said. “I experienced provider burnout firsthand as a medical student on hospital rotations and later as an internal medicine resident physician at Brigham and Women’s Hospital. Our empathy for frontline providers catalyzed us to create a company committed to fully leveraging technology to improve the practice of medicine.”

Glass Health gained early traction on social media, particularly X (formerly Twitter), among physicians, nurses and physicians-in-training, and this translated to the company’s first funding tranche, a $1.5 million pre-seed round led by Breyer Capital in 2022. Glass Health was then accepted into Y Combinator’s Winter 2023 batch. But early this year, Paul and Ramsey decided to pivot the company to generative AI — embracing the growing trend.

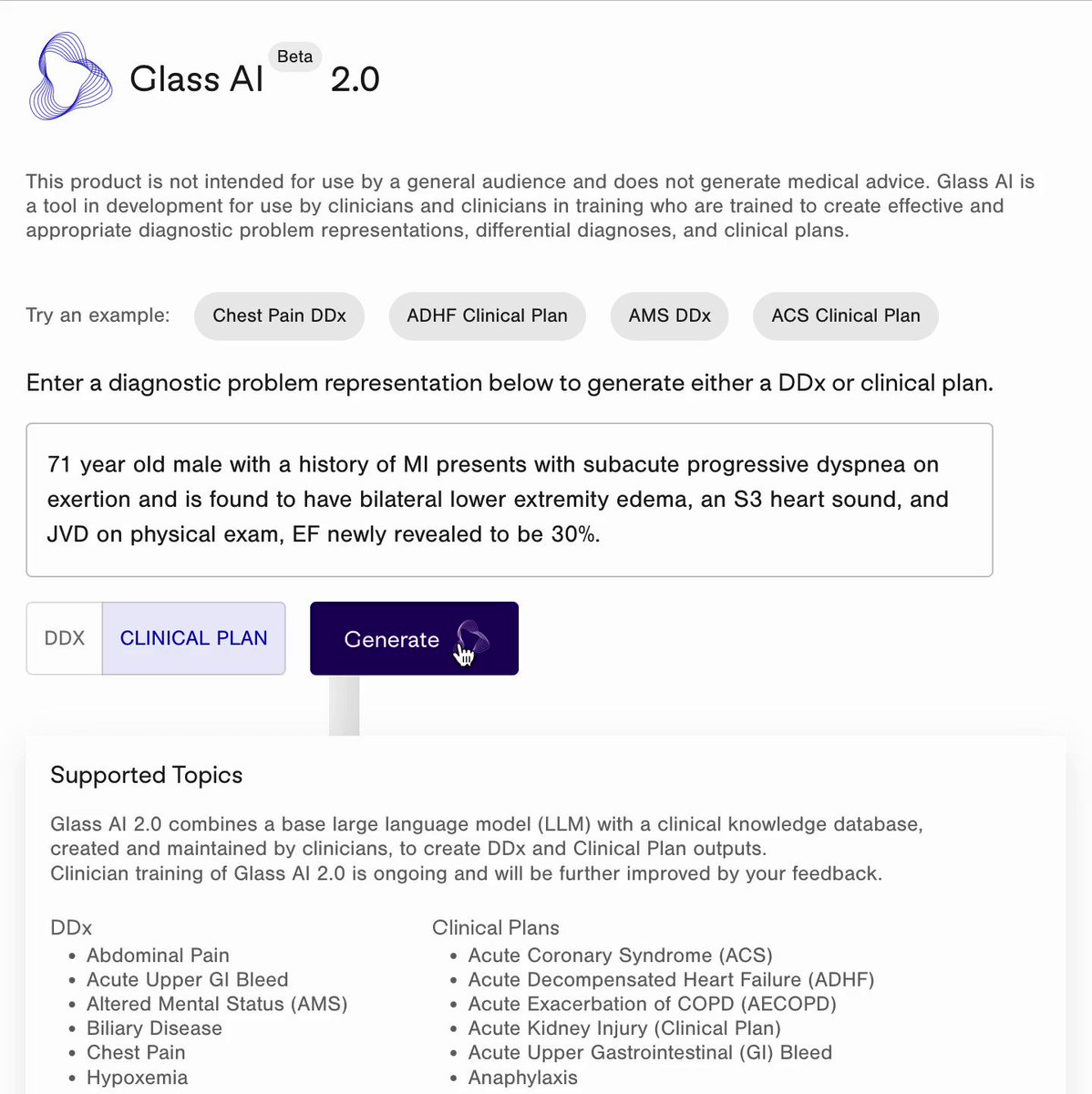

Glass Health now offers an AI tool powered by a large language model (LLM), tech similar to that behind OpenAI’s ChatGPT, to generate diagnoses and “evidence-based” treatment options to consider for patients. Physicians can type in descriptions like “71-year-old male with a history of myocardial infarction presents with subacute progressive dyspnea on exertion” or “65-year-old woman with a history of diabetes and hyperlipidemia presents with acute-onset chest pain and diaphoresis,” and Glass Health’s AI will provide a likely diagnosis and clinical plan.

“Clinicians enter a patient summary, also known as a problem representation, that describes the relevant demographics, past medical history, signs and symptoms and descriptions of laboratory and radiology findings related to a patient’s presentation — the information they might use to present a patient to another clinician,” Paul explained. “Glass analyzes the patient summary and recommends five to 10 diagnoses that the clinician may want to consider and further investigate.”

Glass Health can also draft a case assessment paragraph for clinicians to review, which includes explanations about possibly relevant diagnostic studies. These explanations can be edited and used for clinical notes and records down the line, or shared with the wider Glass Health community.

In theory, Glass Health’s tool seems incredibly useful. But even cutting-edge LLMs have proven to be exceptionally bad at giving health advice.

Babylon Health, an AI startup backed by the U.K.’s National Health Service, has found itself under repeated scrutiny for making claims that its disease-diagnosing tech can perform better than doctors.

In an ill-fated experiment, the National Eating Disorders Association (NEDA) launched a chatbot in partnership with AI startup Cass to provide support to people suffering from eating disorders. As a result of a generative AI systems upgrade, Cass began to parrot harmful “diet culture” suggestions like calorie restriction, leading NEDA to shut down the tool.

Elsewhere, Health News recently recruited a medical professional to evaluate the soundness of ChatGPT’s health advice in a wide range of subjects. The expert found that the chatbot missed recent studies, made misleading statements (like that “wine might prevent cancer” and that prostate cancer screenings should be based on “personal values”) and plagiarized content from several health news sources.

In a more charitable piece in Stat News, a research assistant and two Harvard professors found that ChatGPT listed the correct diagnosis (within the top three options) in 39 of 45 different vignettes. But the researchers caveated that the vignettes were the kind typically used to test medical students and might not reflect how people — particularly those for whom English is a second language — describe their symptoms in the real world.

It hasn’t been studied much, but I wonder if bias, too, could play a role in causing an LLM to misdiagnose a patient. Because medical LLMs like Glass’ are often trained on health records, which only show what doctors and nurses notice (and only in patients who can afford to see them), they could have dangerous blind spots that aren’t immediately apparent. Moreover, doctors may unwittingly encode their own racial, gender or socioeconomic biases in writing the records LLMs train on, leading the models to prioritize certain demographics over others.

Paul seemed well aware of the scrutiny surrounding generative AI in medicine — and asserted that Glass Health’s AI is superior to many of the solutions already on the market.

Image Credits: Glass Health

“Glass connects LLMs with clinical guidelines that are created and peer-reviewed by our academic physician team,” he said. “Our physician team members are from major academic medical centers around the country and work part-time for Glass Health, like they would for a medical journal, creating guidelines for our AI and fine-tuning our AI’s ability to follow them … We ask our clinician users to supervise all of our LLM application’s outputs closely, treating it like an assistant that can offer helpful recommendations and options that they may consider but never directs or replaces their clinical judgment.”

In the course of our interview, Paul repeatedly emphasized that Glass Health’s AI — while focused on providing potential diagnoses — shouldn’t be interpreted as definitive or prescriptive in its answers. Here’s my guess as to the unspoken reason: If it were, Glass Health would be subject to greater legal scrutiny — and potentially even regulation by the FDA.

Paul isn’t the only one hedging. Google, which is testing a medical-focused language model called Med-PaLM 2, has been careful in its marketing materials to avoid suggesting that the model can supplant the experience of health professionals in a clinical setting. So has Hippocratic, a startup building an LLM specially tuned for healthcare applications (but not diagnosing).

Still, Paul argues that Glass Health’s approach gives it “fine control” over its AI’s outputs and guides its AI to “reflect state-of-the-art medical knowledge and guidelines.” Part of that approach involves collecting user data to improve Glass’ underlying LLMs — a move that might not sit well with every patient.

Paul says that users can request the deletion of all of their stored data at any time.

“Our LLM application retrieves physician-validated clinical guidelines as AI context at the time it generates outputs,” he said. “Glass is different from LLM applications like ChatGPT that rely solely on their pre-training to produce outputs, and can more easily produce medical information that is inaccurate or out of date … We have tight control over the information and guidelines used by our AI to create outputs and the ability to apply a rigorous editorial process to our guidelines that aims to address bias and align our recommendations with the goal of achieving health equity.”

We’ll see if that turns out to be the case.

In the meantime, Glass Health isn’t struggling to find early adopters. To date, the platform has signed up more than 59,000 users and already has a “direct-to-clinician” offering for a monthly subscription. This year, Glass will begin to pilot an electronic health record-integrated enterprise offering with HIPAA compliance; 15 unnamed health systems and companies are on the waitlist, Paul claims.

“Institutions like hospitals and health systems will be able to provide an instance of Glass to their doctors to empower their clinicians with AI-powered clinical decision support in the form of recommendations about diagnoses, diagnostic studies and treatment steps they can consider,” Paul said. “We’re also able to customize Glass AI outputs to a health system’s specific clinical guidelines or care delivery practices.”

With a total of $6.5 million in funding, Glass plans to spend on having physicians create, review and update the clinical guidelines used by the platform, as well as on AI fine-tuning and general R&D. Paul claims that Glass has four years of runway.