Since the release of ChatGPT at the end of last year, we’ve seen companies developing generative AI tooling to help customers interact with their products and services in a more natural way. Yet in many cases, these vendors have no idea how well the underlying large language models are performing, or how good the answers are.

Context.ai launched earlier this year to help companies better understand how users are interacting with their LLMs. Today, the company announced a $3.5 million seed investment to fully develop the idea.

CEO Henry Scott-Green and his co-founder, CTO Alex Gamble, spent several years working at Google: Scott-Green on product and Gamble as a software engineer. Together, they recognized the need for a service that measures how well these models are behaving, and saw that there was very little tooling available to help.

“We’ve spoken to hundreds of developers who are building LLMs, and they have a really consistent set of problems. Those problems are that they don’t understand how people are using their model, and they don’t understand how their model is performing. The phrase that I always hear is that ‘my model is a black box,’” Scott-Green told TechCrunch.

In many ways, it’s not unlike product analytics tools such as Amplitude or Mixpanel, which measure how users interact with a product interface, such as where they click or how long they stay on a page. In Context’s case, however, it’s about digging into the data generated by the LLM and figuring out whether it is producing truly useful content that answers customers’ questions. The ultimate goal is building a more effective model.

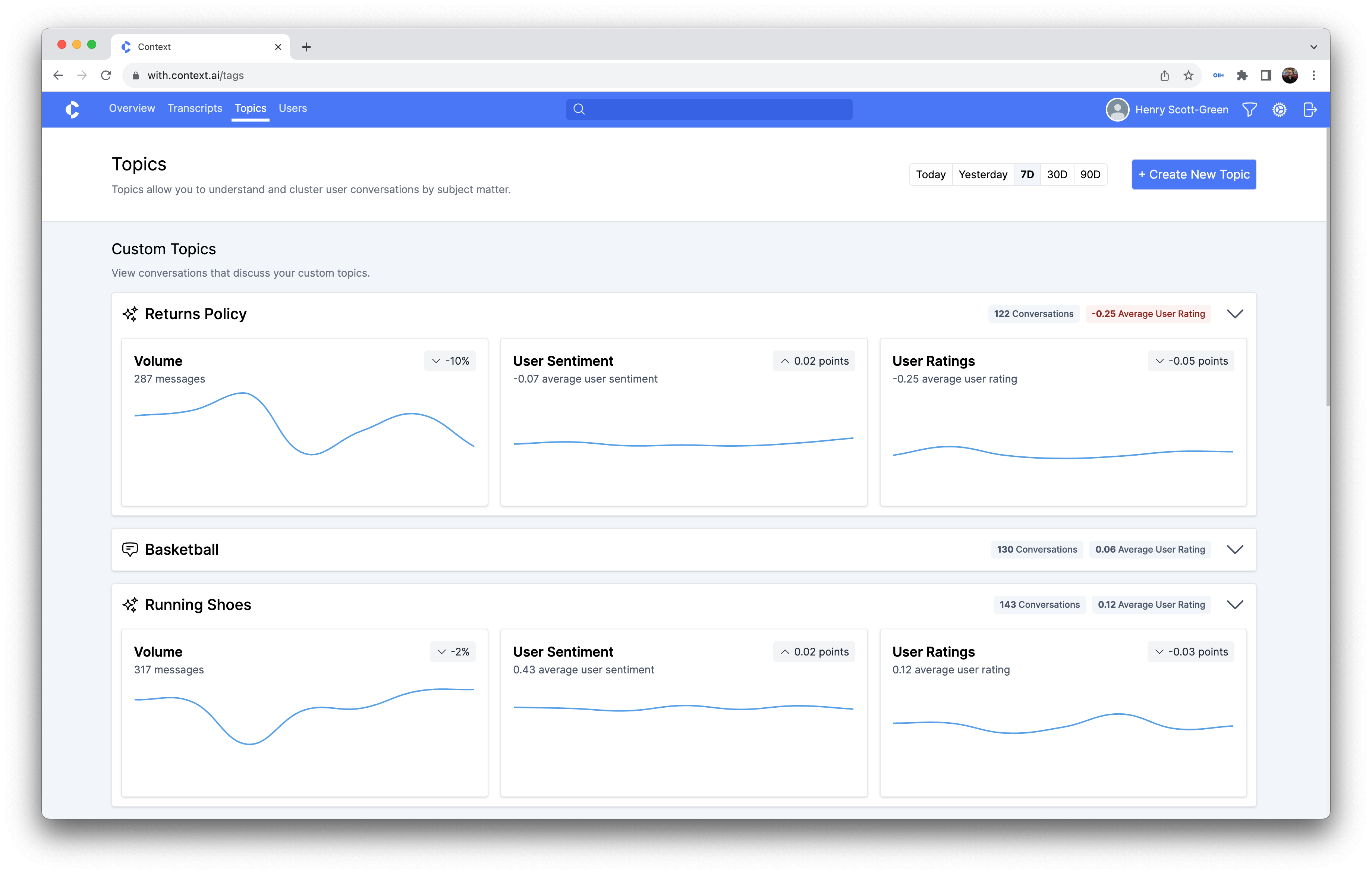

The way it works is customers share chat transcripts with Context via an API. It then analyzes the information using natural language processing (NLP). The software groups and tags conversations based on topic, and then analyzes each conversation to determine from the signals available if the customer was satisfied with the response.

After it analyzes the text from chat transcripts, Context.ai delivers an analysis like this. Image Credits: Context.ai
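To make that workflow more concrete, here is a minimal Python sketch of the general idea. It is not Context.ai’s API or method: the transcript format, the `TOPIC_KEYWORDS` table, the signal phrases and the function names are all hypothetical, and simple keyword matching stands in for the NLP models a production system would use.

```python
import re
from collections import defaultdict

# Hypothetical topic keywords; a real system would use an NLP model, not a lookup table.
TOPIC_KEYWORDS = {
    "billing": {"invoice", "refund", "charge", "payment"},
    "login": {"password", "login", "2fa", "locked"},
}

# Hypothetical phrases treated as dissatisfaction / satisfaction signals.
NEGATIVE_SIGNALS = ("not helpful", "wrong", "talk to a human")
POSITIVE_SIGNALS = ("thanks", "that worked", "perfect")


def tag_topics(transcript):
    """Return the topics whose keywords appear in any user message ("other" if none)."""
    words = set()
    for turn in transcript:
        if turn["role"] == "user":
            words.update(re.findall(r"[a-z0-9']+", turn["text"].lower()))
    topics = {topic for topic, kws in TOPIC_KEYWORDS.items() if words & kws}
    return topics or {"other"}


def estimate_satisfaction(transcript):
    """Crude heuristic: inspect the user's final message for signal phrases."""
    user_turns = [t["text"].lower() for t in transcript if t["role"] == "user"]
    if not user_turns:
        return "unknown"
    last = user_turns[-1]
    if any(p in last for p in NEGATIVE_SIGNALS):
        return "unsatisfied"
    if any(p in last for p in POSITIVE_SIGNALS):
        return "satisfied"
    return "unknown"


def analyze(transcripts):
    """Group conversations by topic and count satisfaction labels per topic."""
    report = defaultdict(lambda: defaultdict(int))
    for transcript in transcripts:
        label = estimate_satisfaction(transcript)
        for topic in tag_topics(transcript):
            report[topic][label] += 1
    return {topic: dict(counts) for topic, counts in report.items()}


transcripts = [
    [{"role": "user", "text": "How do I get a refund?"},
     {"role": "assistant", "text": "Go to Billing > Refunds."},
     {"role": "user", "text": "Thanks, that worked!"}],
    [{"role": "user", "text": "I'm locked out of my login"},
     {"role": "assistant", "text": "Try resetting your password."},
     {"role": "user", "text": "That's wrong, let me talk to a human"}],
]
print(analyze(transcripts))
# {'billing': {'satisfied': 1}, 'login': {'unsatisfied': 1}}
```

Keyword matching like this is brittle; the sketch is only meant to show the shape of the pipeline described above: ingest transcripts, tag topics, estimate satisfaction, then aggregate the results per topic.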

“We believe there is a big shift happening [with the rise of LLMs], and there’s going to be a huge number of these chat experiences built over the next few years. And in that new world, where there is a huge amount of textual interface that users are engaging with via text, rather than graphical user interfaces, there is a need for a different set of tools,” he said.

They began by building an initial prototype, shared it with early customers and design partners, and have been iterating to improve and refine the product ever since. Scott-Green says it is an ongoing process, but the company has been generating a lot of interest and already has paying customers.

For those concerned about security and privacy, it’s worth noting that Context strips out personally identifiable information (PII) at ingestion. It doesn’t use the content for model building or marketing purposes, and it holds content for no more than 180 days, after which it is deleted, according to Scott-Green.

The company is small right now, with six employees, but Scott-Green expects the organization to grow, and he believes it’s never too early to be thinking about building a diverse company.

“It’s obviously a challenge that the startup ecosystem has, and the tech ecosystem has in general when it comes to building representative, diverse, inclusive teams. It’s something we both believe strongly in, and I think more importantly, it’s something that we’re both acting on as well, and really making efforts to ensure that we have an inclusive representative diversity [in our employee base],” he said.

Today’s investment was co-led by GV (Google’s venture arm) and Theory Ventures.