Resemble AI, a platform that uses generative AI to clone realistic-sounding voices, today announced that it raised $8 million in a Series A round led by Javelin Venture Partners, with participation from Craft Ventures and Ubiquity Ventures.

The tranche, which brings the startup’s total raised to $12 million, will be put toward further developing Resemble’s enterprise products and doubling the size of its team to more than 40 people by the end of the year, co-founder and CEO Zohaib Ahmed says.

“Resemble’s technology is being used by some of the largest media companies in the world to create content that was previously impossible,” Ahmed told TechCrunch in an email interview.

Resemble was founded in 2019 by Ahmed and Saqib Muhammad after the two observed that voices in video games couldn’t keep up with frequent version updates to the games themselves. Ahmed previously worked at Magic Leap as a lead software engineer, following stints at BlackBerry and Hipmunk.

Resemble started small, focusing mostly on gaming use cases. But the platform grew to offer AI tech that can “transfer” voices to other languages, generate personalized messages from voice actors and create real-time conversational agents.

Resemble is but one player in the fast-growing market for generative voice AI. Papercup, Deepdub, ElevenLabs, Respeecher, Acapela and Voice.ai are among the more notable startup vendors providing AI tools to clone and generate voices, not to mention Big Tech incumbents like AWS, Azure and Google Cloud.

It’s controversial tech, though — and not without good reason.

Motherboard writes about how voice actors are increasingly being asked to sign away rights to their voices, so that clients can leverage AI to generate synthetic versions that could eventually replace them — sometimes without compensation.

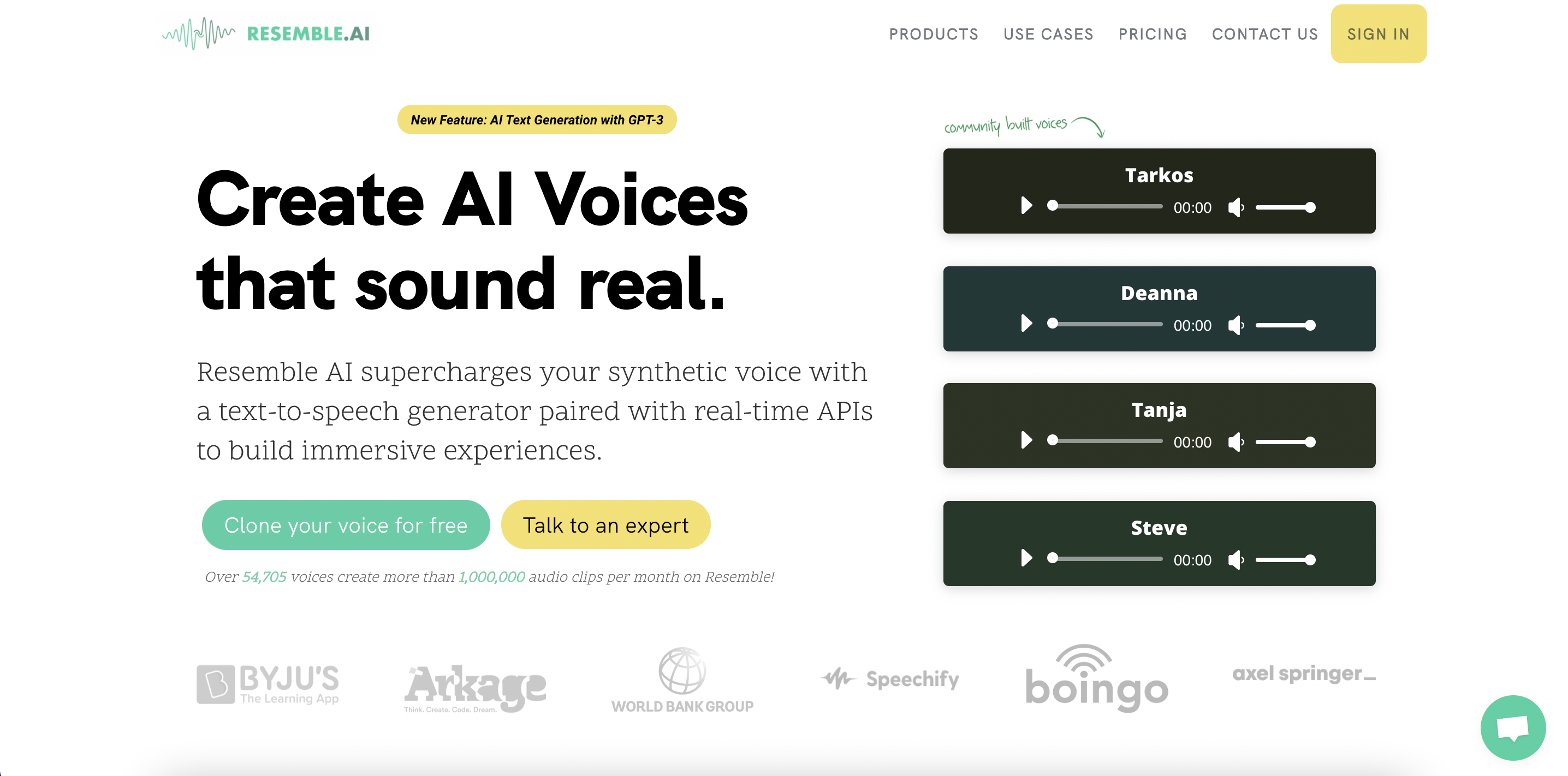

Image Credits: Resemble AI

Deepfakes are another issue.

Malicious actors are using AI to clone people’s voices, tricking victims into thinking that they’re talking to a relative or customer. And it’s not just the criminal potential that’s setting off alarm bells. In 2021, a documentarian came under fire for hiring a company to clone Anthony Bourdain’s voice posthumously — with the consent of Bourdain’s estate. The intervening years have seen voice deepfakes take over social media, mostly to harmless effect — but sometimes not.

Ahmed asserts that Resemble stands out in the area of ethics, though.

“In addition to requiring explicit user consent to clone voices, strict usage guidelines are enforced to prevent malicious use,” he said.

To this end, Resemble requires users to provide a recording of a “consent clip” in the voice they’re attempting to clone. If the voice in the clip doesn’t match the other clips, Resemble blocks the user from creating the AI voice.
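
Resemble hasn't said how it compares the consent clip with the other recordings. One common approach to this kind of check is speaker verification, in which each clip is reduced to a fixed-length "voice embedding" and the embeddings are compared by cosine similarity. The sketch below illustrates that general idea only. It assumes the embeddings have already been produced by some speaker-encoder model, and the function names and the 0.75 threshold are hypothetical, not Resemble's.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero embedding as matching nothing
    return dot / (norm_a * norm_b)

def consent_matches(consent_embedding, clip_embeddings, threshold=0.75):
    """Return True only if every training clip sounds like the consent clip.

    `threshold` is an illustrative value, not a documented setting.
    """
    if not clip_embeddings:
        return False  # nothing to compare against, so do not approve
    return all(
        cosine_similarity(consent_embedding, clip) >= threshold
        for clip in clip_embeddings
    )

# Toy 2-dimensional embeddings; real ones have hundreds of dimensions.
print(consent_matches([1.0, 0.0], [[1.0, 0.0], [0.9, 0.1]]))
print(consent_matches([1.0, 0.0], [[0.0, 1.0]]))
```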

In addition, to prevent misuse when recording, Resemble requires users to read a set of specific sentences aloud in their own voice. If they deviate from the script, Resemble flags the recording as potential misuse.
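
A script check of this kind could, in principle, be done by transcribing the recording and comparing the transcript against the expected sentence. The sketch below assumes a transcript is already available from some speech-to-text system. The function names and the 0.9 similarity cutoff are hypothetical, and Resemble's actual method isn't public.

```python
import difflib
import re

def normalize(text):
    """Lowercase the text and split it into words, dropping punctuation."""
    return re.findall(r"[a-z0-9']+", text.lower())

def deviates_from_script(expected, transcript, min_ratio=0.9):
    """Flag a recording whose transcript strays too far from the script.

    Compares word sequences with difflib.SequenceMatcher, whose ratio()
    is 1.0 for identical sequences and 0.0 for nothing in common.
    """
    matcher = difflib.SequenceMatcher(
        None, normalize(expected), normalize(transcript)
    )
    return matcher.ratio() < min_ratio

script = "The quick brown fox jumps over the lazy dog."
print(deviates_from_script(script, "the quick brown fox jumps over the lazy dog"))
print(deviates_from_script(script, "please send me your password"))
```

Comparing words rather than raw characters keeps small transcription differences in punctuation or capitalization from triggering a flag.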

“Once the voice is created, the user owns all rights to that voice,” Ahmed said. “We don’t use that voice data to train other models, nor do we resell the voice data to third-party companies … For customized solutions, we work with companies through a rigorous process to make sure that the voice they are cloning is usable by them and have the proper consents in place with voice actors.”

Resemble has also developed a product, Resemble Detect, that’s designed to validate the authenticity of audio data using an AI model trained to distinguish fakes from real audio. The model essentially “sees” the frequency bands where artifacts from editing or manipulating sound may appear, then outputs a confidence score from 0% to 100% as to the clip’s “realness.”
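
To give a rough sense of what a frequency-based view of audio looks like, the sketch below computes a magnitude spectrum for a short frame with a naive discrete Fourier transform and shows how a raw model score could be mapped onto a 0% to 100% scale. It is not Resemble Detect. The trained classifier that would sit between the two steps is omitted, and both function names are hypothetical.

```python
import cmath
import math

def magnitude_spectrum(samples):
    """Naive DFT magnitudes for bins 0..n/2 of a real-valued frame."""
    n = len(samples)
    return [
        abs(sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n)
                for t in range(n)))
        for k in range(n // 2 + 1)
    ]

def to_confidence_percent(logit):
    """Map a raw classifier score to a 0-100% value with a sigmoid."""
    logit = max(-60.0, min(60.0, logit))  # clamp to avoid overflow in exp
    return 100.0 / (1.0 + math.exp(-logit))

# A pure tone that completes 4 cycles over a 32-sample frame.
frame = [math.sin(2 * math.pi * 4 * t / 32) for t in range(32)]
spectrum = magnitude_spectrum(frame)
print(spectrum.index(max(spectrum)))  # index of the strongest frequency bin
print(to_confidence_percent(0.0))
```

A production system would use a fast Fourier transform and a learned model rather than this quadratic-time loop. The point is only that energy in unexpected frequency bins is the kind of signal such a detector could inspect.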

Detect is meant to complement Resemble’s audio watermarking tech, PerTh Watermarker, which uses an AI model to produce and insert imperceptible-to-the-human-ear audio tones that carry identifying information. (It’s worth noting that PerTh Watermarker is a bit of a platform lock-in play — it can only mark and detect Resemble’s own generated speech, and isn’t compatible with other commercial or open source voice-generating AI tools.)

Ahmed sees these tools as major contributors to Resemble’s success. The platform has more than a million users, he says, who’ve generated 35 years’ worth of audio in the last 12 months.

“With regulation of AI top of mind for government officials, Resemble is providing insights and recommendations about the responsible use of generative audio,” Ahmed said. “With Resemble, creating engaging and high-quality voice content is now easier than ever, enabling content creators to add a whole new level of authenticity to their work, and will add a new level of immersion for the audience.”