DeepMind’s health app being gobbled by parent Google is both unsurprising and deeply shocking.

First thoughts should not be allowed to gloss over what is really a gut punch.

It’s unsurprising because the AI galaxy brains at DeepMind always looked like unlikely candidates for the quotidian, margins-focused business of selling and scaling software as a service. The app in question, a clinical task management and alerts app called Streams, does not involve any AI.

The algorithm it uses was developed by the UK’s own National Health Service, a branch of which DeepMind partnered with to co-develop Streams.

In a blog post announcing the hand-off yesterday, “scaling” was the precise word the DeepMind founders chose to explain passing their baby to Google. And if you want to scale apps, Google does have the well-oiled machinery to do it.

At the same time, Google has just hired Dr. David Feinberg, from US health service organization Geisinger, to a new leadership role which CNBC reports is intended to tie together multiple, fragmented health initiatives and coordinate its moves into the $3 trillion healthcare sector.

The company’s stated mission of ‘organizing the world’s information and making it universally accessible and useful’ is now seemingly being applied to its own rather messy corporate structure — to try to capitalize on growing opportunities for selling software to clinicians.

That health tech opportunities are growing is clear.

In the UK, where Streams and DeepMind Health operate, the minister for health, Matt Hancock, a recent transplant to the portfolio from the digital brief, brought his love of apps with him, and almost immediately made technology one of his stated priorities for the NHS.

Last month he fleshed his thinking out further, publishing a policy document on the future of healthcare that sets out a vision for transforming how the NHS operates by plugging in what he called “healthtech” apps and services to support tech-enabled “preventative, predictive and personalised care”.

Which really is a clarion call to software makers to clap fresh eyes on the sector.

In the UK the legwork that DeepMind has done on the ‘apps for clinicians’ front — finding a willing NHS Trust to partner with; getting access to patient data, with the Royal Free passing over the medical records of some 1.6 million people as Streams was being developed in the autumn of 2015; inking a bunch more Streams deals with other NHS Trusts — is now being folded right back into Google.

And this is where things get shocking.

Trust demolition

Shocking because DeepMind handing the app to Google — and therefore all the patient data that sits behind it — goes against explicit reassurances made by DeepMind’s founders that there was a firewall sitting between its health experiments and its ad tech parent, Google.

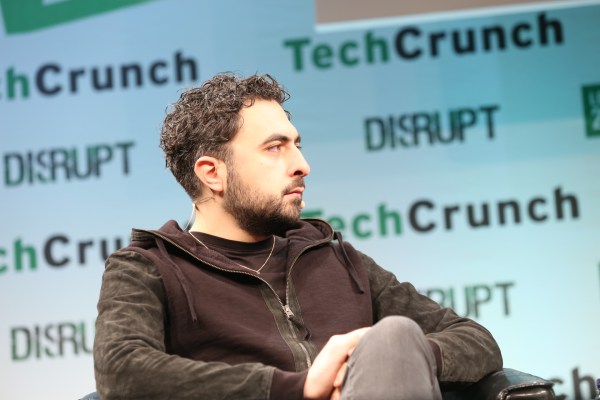

“In this work, we know that we’re held to the highest level of scrutiny,” wrote DeepMind co-founder Mustafa Suleyman in a blog post in July 2016 as controversy swirled over the scope and terms of the patient data-sharing arrangement it had inked with the Royal Free. “DeepMind operates autonomously from Google, and we’ve been clear from the outset that at no stage will patient data ever be linked or associated with Google accounts, products or services.”

As law and technology academic Julia Powles, who co-wrote a research paper on DeepMind’s health foray with New Scientist journalist Hal Hodson (who obtained and published the original, now defunct, patient data-sharing agreement), noted via Twitter: “This isn’t transparency, it’s trust demolition.”

Turns out DeepMind’s patient data firewall was nothing more than a verbal assurance — and two years later those words have been steamrollered by corporate reconfiguration, as Google and Alphabet elbow DeepMind’s team aside and prepare to latch onto a burgeoning new market opportunity.

Any fresh assurances that people’s sensitive medical records will never be used for ad targeting will now have to come direct from Google. And they’ll just be words too. So put that in your patient trust pipe and smoke it.

The Streams app data is also — to be clear — personal data that the individuals concerned never consented to being passed to DeepMind. Let alone to Google.

Patients weren’t asked for their consent nor even consulted by the Royal Free when it quietly inked a partnership with DeepMind three years ago. It was only months later that the initiative was even made public, although the full scope and terms only emerged thanks to investigative journalism.

Transparency was lacking from the start.

This is why, after a lengthy investigation, the UK’s data protection watchdog ruled last year that the Trust had breached UK law — saying people would not have reasonably expected their information to be used in such a way.

Nor should they. If you ended up in hospital with a broken leg you’d expect the hospital to have your data. But wouldn’t you be rather shocked to learn — shortly afterwards or indeed years and years later — that your medical records are now sitting on a Google server because Alphabet’s corporate leaders want to scale a fat healthtech profit?

In the same 2016 blog post, entitled “DeepMind Health: our commitment to the NHS”, Suleyman made a point of noting how it had asked “a group of respected public figures to act as Independent Reviewers, to examine our work and publish their findings”, further emphasizing: “We want to earn public trust for this work, and we don’t take that for granted.”

Fine words indeed. And the panel of independent reviewers that DeepMind assembled to act as an informal watchdog in patients’ and consumers’ interests did indeed contain well respected public figures, chaired by former Liberal Democrat MP Julian Huppert.

The panel was provided with a budget by DeepMind to carry out investigations of the reviewers’ choosing. It went on to produce two annual reports, flagging a number of issues of concern, including, most recently, a warning that Google might be able to exert monopoly power because Streams is being contractually bundled with streaming and data access infrastructure.

The reviewers also worried whether DeepMind Health would be able to insulate itself from Alphabet’s influence and commercial priorities — urging DeepMind Health to “look at ways of entrenching its separation from Alphabet and DeepMind more robustly, so that it can have enduring force to the commitments it makes”.

It turns out that was a very prescient concern since Alphabet/Google has now essentially dissolved the bits of DeepMind that were sticking in its way.

Including — it seems — the entire external reviewer structure…

A DeepMind spokesperson told us that the panel’s governance structure was created for DeepMind Health “as a UK entity”, adding: “Now Streams is going to be part of a global effort this is unlikely to be the right structure in the future.”

It turns out — yet again — that tech industry DIY ‘guardrails’ and self-styled accountability are about as reliable as verbal assurances. Which is to say, not at all.

This is also both deeply unsurprising and horribly shocking. The shock is really that big tech keeps getting away with this.

None of the self-generated ‘trust and accountability’ structures that tech giants are now routinely popping up with entrepreneurial speed (to act as public curios and talking shops that draw questions away from what they’re actually doing as people’s data gets sucked up for commercial gain) can in fact be trusted.

They are a shiny distraction from due process. Or to put it more succinctly: It’s PR.

There is no accountability if rules are self-styled and therefore unenforceable, because they can simply be overwritten and the goalposts moved at corporate will.

Nor can there be trust in any commercial arrangement unless it has adequately bounded — and legal — terms.

This stuff isn’t rocket science nor even medical science. So it’s quite the pantomime dance that DeepMind and Google have been merrily leading everyone on.

It’s almost as if they were trying to cause a massive distraction — by sicking up faux discussions of trust, fairness and privacy — to waste good people’s time while they got on with the lucrative business of mining everyone’s data.