Another massive financing round for an AI chip company is coming in today, this time for SambaNova Systems — a startup founded by a pair of Stanford professors and a longtime chip company executive — to build out the next generation of hardware to supercharge AI-centric operations.

SambaNova joins an already quite large class of startups looking to make AI operations much faster and more efficient by rethinking the actual substrate where the computations happen. The GPU has become increasingly popular among developers for its ability to handle, very quickly, the kinds of lightweight mathematics that AI operations require. Startups like SambaNova look to create a new platform from scratch, all the way down to the hardware, that is optimized exactly for those operations. The hope is that by doing that, it will be able to outclass a GPU in terms of speed, power usage, and even potentially the actual size of the chip. SambaNova today said it has raised a $56 million Series A financing round co-led by GV and Walden International, with participation from Redline Capital and Atlantic Bridge Ventures.

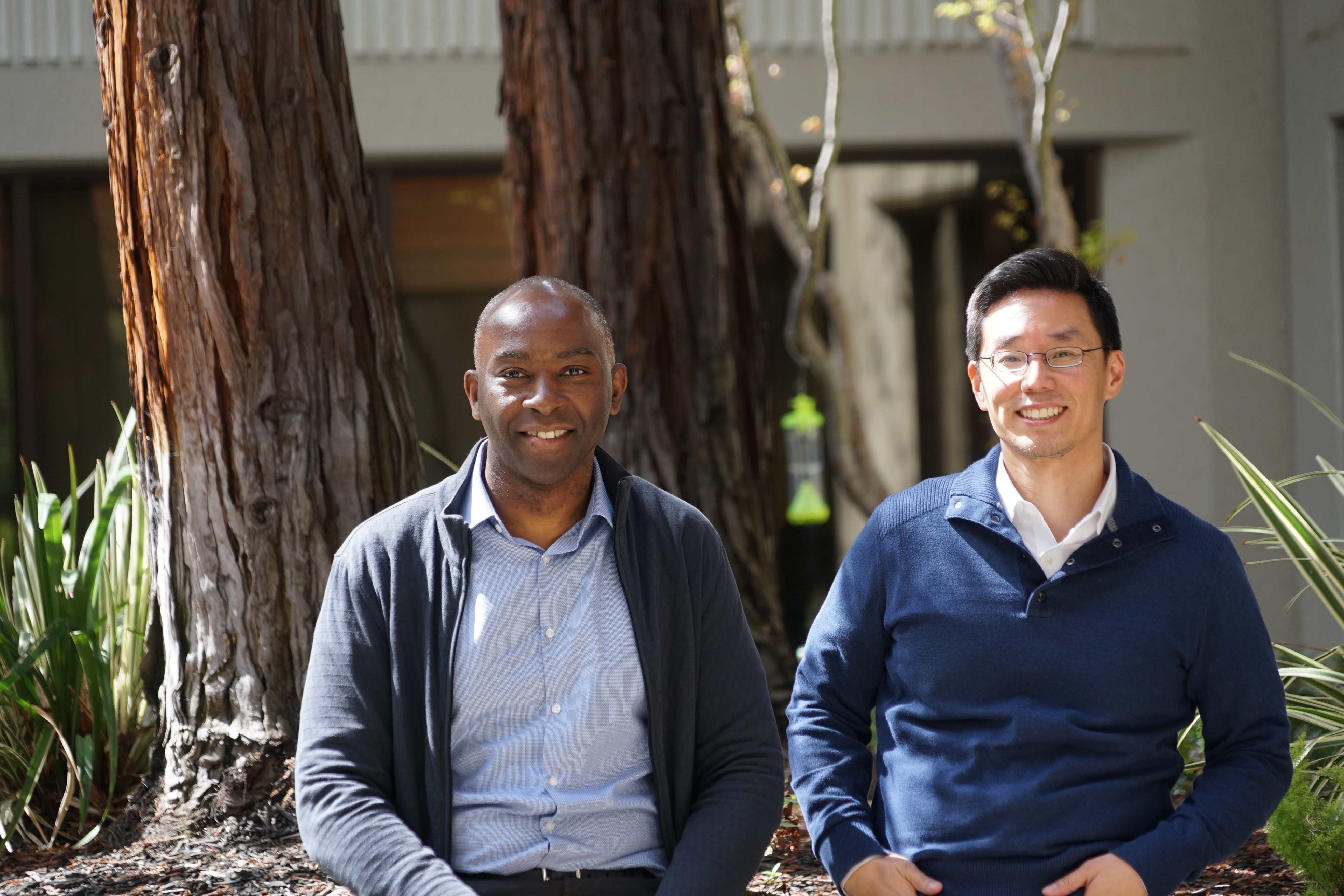

SambaNova is the product of technology from Kunle Olukotun and Chris Ré, two professors at Stanford, and is led by former Oracle SVP of development Rodrigo Liang, who was also a VP at Sun for almost 8 years. When looking at the landscape, the team at SambaNova worked backwards, first identifying which operations need to happen more efficiently and then figuring out what kind of hardware needs to be in place to make that happen. That boils down to a lot of linear algebra calculations done very, very quickly, which is something existing CPUs aren’t exactly tuned to do. And a common criticism from most of the founders in this space is that Nvidia GPUs, while much more powerful than CPUs when it comes to these operations, are still ripe for disruption.
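To make the "linear algebra done very quickly" point concrete, here is a minimal sketch of the kind of operation involved: one neural-network layer reduced to a matrix-vector multiply followed by a simple nonlinearity. The function names and the numbers are illustrative assumptions, not anything SambaNova has described, and real frameworks run this same arithmetic across millions of values at once.

```python
# Illustrative only: a single neural-network layer as plain linear algebra.
# The names (matvec, relu) and the values below are hypothetical examples.

def matvec(weights, inputs):
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]

def relu(values):
    """Clamp negative values to zero, a common layer nonlinearity."""
    return [max(0.0, v) for v in values]

# Two output neurons, three input features.
weights = [[0.5, -1.0, 2.0],
           [1.5, 0.0, -0.5]]
inputs = [2.0, 1.0, 0.5]

# Each output is a sum of multiply-adds, the operation AI chips try to speed up.
layer_output = relu(matvec(weights, inputs))
print(layer_output)
```

Every output value is just a row of multiply-and-add steps, and the rows are independent of one another, which is why hardware that can run many of them in parallel has an edge over a general-purpose CPU.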

“You’ve got these huge [computational] demands, but you have the slowing down of Moore’s law,” Olukotun said. “The question is, how do you meet these demands while Moore’s law slows. Fundamentally you have to develop computing that’s more efficient. If you look at the current approaches to improve these applications based on multiple big cores or many small, or even FPGA or GPU, we fundamentally don’t think you can get to the efficiencies you need. You need an approach that’s different in the algorithms you use and the underlying hardware that’s also required. You need a combination of the two in order to achieve the performance and flexibility levels you need in order to move forward.”

While a $56 million funding round for a series A might sound colossal, it’s becoming a pretty standard number for startups looking to attack this space, which has an opportunity to beat the big chipmakers and create a new generation of hardware that will be omnipresent in any device built around artificial intelligence, whether that’s a chip sitting on an autonomous vehicle doing rapid image processing or a server within a healthcare organization training models for complex medical problems. Graphcore, another chip startup, got $50 million in funding from Sequoia Capital, while Cerebras Systems also received significant funding from Benchmark Capital. Yet amid this flurry of investment activity, nothing has really shipped yet, and you’d define these companies raising tens of millions of dollars as pre-market.

Olukotun and Liang wouldn’t go into the specifics of the architecture, but they are looking to redo the operational hardware to optimize for the AI-centric frameworks that have become increasingly popular in fields like image and speech recognition. At its core, that involves a lot of rethinking of how interaction with memory occurs and what happens with heat dissipation for the hardware, among other complex problems. Apple, Google with its TPU, and reportedly Amazon have taken an intense interest in this space to design their own hardware that’s optimized for products like Siri or Alexa, which makes sense because dropping that latency to as close to zero as possible with as much accuracy as possible in the end improves the user experience. A great user experience leads to more lock-in for those platforms, and while the larger players may end up making their own hardware, GV’s Dave Munichiello — who is joining the company’s board — says this is basically a validation that everyone else is going to need the technology soon enough.

“Large companies see a need for specialized hardware and infrastructure,” he said. “AI and large-scale data analytics are so essential to providing services the largest companies provide that they’re willing to invest in their own infrastructure, and that tells us more investment is coming. What Amazon and Google and Microsoft and Apple are doing today will be what the rest of the Fortune 100 are investing in in 5 years. I think it just creates a really interesting market and an opportunity to sell a unique product. It just means the market is really large, if you believe in your company’s technical differentiation, you welcome competition.”

There is certainly going to be a lot of competition in this area, and not just from those startups. While SambaNova wants to create a true platform, there are a lot of different interpretations of where it should go, such as whether it should be two separate pieces of hardware that handle either inference or machine training. Intel, too, is betting on an array of products, as well as a technology called Field Programmable Gate Arrays (or FPGAs), which would allow for a more modular approach to building hardware specified for AI and which are designed to be flexible and change over time. Munichiello and Olukotun both argue that these require developers with special expertise in FPGAs, a sort of niche-within-a-niche that most organizations will probably not have readily available.

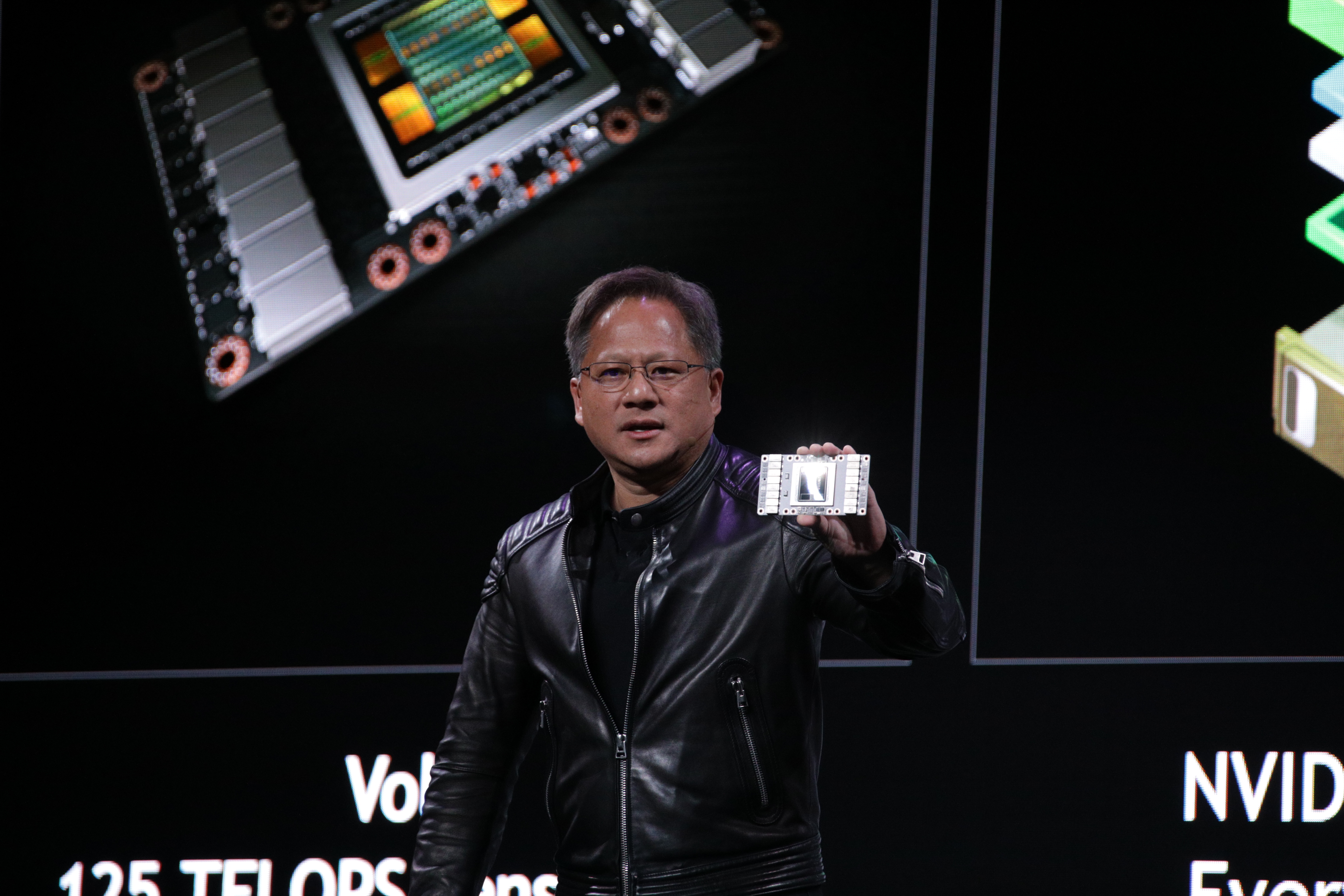

Nvidia has been a major beneficiary of the explosion of AI systems, but that explosion has also clearly exposed a ton of interest in investing in a new breed of silicon. There’s certainly an argument for developer lock-in on Nvidia’s platforms like CUDA. But there are a lot of new frameworks, like TensorFlow, that create a layer of abstraction and are increasingly popular with developers. That, too, represents an opportunity for both SambaNova and other startups, who can just work to plug into those popular frameworks, Olukotun said. Cerebras Systems CEO Andrew Feldman also addressed some of this on stage at the Goldman Sachs Technology and Internet Conference last month.

“Nvidia has spent a long time building an ecosystem around their GPUs, and for the most part, with the combination of TensorFlow, Google has killed most of its value,” Feldman said at the conference. “What TensorFlow does is, it says to researchers and AI professionals, you don’t have to get into the guts of the hardware. You can write at the upper layers and you can write in Python, you can use scripts, you don’t have to worry about what’s happening underneath. Then you can compile it very simply and directly to a CPU, TPU, GPU, to many different hardwares, including ours. If in order to do that work, you have to be the type of engineer that can do hand-tuned assembly or can live deep in the guts of hardware, there will be no adoption… We’ll just take in their TensorFlow, we don’t have to worry about anything else.”

(As an aside, I was once told that CUDA and those other lower-level platforms are really used by AI wonks like Yann LeCun building weird AI stuff in the corners of the Internet.)

There are also two big question marks for SambaNova. First, it’s very new, having started in just November, while many of these efforts at both startups and larger companies have been years in the making. Munichiello’s answer to this is that the development of those technologies did, indeed, begin a while ago, and that’s not a terrible thing as SambaNova just gets started in the current generation of AI needs. The second, among some in the Valley, is that most of the industry just might not need hardware that does these operations in a blazing fast manner. That second concern, you might argue, is eased by the fact that so many of these companies are getting so much funding, with some already reaching close to billion-dollar valuations.

But, in the end, you can now add SambaNova to the list of AI startups that have raised enormous rounds of funding, one that stretches out to include a myriad of companies around the world like Graphcore and Cerebras Systems, as well as a lot of reported activity out of China with companies like Cambricon Technology and Horizon Robotics. This effort does, indeed, require significant investment, not only because it’s hardware at its base, but also because these companies have to actually convince customers to deploy that hardware and start tapping the platforms it creates, a hurdle that supporting existing frameworks hopefully lowers.

“The challenge you see is that the industry, over the last ten years, has underinvested in semiconductor design,” Liang said. “If you look at the innovations at the startup level all the way through big companies, we really haven’t pushed the envelope on semiconductor design. It was very expensive and the returns were not quite as good. Here we are, suddenly you have a need for semiconductor design, and to do low-power design requires a different skillset. If you look at this transition to intelligent software, it’s one of the biggest transitions we’ve seen in this industry in a long time. You’re not accelerating old software, you want to create that platform that’s flexible enough [to optimize these operations] — and you want to think about all the pieces. It’s not just about machine learning.”