Spare a thought for Google. ‘Organizing the world’s information and making it universally accessible and useful’ isn’t exactly easy.

Even setting aside the sweating philosophical toil of algorithmically sifting for some kind of universal truth, truly living up to its own mission statement would require Mountain View to make massive philanthropic investments in global Internet infrastructure, coupled with Herculean language localization efforts.

After all — according to a Google search snippet — there are close to 7,000 languages globally…

Which means every piece of Google-organized information should also really be translated ~7,000 times, to enable the sought-after universal access. Or at least until its Pixel Buds actually live up to the universal Babel Fish claims.

We’ll let Alphabet off also needing to invest in vast global educational programs to deliver universal literacy, given that it does also serve up video snippets and has engineered voice-based interfaces to disperse data orally, thereby expanding accessibility by not requiring that users be able to read in order to use its products. (This makes snippets of increasing importance to Google’s search biz, of course, if it’s to successfully transition into the air, as voice interfaces that read you ten possible answers would get very tedious, very fast.)

Really, a more accurate Google mission statement would include the qualifier “some of” after the word “organizing”. But hey, let’s not knock Googlers for dreaming impossibly big.

And while the company might not yet be anywhere close to meaningfully achieving its moonshot mission, it has just announced some tweaks to those aforementioned search snippets — to try to avoid creating problematic information hierarchies.

As its search results unfortunately have been.

Thing is, when a search engine makes like an oracle of truth, using algorithms to select and privilege a single answer per user-generated question, then, well, bad things can happen.

Like your oracle informing the world that women are evil. Or claiming President Obama is planning a coup. Or making all sorts of other wild and spurious claims.

Here’s a great thread to get you up to speed on some of the stupid stuff Google snippets have been suggestively passing off as ‘universal truth’ since they launched in January 2014…

“Last year, we took deserved criticism for featured snippets that said things like ‘women are evil’ or that former U.S. President Barack Obama was planning a coup,” Google confesses now, saying it’s “working hard” to “smooth out bumps” with snippets as they “continue to grow and evolve”.

Bumps! We guess what they mean to say is algorithmically exacerbated bias and very visible instances of major and alarming product failure.

“We failed in these cases because we didn’t weigh the authoritativeness of results strongly enough for such rare and fringe queries,” Google adds.

For “rare and fringe queries” you should also read: ‘People deliberately trying to game the algorithm’. Because that’s what humans do (and frequently why algorithms fail and/or suck).

Sadly Google does not specify what proportion of search queries are rare and fringe, nor offer a more detailed breakdown of how it defines those concepts. Instead it claims:

“The vast majority of featured snippets work well, as we can tell from usage stats and from what our search quality raters report to us, people paid to evaluate the quality of our results. A third-party test last year by Stone Temple found a 97.4 percent accuracy rate for featured snippets and related formats like Knowledge Graph information.”

But even ~2.6% of featured snippets and related formats being inaccurate translates into a staggering amount of potential servings of fake news given the size of Google’s search business. (A Google snippet tells me the company “now processes over 40,000 search queries every second on average… which translates to over 3.5 billion searches per day and 1.2 trillion searches per year worldwide”.)
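For the back-of-the-envelope inclined, here is a rough Python sketch of that arithmetic. It uses only the figures quoted above (40,000 queries per second, a 97.4 percent accuracy rate). Google does not say what share of searches actually display a featured snippet, so `snippet_share` is a purely hypothetical parameter, and a value of 1.0 gives an unrealistic ceiling rather than an estimate.

```python
# Figures quoted in the article; everything else is an assumption.
QUERIES_PER_SECOND = 40_000      # "over 40,000 search queries every second"
ACCURACY_RATE = 0.974            # Stone Temple's reported accuracy rate
SECONDS_PER_DAY = 24 * 60 * 60
DAYS_PER_YEAR = 365

queries_per_day = QUERIES_PER_SECOND * SECONDS_PER_DAY
queries_per_year = queries_per_day * DAYS_PER_YEAR


def inaccurate_servings_per_day(snippet_share: float) -> float:
    """Rough count of inaccurate snippet servings per day.

    snippet_share is hypothetical: the fraction of searches that show a
    featured snippet or related format. Google does not publish it.
    """
    if not 0.0 <= snippet_share <= 1.0:
        raise ValueError("snippet_share must be between 0 and 1")
    error_rate = 1.0 - ACCURACY_RATE
    return queries_per_day * snippet_share * error_rate


if __name__ == "__main__":
    print(f"Queries per day:  {queries_per_day:,}")
    print(f"Queries per year: {queries_per_year:,}")
    # Ceiling only: assumes every single search shows a snippet.
    print(f"Ceiling on inaccurate servings per day: "
          f"{inaccurate_servings_per_day(1.0):,.0f}")
```

The daily and yearly totals land at roughly 3.5 billion and 1.2 trillion, in line with the snippet's own numbers. Whatever the real snippet share is, even a small fraction of that daily volume multiplied by a 2.6 percent error rate is a lot of wrong answers.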

Google also flags the launch last April of updated search quality rater guidelines for IDing “low-quality webpages” — claiming this has helped it combat the problem of snippets serving wrong, stupid and/or biased answers.

“This work has helped our systems better identify when results are prone to low-quality content. If detected, we may opt not to show a featured snippet,” it writes.

Though clearly, as Nicas’ Twitter thread illustrates, Google still had plenty of work to do on the stupid snippet front as of last fall.

In his thread Nicas also noted that a striking facet of the problem for Google is the tendency for the answers it packages as ‘truth snippets’ to actually reflect how a question is framed — thereby “confirming user biases”. Aka the filter bubble problem.

Google is now admitting as much, as it blogs about the reintroduced snippets, discussing how the answers it serves can end up contradicting each other depending on the query being asked.

“This happens because sometimes our systems favor content that’s strongly aligned with what was asked,” it writes. “A page arguing that reptiles are good pets seems the best match for people who search about them being good. Similarly, a page arguing that reptiles are bad pets seems the best match for people who search about them being bad. We’re exploring solutions to this challenge, including showing multiple responses.”

So instead of a single universal truth, Google is flirting with multiple choice relativism as a possible engineering solution to make its suggestive oracle a better fit for messy (human) reality (and bias).

“There are often legitimate diverse perspectives offered by publishers, and we want to provide users visibility and access into those perspectives from multiple sources,” writes Google, self-quoting its own engineer employee, Matthew Gray.

No shit Sherlock, as the kids used to say.

Gray leads the featured snippets team, and is thus presumably the techie tasked with finding a viable engineering workaround for humanity’s myriad shades of grey. We feel for him, we really do.

Another snippets tweak Google says it’s toying with — in this instance mostly to make itself look less dumb when its answers misfire in relation to the specific question being asked — is to make it clearer when it’s showing only a near match for a query, not an exact match.

“Our testing and experiments will guide what we ultimately do here,” it writes cautiously. “We might not expand use of the format, if our testing finds people often inherently understand a near-match is being presented without the need for an explicit label.”

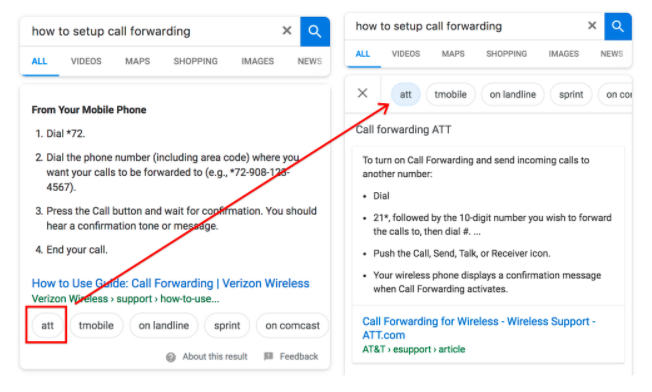

Google also notes that it recently launched another feature that lets users interact with snippets by providing a nugget more input to select the correct one to be served.

It gives the example of a question asking ‘how to set up call forwarding’ — which of course varies by carrier (and, er, country, and device being used… ). Google’s solution? To show a bunch of carriers as labels people can click on to pick the answer that fits.

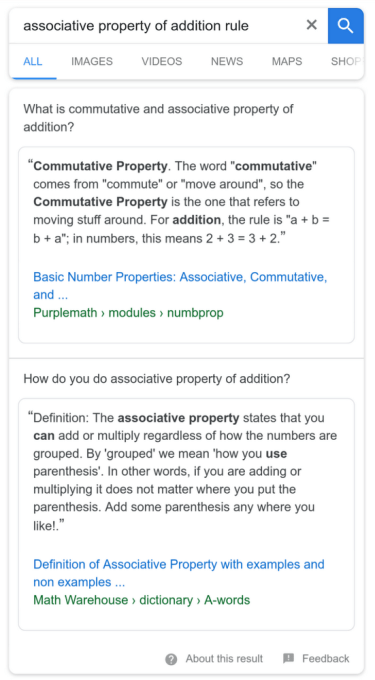

Another tweak Google slates as coming soon — and “designed to help people better locate information” — will show more than one featured snippet related to what was originally being searched for.

Albeit, on mobile this will apparently work by stacking snippets on top of one another, so one is still going to come out on top…

“Showing more than one featured snippet may also eventually help in cases where you can get contradictory information when asking about the same thing but in different ways,” it adds, suggesting Google’s plan to burst filter bubbles is to actively promote counter speech and elevate alternative viewpoints.

If so, it may need to tread carefully to avoid bubbling up radically hateful points of view, as it agrees its recommendation engines on YouTube currently can, for example. It has also had problems with algorithms cribbing dubious views off of Twitter and parachuting them into the top of its general search results.

“Featured snippets will never be absolutely perfect, just as search results overall will never be absolutely perfect,” it concludes. “On a typical day, 15 percent of the queries we process have never been asked before. That’s just one of the challenges along with sifting through trillions of pages of information across the web to try and help people make sense of the world.”

So it’s not yet quite ‘50 shades of snippets’ being served up in Google search, but that one universal truth is clearly beginning to fray.