Slowly, but surely, the Baxter robot is learning. It starts as a series of random grasps — the big, red robot pokes and prods clumsily at objects on the table in front of it. The process is pretty excruciating to us humans, with around 50,000 grasps unfolding over the course of a month of eight-hour days. The robot is learning through tactile feedback and trial and error — or, as the Carnegie Mellon computer science team behind the project puts it, it’s learning about the world around it like a baby.

In a paper titled “The Curious Robot: Learning Visual Representations via Physical Interactions,” the team demonstrates how an artificial intelligence can be trained to learn about objects by repeatedly interacting with them. “For example,” the CMU students write, “babies push objects, poke them, put them in their mouth and throw them to learn representations. Towards this goal, we build one of the first systems on a Baxter platform that pushes, pokes, grasps and observes objects in a tabletop environment.”

By the time we arrive at the CMU campus, Baxter has, thankfully, already slogged through the process numerous times. Lab assistant Dhiraj Gandhi has set out a demo for us. The robot stands behind a table and Gandhi lays out a strange cross-section of objects. There’s a pencil case, an off-brand Power Ranger, some car toys and a stuffed meerkat, among others — dollar-bin tchotchkes selected for their diverse and complex shapes.

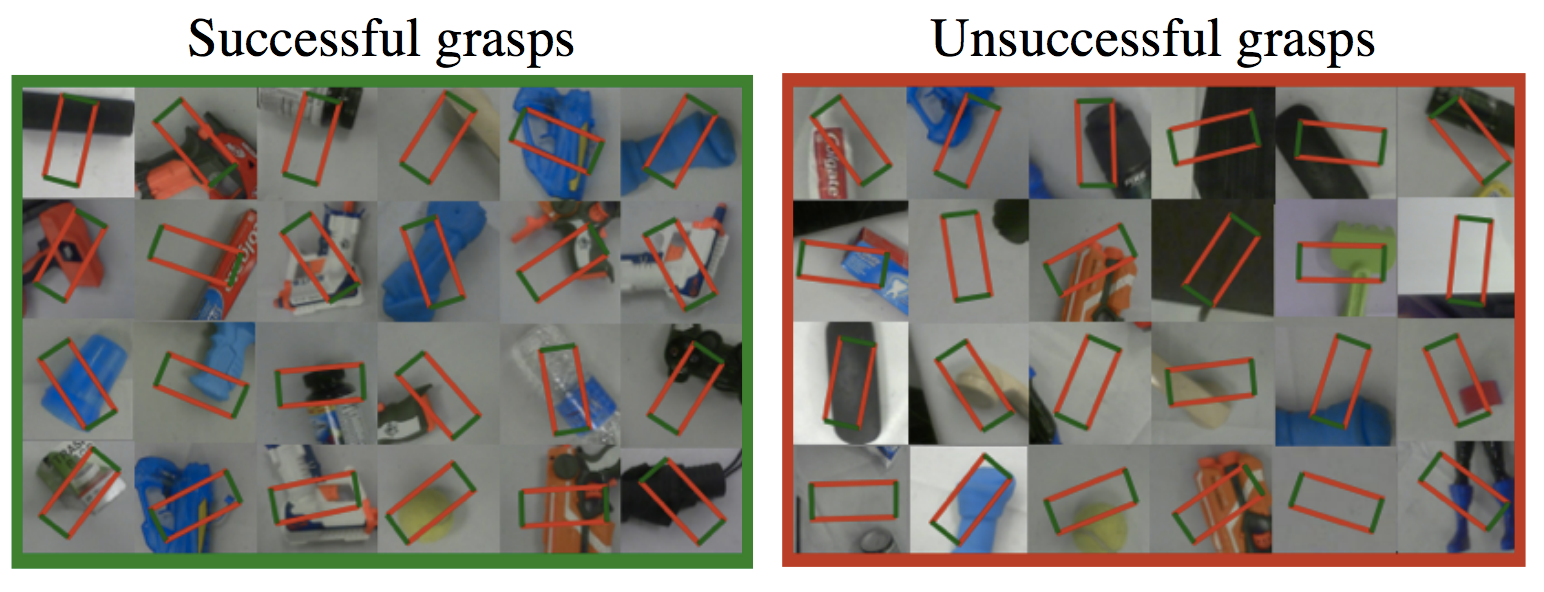

The demo is a combination of familiar and unfamiliar objects, and the contrast is immediately apparent. When the robot recognizes an object, it grasps it firmly and, with a smile on its tablet-based face, drops it into the appropriate box. If the object is unfamiliar, the face contorts, turning red and confused, but it’s nothing that another 50,000 or so grasps can’t solve.

The research marks a change from more traditional forms of computer vision learning, in which systems are taught to recognize objects through a “supervised” process that involves the inputting of labels. CMU’s robot teaches itself all on its own. “Right now what happens in computer vision is you have passive data,” explains Gandhi. “There is no interaction between the image and how you get the label. What we want is that you get active data as you interact with the objects. Through that data, we want to learn features that will be useful for other vision tasks.”
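To make Gandhi’s distinction concrete, here is a minimal Python sketch of how “active” data might be gathered: the robot tries a grasp, its own gripper reports whether the grasp held, and that outcome becomes the label, with no human annotator involved. This is an illustration only, not the CMU team’s code. The names `GraspSample`, `simulated_gripper_feedback` and `collect_grasps` are hypothetical, and a simple alignment rule stands in for the real tactile sensors.

```python
from __future__ import annotations

import random
from dataclasses import dataclass


@dataclass
class GraspSample:
    image_patch: list[float]  # stand-in for camera pixels around the grasp point
    angle_deg: int            # gripper angle that was tried
    success: bool             # label produced by the robot's own feedback


def simulated_gripper_feedback(object_angle_deg: int, grasp_angle_deg: int) -> bool:
    """Hypothetical stand-in for tactile feedback: the grasp 'holds' when the
    gripper is within 20 degrees of the object's orientation."""
    diff = abs(object_angle_deg - grasp_angle_deg) % 180
    return min(diff, 180 - diff) <= 20


def collect_grasps(num_trials: int, seed: int = 0) -> list[GraspSample]:
    """Try random grasps and record each outcome as a self-generated label."""
    rng = random.Random(seed)
    samples = []
    for _ in range(num_trials):
        object_angle = rng.randrange(0, 180)     # unknown to the learner
        grasp_angle = rng.randrange(0, 180, 10)  # random trial-and-error guess
        patch = [rng.random() for _ in range(4)]  # placeholder "image" data
        success = simulated_gripper_feedback(object_angle, grasp_angle)
        samples.append(GraspSample(patch, grasp_angle, success))
    return samples
```

Calling `collect_grasps(50_000)` would yield a labeled dataset in which every label came from interaction rather than from a person typing it in, which is the contrast with “passive” data that Gandhi describes.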

To explain the importance of touch, Gandhi makes reference to an experiment from the mid-70s, in which a British researcher studied the development of two kittens. One was able to interact with the world normally, and the other was allowed to look at, but not touch, objects. The result was one poor kitten that was left unable to do normal kitten stuff. “The one who interacts with the environment learns how to crawl,” he explains, “but the one who was only able to observe wasn’t able to do those tasks.”

The system uses a 3D camera similar to the Kinect. The visual and tactile information Baxter gathers is sent to a deep neural network, where it’s cross-referenced against images in the ImageNet database. The additional touch data increases the robot’s identification accuracy by more than 10 percent over robots trained only with image data. “This is quite encouraging,” the team writes in the paper, “since the correlation between robot tasks and semantic classification tasks have been assumed for decades but never been demonstrated.”

The research is still in its early stages, but it’s promising. In the future, a combination of touch and vision learning could be used for sorting robots like the kind developed by ZenRobotics, which are designed to separate garbage from recycling. “When we talk about deploying [the system] in a real-world environment, it’s a very big challenge,” says Gandhi. “We want to solve that challenge, but right now, we’re taking baby steps toward it.”