With the announcement of Apple’s ARKit, I’ve been bombarded with questions about what ARKit means for Augmented Reality (AR) developers, specifically ones building hard mobile AR technologies.

We, along with our friends at Modsy, were even called out in a recent TechCrunch piece as being “Sherlocked” by Apple. So let me make it very clear up front what ARKit does to the AR developer market: It grows it.

ARKit history from the outside

The introduction of ARKit has been a long time coming. Apple’s acquisition of Metaio in 2015 signaled to everyone in the AR community that Apple was officially pushing hard into Augmented Reality. The Metaio SDK, as good as it was, was not quite up to Apple standards, so we figured it would be a few years before native AR support was released. In the intervening period, everyone knew there would be a growing market for AR apps and, as a result, for AR SDKs. That has proven true.

When the iPhone 7 was released, we expected Apple would release an AR framework alongside it, so we immediately halted further development of our SDK for iOS. However, in later conversations, the Apple team told us they wanted to better understand how the dual camera could be used for longer-range depth before an AR framework was released.

The interaxial distance between the cameras on the iPhone 7 really only gives about 18 inches of quality depth return, which is good for portrait depth effects but not for longer-range stereoscopic depth.
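To see why a small interaxial distance limits useful depth range, here is a minimal sketch of the standard stereo relationship: depth is Z = f * B / d (focal length times baseline over disparity), so a fixed disparity error produces a depth error that grows with the square of the distance. The baseline, focal length, and disparity error below are illustrative assumptions only, not Apple specifications, and the function name is hypothetical.

```python
def depth_error(z_m, baseline_m, focal_px, disparity_err_px=0.25):
    """Approximate depth uncertainty (meters) for a rectified stereo pair.

    From Z = f * B / d, a disparity error of delta_d gives
    delta_Z ~= Z**2 / (f * B) * delta_d.
    """
    return (z_m ** 2) / (focal_px * baseline_m) * disparity_err_px


# Assumed, illustrative values (NOT measured from any real phone).
BASELINE_M = 0.01   # about 1 cm between the two lenses
FOCAL_PX = 3000.0   # focal length expressed in pixels

if __name__ == "__main__":
    for z in (0.25, 0.5, 1.0, 2.0, 4.0):
        err_cm = depth_error(z, BASELINE_M, FOCAL_PX) * 100
        print(f"{z:4.2f} m -> +/- {err_cm:.1f} cm")
```

Under these assumptions, doubling the distance quadruples the depth error, while doubling the baseline halves it. That is the tradeoff a phone-sized camera spacing runs into at longer range.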

Expecting that Apple would be doubling down on the dual camera depth setup, we thought Apple would be either modifying the camera positions for the iPhone 8 or going with a monocular solution.

Based on ARKit’s device support, it is now clear that single-camera (monocular) SLAM is the core technology they are implementing, though ARKit might also be doing something tricky with the dual cameras on the 7. With rumors about better depth hardware coming on the iPhone 8, they could still surprise everyone with more robust depth support.

So what does this mean for third party AR developers?

Companies that have developed a monocular SLAM SDK for iOS will need to sunset that product. That’s pretty much it. Considering there were about a dozen companies worldwide working on that, the impact on the AR market is minimal.

In reality, ARKit takes one of the biggest costs off their books, allowing them to focus on building tools and products for some of the deeper problems in AR, namely mapping.

We, along with Wikitude, Kudan, and others, have filled the hole that Metaio left behind for AR developers. In the end, though, everyone in the AR developer community knew that the hardware companies would eventually integrate these technologies into their frameworks; we just didn’t know when.

Apple’s not the only AR player

The line of reasoning that Apple is killing third party AR companies ignores the reality that Apple is only a fraction of the mobile AR market. The lack of a robust SLAM solution for Android remains an open problem and opportunity for hard AR developers.

The Android ecosystem is so diverse that making a standard monocular SLAM framework is orders of magnitude harder than it is for the small set of cameras that Apple has to deal with. We expect, however, that Google will take up the challenge of an AR framework soon, so Android SLAM developers shouldn’t bank on winning in that market in the long term.

As I have written previously, the introduction of AR Studio by Facebook has an impact on the AR developer market at least as big as ARKit’s, if not bigger.

The cross-platform nature of AR Studio across all of the Facebook apps, with almost 2 billion installs, means the barriers to entry for users are orders of magnitude lower, something developers frequently ignore. Snapchat of course wants to be one of the winners in the AR race, and they have a good start. If Snap wants to keep up, though, supporting AR developers and building a publishing platform for independent developers is critical.

People are arguing that Apple will do its best to prevent AR from being used inside other apps on iOS. Whether or not Apple decides to prevent Facebook, Snap, or other companies from building AR experiences in their platform apps is an open question. That would be anti-competitive, and if Apple took this approach, it would face significant resistance from users and developers.

There is more to AR than depth tracking

As I’ve outlined in detail before, there are a massive number of unsolved problems and opportunities remaining for AR developers. Hard-won experience in the entire process of delivering AR experiences to consumers is hugely valuable, and the market is still wide open for the majority of those problems. 95% of success in AR comes through helping users and clients with 3D modeling and optimization, content infrastructure, content delivery, rendering optimization, user interfaces, user workflows, and partnerships. The biggest opportunities still lie in tying the ecosystems together as basic infrastructure with wide-scale maps, content, and hardware integrations.

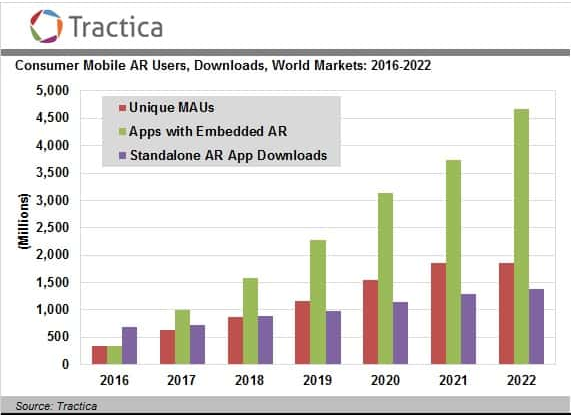

Expect that as new AR hardware becomes available, the same arguments will come up, and companies will need to anticipate and adjust rapidly. AR is such a big opportunity and such a fast-growing market that understanding users and how they interact with AR is the key to winning.