Though Facebook is rarely mentioned alongside Apple, Microsoft and Amazon in discussions about conversational AI, the company has published a trove of papers that underscore a deep interest in dialog systems. As has become clear with Siri, Cortana and Alexa, dialog is hard: it takes more than good speech recognition to deliver a killer experience to users. From the sidelines, Facebook has been tinkering with big challenges like natural language understanding and text generation. And today the Facebook AI Research team added to its portfolio with a paper bringing negotiation into the conversation (all puns intended).

Facebook’s team smashed game theory together with deep learning to equip machines to negotiate with humans. By applying rollout techniques more commonly used in game-playing AIs to a dialog scenario, Facebook was able to create machines capable of complex bargaining.

To start, Facebook dreamed up an imaginary negotiation scenario. Humans on Amazon’s Mechanical Turk were given an explicit value function and told to negotiate in natural language over how to split a pot of random objects (five books, three hats and two balls) so as to maximize their reward. The game was capped at ten rounds of dialog; under the rules, nobody received any reward if that limit was exceeded.
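To make the scoring rule concrete, here is a minimal Python sketch of how one agent's reward could be computed. The point values, the `reward` and `other_share` helpers, and the validity check are hypothetical illustrations, not Facebook's code; only the item counts and the ten-round cap come from the description above.

```python
# Hypothetical setup for illustration: the pot and the ten-round cap follow the
# article; the per-item point values below are made up.
POT = {"book": 5, "hat": 3, "ball": 2}
MAX_TURNS = 10


def reward(my_share, my_values, turns_used, pot=POT, max_turns=MAX_TURNS):
    """Score one agent's share of an agreed split under its private value function."""
    # Running past the turn limit wipes out everyone's reward.
    if turns_used > max_turns:
        return 0
    # A share cannot claim more of an item than the pot contains.
    for item, count in my_share.items():
        if not 0 <= count <= pot[item]:
            raise ValueError(f"invalid count for {item}: {count}")
    return sum(count * my_values[item] for item, count in my_share.items())


def other_share(my_share, pot=POT):
    """Whatever one agent does not take goes to the other agent."""
    return {item: pot[item] - my_share.get(item, 0) for item in pot}


# Hidden preferences: each agent sees only its own values (both hypothetical).
values_a = {"book": 0, "hat": 2, "ball": 2}
values_b = {"book": 1, "hat": 1, "ball": 1}

share_a = {"book": 0, "hat": 3, "ball": 2}          # A takes the hats and balls
print(reward(share_a, values_a, turns_used=6))                # → 10
print(reward(other_share(share_a), values_b, turns_used=6))   # → 5
print(reward(share_a, values_a, turns_used=11))               # → 0
```

With these made-up values the split suits both sides reasonably well, which is exactly the kind of outcome the two agents have to discover through dialog, since neither can see the other's value function.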

Because each agent had distinct hidden preferences, the two had to engage in dialog to sort out which objects should go to which agent. Over the course of these interactions, the machines naturally adopted many common negotiation tactics, such as feigning interest in a low-value item so that they could later concede it as if it were a valuable bargaining chip.

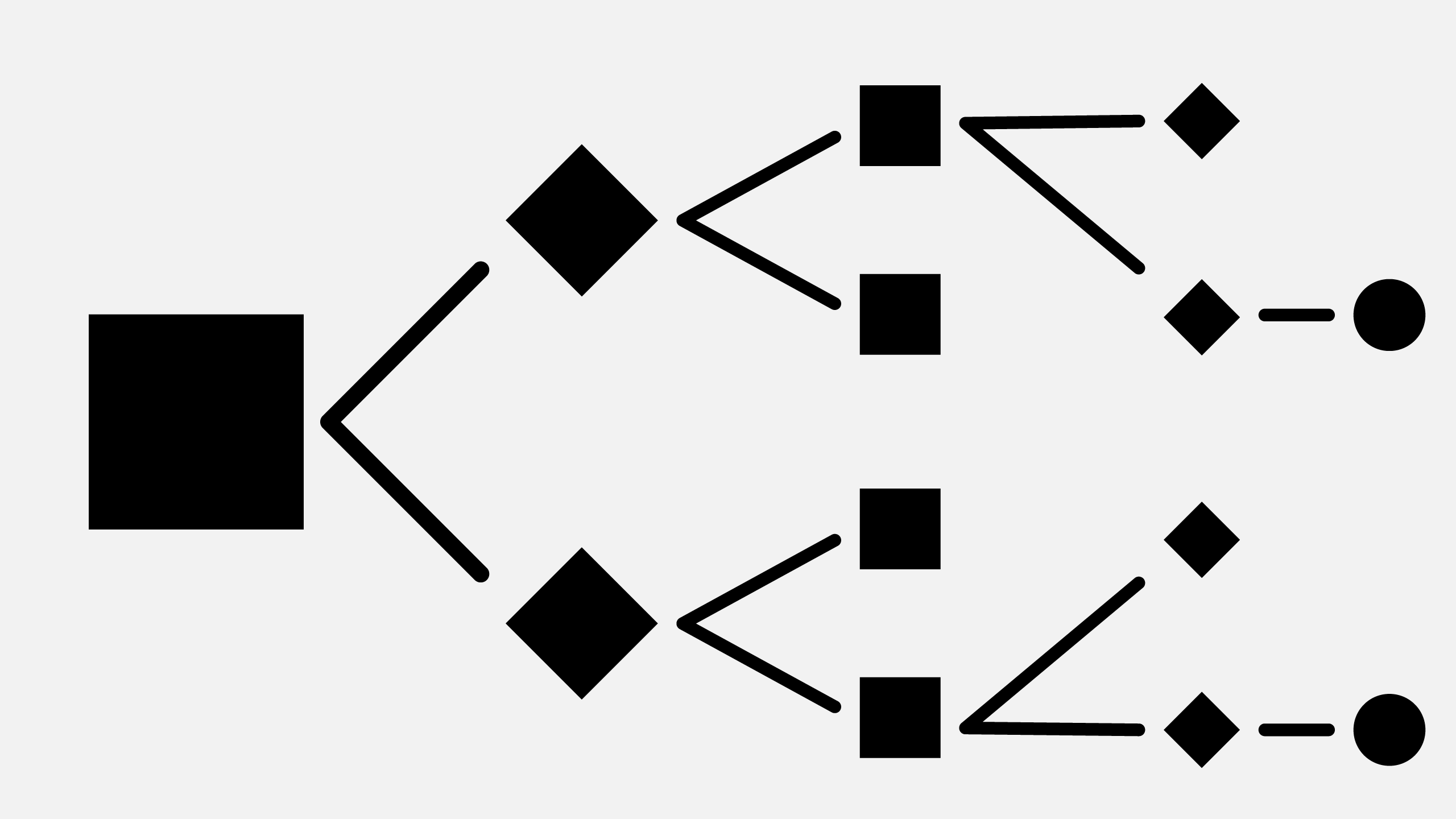

Under the hood, Facebook’s rollout technique takes the form of a decision tree. Decision trees are a critical component of many intelligent systems: they let a system model possible future states from the present one in order to make decisions. Imagine a game of tic-tac-toe. At any given point in the game, there is a finite set of options (the squares where you can place your “X”).

In that scenario, each move has an expected value. Humans don’t usually compute this value explicitly, but if you break down your decision process while playing, you are effectively doing a shorthand version of this math in your head.
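For a game as small as tic-tac-toe, the value of every move can be computed exactly. The sketch below uses minimax search, a standard technique chosen here for illustration: each move is scored by the outcome it leads to if both players play perfectly (+1 for an X win, 0 for a draw, -1 for an O win). Representing the board as a 9-character string read left to right, top to bottom is an assumption of this sketch.

```python
from functools import lru_cache

# Rows, columns and diagonals of a 3x3 board indexed 0..8.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


def winner(board):
    """Return "X" or "O" if someone has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


@lru_cache(maxsize=None)
def value(board, to_move):
    """Value of a position for X under perfect play: +1 win, 0 draw, -1 loss."""
    w = winner(board)
    if w is not None:
        return 1 if w == "X" else -1
    if " " not in board:
        return 0  # full board and no winner: a draw
    nxt = "O" if to_move == "X" else "X"
    children = [value(board[:i] + to_move + board[i + 1:], nxt)
                for i, cell in enumerate(board) if cell == " "]
    # X picks the child best for X; O picks the child worst for X.
    return max(children) if to_move == "X" else min(children)


def move_values(board, to_move):
    """Score each legal move by the value of the position it leads to."""
    nxt = "O" if to_move == "X" else "X"
    return {i: value(board[:i] + to_move + board[i + 1:], nxt)
            for i, cell in enumerate(board) if cell == " "}


print(move_values("XX OO    ", "X")[2])  # → 1: completing the top row wins
```

Against a perfect opponent the "expected" value collapses to a guaranteed one. For example, `move_values(" " * 9, "X")` scores every opening square as 0, because no first move can force a win.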

Games like tic-tac-toe are simple enough to be solved completely with a decision tree. More complex games like chess and Go require strategies and heuristics to cut down the number of states to consider (the total is almost unimaginably large). But even chess and Go are relatively simple compared to dialog.
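The claim that tic-tac-toe can be solved completely is easy to check by brute force. This sketch, assuming play stops at the first three-in-a-row or a full board, walks the entire game tree and counts every distinct game. Memoizing on the board is valid because the number of games that can follow a position depends only on that position.

```python
from functools import lru_cache

# Rows, columns and diagonals of a 3x3 board indexed 0..8.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


def has_winner(board):
    """True if any line holds three identical non-blank marks."""
    return any(board[a] != " " and board[a] == board[b] == board[c]
               for a, b, c in LINES)


@lru_cache(maxsize=None)
def count_games(board=" " * 9, to_move="X"):
    """Count every distinct move sequence from this position to the end of the game."""
    if has_winner(board) or " " not in board:
        return 1  # the game is over: exactly one way to "finish" from here
    nxt = "O" if to_move == "X" else "X"
    return sum(count_games(board[:i] + to_move + board[i + 1:], nxt)
               for i, cell in enumerate(board) if cell == " ")


print(count_games())  # → 255168
```

A few hundred thousand games is trivial to enumerate; the same exhaustive walk is hopeless for chess or Go, which is why those games need the heuristics mentioned above.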

Dialog doesn’t draw from a finite set of outcomes: for any question, there is an infinite number of possible human responses. To model a conversation, researchers have to go to extra lengths to bound that uncertainty to a manageable size and scope. Choosing a negotiation scenario makes this possible. The language itself can take an infinite number of forms, but its intent generally clusters around a few simple outcomes (take the deal or reject it).
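Rollouts exploit that structure. Here is a minimal sketch of the idea under stated assumptions: for each candidate utterance, simulate many possible continuations of the conversation through to the end (deal or no deal), average the final rewards, and say the candidate with the best average. The `simulate` callback, the candidate proposals and their acceptance probabilities are hypothetical stand-ins for a learned model of the other negotiator; this is not Facebook's implementation.

```python
import random
from statistics import mean


def choose_by_rollouts(candidates, simulate, n_rollouts=100, rng=None):
    """Pick the candidate with the highest average simulated final reward.

    simulate(candidate, rng) is assumed to play the rest of the conversation
    forward after that candidate and return the final reward (0 if no deal).
    """
    if rng is None:
        rng = random.Random(0)  # fixed seed so results are reproducible
    scores = {c: mean(simulate(c, rng) for _ in range(n_rollouts))
              for c in candidates}
    best = max(candidates, key=lambda c: scores[c])
    return best, scores


# Hypothetical candidates: (my reward if the partner agrees, chance the partner agrees).
PROPOSALS = {
    "I want everything.": (10, 0.05),
    "I'll take the hats and balls.": (10, 0.7),
    "You take the hats, I'll take the rest.": (4, 0.95),
}


def toy_simulate(text, rng):
    """One sampled continuation: the partner either takes the deal or walks away."""
    reward_if_deal, p_accept = PROPOSALS[text]
    return reward_if_deal if rng.random() < p_accept else 0


best, scores = choose_by_rollouts(list(PROPOSALS), toy_simulate, n_rollouts=1000)
print(best)
```

The greedy demand is worth the most if accepted but almost never is, so averaging over simulated endings steers the agent toward a proposal that balances payoff against the odds of a deal (expected values of roughly 0.5, 7.0 and 3.8 here).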

But even in a bounded world, it’s still difficult to get machines to interact with humans in a believable way. To that end, Facebook first trained its models on negotiations between pairs of people. The trained models were then set to negotiate with each other and improved through reinforcement learning. At the end of each conversation, agents received rewards to guide improvement.
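Learning from a reward that arrives only when a conversation ends is a classic reinforcement learning setting. One common way to handle it is a policy-gradient update such as REINFORCE. The toy sketch below assumes that approach, with a hypothetical policy that simply chooses among three fixed proposals whose end-of-negotiation rewards are made up. A real dialog agent generates text word by word, so treat this as an illustration of the update rule, not of Facebook's training code.

```python
import math
import random


def softmax(logits):
    """Convert logits to probabilities (shifted by the max for numerical stability)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce(rewards, steps=2000, lr=0.1, seed=0):
    """Learn a policy over fixed proposals from end-of-negotiation rewards only."""
    rng = random.Random(seed)
    logits = [0.0] * len(rewards)
    baseline = 0.0  # running average of past rewards, used to reduce variance
    for _ in range(steps):
        probs = softmax(logits)
        action = rng.choices(range(len(rewards)), weights=probs)[0]
        reward = rewards[action]  # the only feedback, received when the "dialog" ends
        advantage = reward - baseline
        # d log softmax(logits)[action] / d logits[k] = 1[k == action] - probs[k]
        for k in range(len(logits)):
            indicator = 1.0 if k == action else 0.0
            logits[k] += lr * advantage * (indicator - probs[k])
        baseline = 0.9 * baseline + 0.1 * reward
    return softmax(logits)


probs = reinforce([1.0, 5.0, 2.0])
print([round(p, 3) for p in probs])
```

Starting from a uniform policy, probability mass shifts toward the proposal worth 5. The baseline does not change the direction the policy moves on average; it only makes individual updates less noisy.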

FAIR researchers Michael Lewis and Dhruv Batra explained to me that their algorithms were better at preventing individuals from making bad decisions than at ensuring they made the best decisions. That still matters: the team told me to imagine a calendar application that doesn’t try to find the best time for everyone but instead just tries to ensure the meeting actually happens.

As with a lot of research, the real-world application of this technology isn’t necessarily as explicit as the scenario simulated for the paper. Engineers often set up adversarial relationships between machines to improve outcomes. Think of generative adversarial networks, which can generate training data by having one machine produce data designed to fool another “gatekeeper” machine.

Semi-cooperative, semi-adversarial relationships, like the one between a coach and an athlete, could be an interesting next frontier, further connecting game theory and machine learning.

Facebook has open sourced its code from this research project. If you’re interested, you can read more about the work in the full paper.