Apple once led the pack with its intelligent assistant Siri, but in just a few years, Amazon, Microsoft and Google have chipped away at its lead.

Siri is a critical component of Apple’s vision for the future, so integral that the company was willing to spend $200 million to acquire Lattice Data over the weekend. The startup was working to transform the way businesses deal with paragraphs of text and other information that lives outside neatly structured databases. Its engineers are uniquely prepared to help Apple build an internal knowledge graph to power Siri and its next generation of intelligent products and services.

Broadly speaking, the Lattice Data deal was an acquihire. Apple paid roughly $10 million for each of Lattice’s 20 engineers, which is generally considered fair market value. Google paid about $500 million for DeepMind back in 2014, when the startup had roughly 75 employees, a portion of whom were machine learning developers. Give or take a few million, the math pretty much works out. But beneath the surface, the deal signals that Apple is willing to spend significant capital shoring up the backbone of Siri.

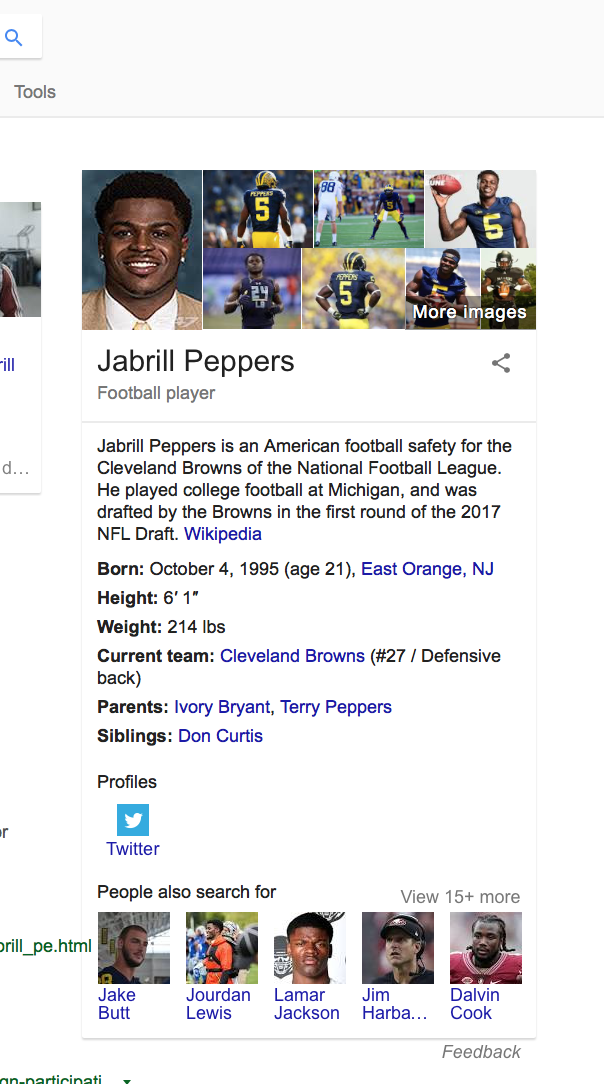

Apple and its peers grapple with the challenge of teaching conversational assistants basic knowledge about the world. Apple relies on a number of partnerships, including a major one with Yahoo, to provide Siri with the facts it needs to answer questions. It competes with Google, a company that possesses what is largely considered to be the crème de la crème of knowledge graphs. Apple surely has an interest in improving the size and quality of its knowledge graph while unshackling itself from partners.

Lattice’s experienced engineers are particularly important to Apple as it designs future products for an AI-first world. Companies like Microsoft, Facebook and Google have already declared their intentions to build up infrastructure to support the implementation of machine learning in as many products and services as possible. Apple brought on Russ Salakhutdinov in October 2016 to lead AI research at the company, and it has acquired startups like Turi and RealFace, but it still has a lot of work to do if it intends to remain competitive in AI in the long run.

“Google is applying machine and deep-learning to about 2,500 different use cases internally now. Apple should be doing the same,” asserted Chris Nicholson, CEO of Skymind, the creators of the DL4J deep learning library.

At Apple, the Lattice Data team could start by helping Apple get its knowledge graph up to speed. This infrastructure is integral to Apple’s plan to embed Siri into each of its products. It’s an ideal place to start because it both improves existing offerings like Siri search on Apple TV and lays the groundwork for future products like its rumored Amazon Echo competitor.

Siri for Apple TV allows for complex multi-part natural language searches.

A knowledge graph is a representation of known information about the world. Information within a knowledge graph can either come from structured data from a database or unstructured data scraped from a document or the internet.

When you use Siri to search iTunes, the results have to come from somewhere. A knowledge graph makes it possible to draw complex relationships between entries. Today, Siri on Apple TV allows for complex natural language searches like “Find TV shows for kids” followed by “Only comedies.” A surprising amount of information is required to answer that request, and some of it might be buried in the summaries of the shows or scattered across the internet.
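To make the idea concrete, here is a minimal sketch, not Apple’s implementation, of a knowledge graph stored as subject–predicate–object triples and queried in two conversational turns. The show titles, the `Triple` class and the `subjects_with`/`refine` helpers are all hypothetical. Each fact is tagged with whether it came from a structured database or was pulled out of unstructured text such as a show summary.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Triple:
    subject: str
    predicate: str
    obj: str
    source: str  # "database" (structured) or "text" (extracted from unstructured data)


# Hypothetical toy catalog; titles and facts are invented for illustration.
GRAPH = [
    Triple("Show A", "audience", "kids", "database"),
    Triple("Show A", "genre", "comedy", "text"),  # e.g. only stated in the show's summary
    Triple("Show B", "audience", "kids", "database"),
    Triple("Show B", "genre", "drama", "database"),
    Triple("Show C", "audience", "adults", "database"),
    Triple("Show C", "genre", "comedy", "database"),
]


def subjects_with(graph, predicate, obj):
    """Return the set of subjects linked to `obj` by `predicate`."""
    return {t.subject for t in graph if t.predicate == predicate and t.obj == obj}


def refine(results, graph, predicate, obj):
    """Narrow an earlier result set, as a follow-up like 'Only comedies' would."""
    return results & subjects_with(graph, predicate, obj)


# Turn 1: "Find TV shows for kids"
kids_shows = subjects_with(GRAPH, "audience", "kids")
# Turn 2: "Only comedies"
kids_comedies = refine(kids_shows, GRAPH, "genre", "comedy")
print(sorted(kids_shows))     # ['Show A', 'Show B']
print(sorted(kids_comedies))  # ['Show A']
```

If the graph held only the database-sourced triples, the second turn would come back empty: the one fact that makes “Show A” a comedy lives in text, which is exactly the kind of data Lattice specialized in extracting.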

“Machine learning algorithms produce better results the more data you expose them to,” explained Nicholson. “So if you can find a way to extract value from unstructured data, you’re tapping the largest data set in the world, and the expectation would be that it produce the best results.”

Google’s knowledge graph returns basic information on popular topics so you don’t always have to click on a website to get what you’re looking for.

The problem with extracting data from unstructured sources is that it’s difficult to verify the accuracy of the information being pulled. Dr. Dan Klein, chief scientist at Semantic Machines, a startup building its own conversational AI, explained to me that companies typically run shallow natural language models to pull dates and facts from text sources. Initially this process is probabilistic, meaning that whether a piece of text is labeled as important data is a matter of confidence and likelihood. But once that data is extracted, it’s effectively treated as a certainty.
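The pipeline Klein describes can be sketched roughly as follows. This is a simplified illustration under stated assumptions: the extractor scores, the `Candidate` class, the `pipeline_load` helper and the 0.5 cutoff are all hypothetical. The key step is that the confidence score is discarded the moment a candidate fact passes the threshold.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    subject: str
    predicate: str
    obj: str
    confidence: float  # extractor's probability that the fact is correct


# Hypothetical output of a shallow extractor run over show summaries.
CANDIDATES = [
    Candidate("Show A", "genre", "comedy", 0.92),
    Candidate("Show A", "audience", "kids", 0.55),
    Candidate("Show D", "genre", "comedy", 0.51),
    Candidate("Show D", "audience", "kids", 0.60),
    Candidate("Show E", "genre", "comedy", 0.30),
]


def pipeline_load(candidates, threshold=0.5):
    """Keep candidates at or above `threshold` and drop their scores.

    Downstream, every stored fact is treated as certain: a 0.51 guess and a
    0.92 guess become indistinguishable.
    """
    return {(c.subject, c.predicate, c.obj) for c in candidates if c.confidence >= threshold}


facts = pipeline_load(CANDIDATES)
print(len(facts))  # 4
```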

“You can do a better job of extracting unstructured data if you track confidence all the way through,” added Klein.

This is the idea behind Stanford professor Christopher Ré’s work on DeepDive, which was ultimately commercialized as Lattice Data. Classical databases assume everything they store is correct, so later queries might unwittingly return false information. You can better account for this dangerous uncertainty by tracking how well vetted each piece of information is. A unified approach, rather than a pipeline, increases accuracy and makes it clear what is known, unknown and uncertain at any given time, Klein told me.
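What “tracking confidence all the way through” buys can be shown with a deliberately naive sketch. It is not DeepDive’s method: DeepDive performs probabilistic inference over a factor graph. This sketch instead assumes each extracted fact is independent, so the confidence that a show satisfies a multi-part query is simply the product of its facts’ probabilities. The data and the `answer_with_confidence` helper are hypothetical. A thresholding pipeline would return both shows below as equally certain answers. Keeping the scores ranks them and exposes how shaky the second one is.

```python
# Hypothetical extractor output: (subject, predicate, object) -> probability correct.
CANDIDATES = {
    ("Show A", "genre", "comedy"): 0.92,
    ("Show A", "audience", "kids"): 0.55,
    ("Show D", "genre", "comedy"): 0.51,
    ("Show D", "audience", "kids"): 0.60,
    ("Show E", "genre", "comedy"): 0.30,
}


def answer_with_confidence(facts, conditions):
    """Score each subject by the probability that all `conditions` hold.

    Simplifying assumption: facts are independent, so the joint probability is
    the product of the individual probabilities. A missing fact counts as 0.
    """
    subjects = {s for (s, _, _) in facts}
    scores = {}
    for s in subjects:
        p = 1.0
        for predicate, obj in conditions:
            p *= facts.get((s, predicate, obj), 0.0)
        if p > 0:
            scores[s] = p
    # Most confident answers first.
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


results = answer_with_confidence(CANDIDATES, [("audience", "kids"), ("genre", "comedy")])
for subject, p in results:
    print(f"{subject}: {p:.3f}")
# Show A: 0.506
# Show D: 0.306
```

An assistant built on scores like these could, for instance, present the top answer confidently and hedge or ask a follow-up question about the weaker one.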

Greater confidence in the information you’re extracting allows you to create larger, more connected knowledge graphs that can accommodate more complex searches. This gives any machine intelligence-powered services that sit on top of the data an edge over competitors. Siri could be improved to answer a wider variety of questions, accounting for its own uncertainty to deliver a better customer experience.